zhound420

Hi there, I wanted to mention that if you guys think this is a good idea and want to make it part of the base toolkit, you could add RabbitMQ...

> Hey can you point out the exact use cases of this tool?
> How will it help in agent-to-agent communication?

I did write a readme.md for the toolkit, here's...

Conflicts resolved in requirements.txt. The toolkit now has its own requirements.txt

Okay, fresh install of the v0.7 branch. I imported the toolkit from my GitHub URL using the GUI. I see that tools.json is updated and a new toolkit directory exists in...

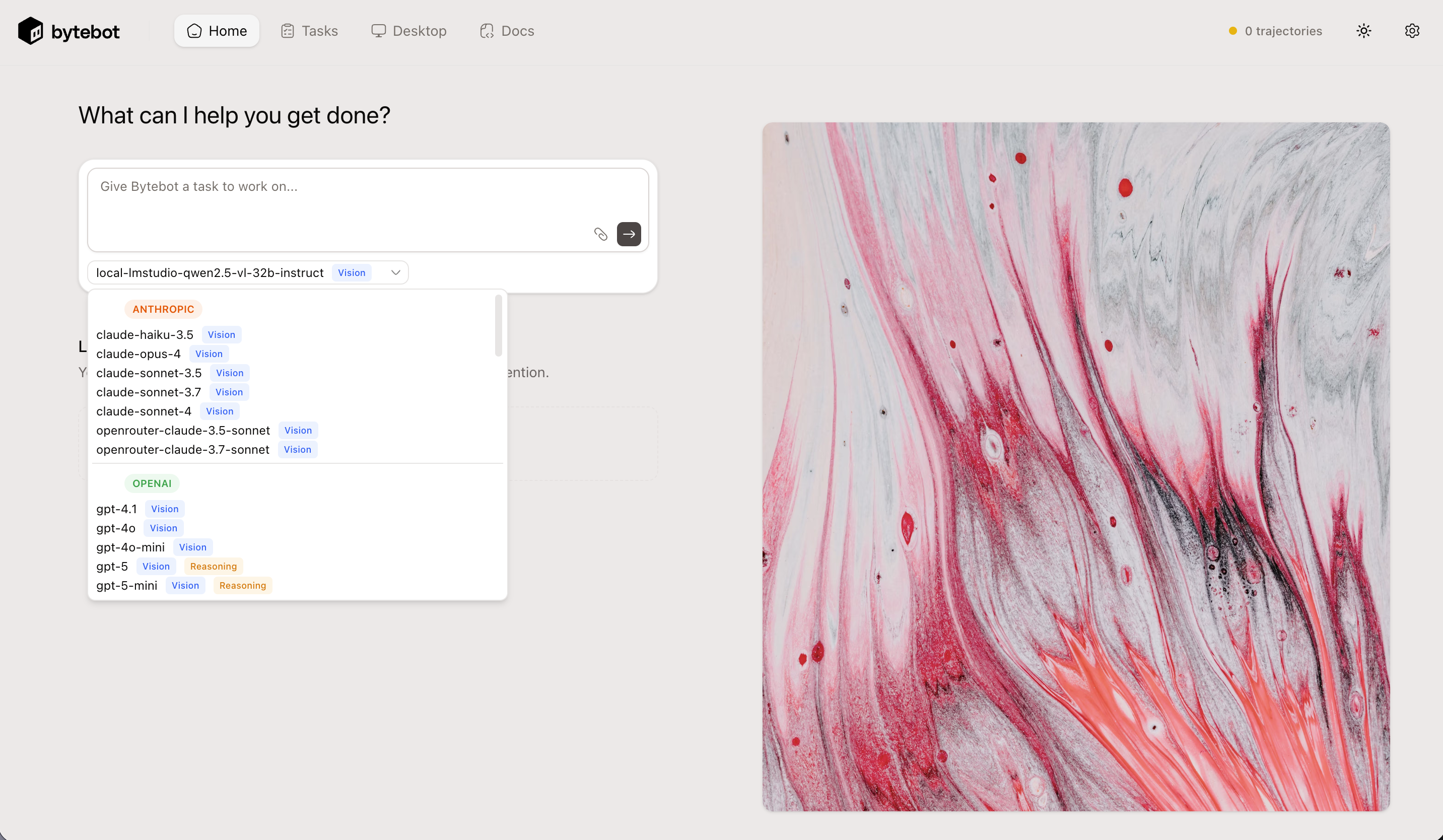

The Gemini and GPT-5 models are not working very well with Bytebot. They seem to have problems with screen coordinates. Only the Claude models are working well, Opus 4.1 especially fantastic...

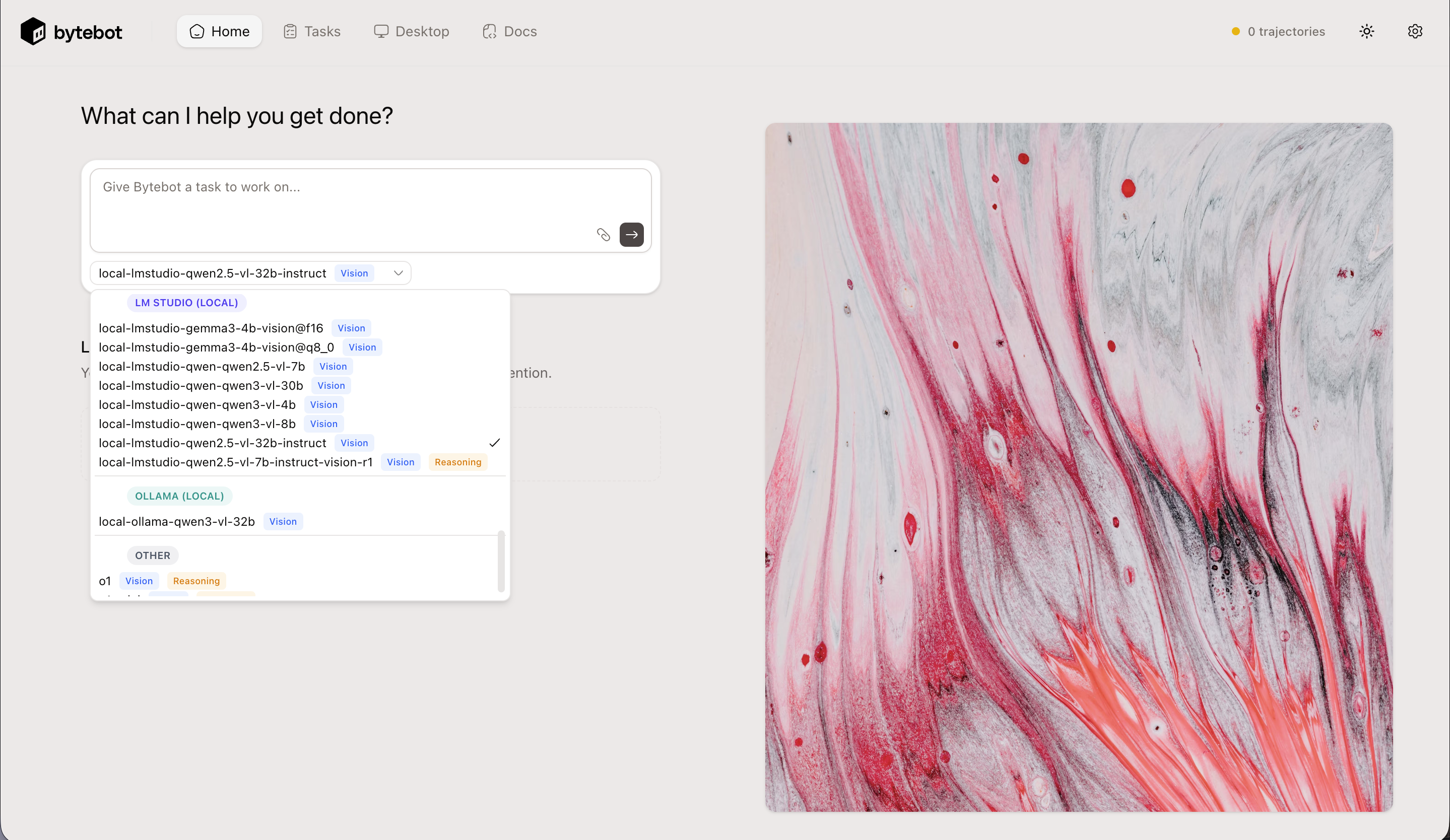

The problem is that Ollama doesn't support tools with the open-source models. You need to use vLLM or LMStudio. Both have better OpenAI API support, including tools/function calling. I have the...
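For anyone checking whether their local server really handles tools, here is a minimal sketch of what an OpenAI-style tool-calling request and response look like. The `click_at` tool and the helper names are hypothetical, made up for illustration, and the base URLs are the usual local defaults for LMStudio and vLLM as far as I know, so adjust them to your setup. Nothing is sent over the network here; it only builds the payload you would POST to `<base_url>/chat/completions` and parses a response of the expected shape.

```python
import json

# Assumed default local endpoints; change these to match your own server.
LMSTUDIO_BASE_URL = "http://localhost:1234/v1"
VLLM_BASE_URL = "http://localhost:8000/v1"


def build_tool_request(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat completion payload that advertises one tool."""
    click_tool = {
        "type": "function",
        "function": {
            "name": "click_at",  # hypothetical tool, for illustration only
            "description": "Click at the given screen coordinates.",
            "parameters": {
                "type": "object",
                "properties": {
                    "x": {"type": "integer"},
                    "y": {"type": "integer"},
                },
                "required": ["x", "y"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": [click_tool],
        "tool_choice": "auto",
    }


def extract_tool_calls(response: dict) -> list:
    """Return (name, arguments) pairs from an OpenAI-style response dict."""
    message = response["choices"][0]["message"]
    calls = []
    # A server without working tool support typically returns no tool_calls.
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        # Arguments arrive as a JSON-encoded string, not a dict.
        calls.append((fn["name"], json.loads(fn["arguments"])))
    return calls
```

If `extract_tool_calls` comes back empty for a prompt that clearly needs the tool, the backend is probably answering in plain text instead of emitting a structured tool call.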

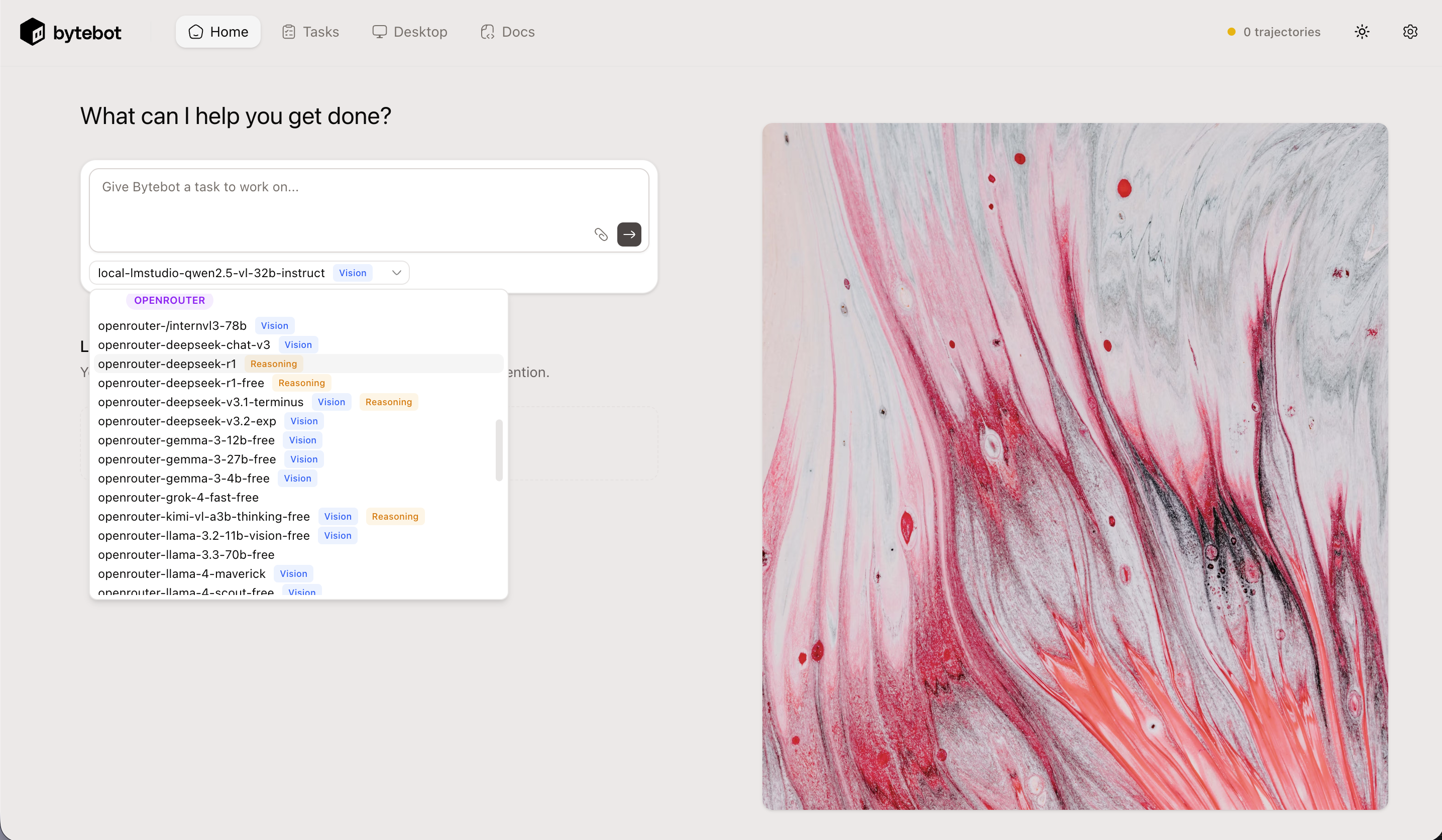

I have it working with LiteLLM's built-in proxy. You'll have to compile the list of vision models from OpenRouter and add them to the LiteLLM config. [bytebot_lite_llm_open_router_option_a_proxy_compose_runbook.md](https://github.com/zhound420/docs/blob/main/bytebot_lite_llm_open_router_option_a_proxy_compose_runbook.md) Your litellm config should...
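As a rough sketch of the "compile the list of vision models" step: the snippet below takes model records shaped like the OpenRouter model listing, keeps the ones that accept image input, and renders LiteLLM `model_list` entries. It assumes each record has an `id` and an `architecture.input_modalities` list, and that you keep your key in an `OPENROUTER_API_KEY` environment variable; the model ids in the usage example are placeholders, not real models. This is not the config from the runbook, just one way to generate something similar.

```python
import json


def is_vision_model(model: dict) -> bool:
    """True if the record lists 'image' among its input modalities (assumed field)."""
    arch = model.get("architecture") or {}
    return "image" in (arch.get("input_modalities") or [])


def to_litellm_entries(models: list) -> list:
    """Map vision-capable records to LiteLLM model_list entries."""
    entries = []
    for m in models:
        if not is_vision_model(m):
            continue
        entries.append({
            "model_name": m["id"],
            "litellm_params": {
                # LiteLLM routes to OpenRouter via the "openrouter/" prefix.
                "model": "openrouter/" + m["id"],
                # LiteLLM reads the key from the environment at startup.
                "api_key": "os.environ/OPENROUTER_API_KEY",
            },
        })
    return entries


def render_yaml(entries: list) -> str:
    """Render entries as YAML text; json.dumps gives safely quoted scalars."""
    lines = ["model_list:"]
    for e in entries:
        params = e["litellm_params"]
        lines.append("  - model_name: " + json.dumps(e["model_name"]))
        lines.append("    litellm_params:")
        lines.append("      model: " + json.dumps(params["model"]))
        lines.append("      api_key: " + json.dumps(params["api_key"]))
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder records; in practice, load the "data" array from the model listing.
    sample = [
        {"id": "example/vision-model", "architecture": {"input_modalities": ["text", "image"]}},
        {"id": "example/text-model", "architecture": {"input_modalities": ["text"]}},
    ]
    print(render_yaml(to_litellm_entries(sample)))
```

The printed text can be pasted into the proxy config, after which each `model_name` is what you would select on the client side.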

> how to add a custom openai compatible endpoint such as venice.ai or w/e

You'll need to read up on litellm:

```
# packages/bytebot-llm-proxy/litellm-config.yaml
model_list:
  # --- Venice.ai example (OpenAI-compatible)...
```

1. DeepSeek R1 does not have vision.
2. Ollama does not support proper function calling. Switch to LMStudio.

I have more providers integrated in one of my forks: [bytebot-hawkeye-op](https://github.com/zhound420/bytebot-hawkeye-op)