bytebot


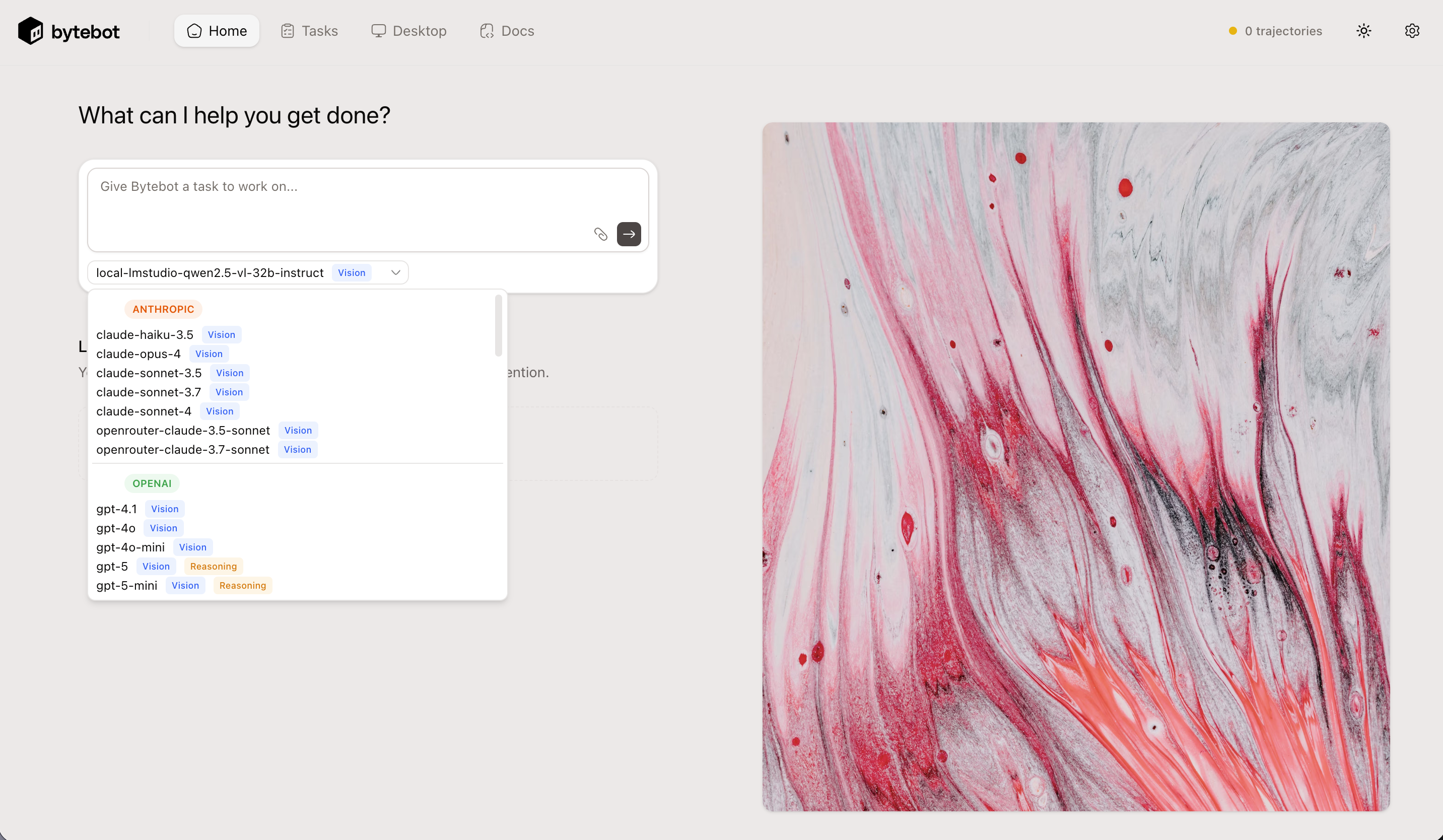

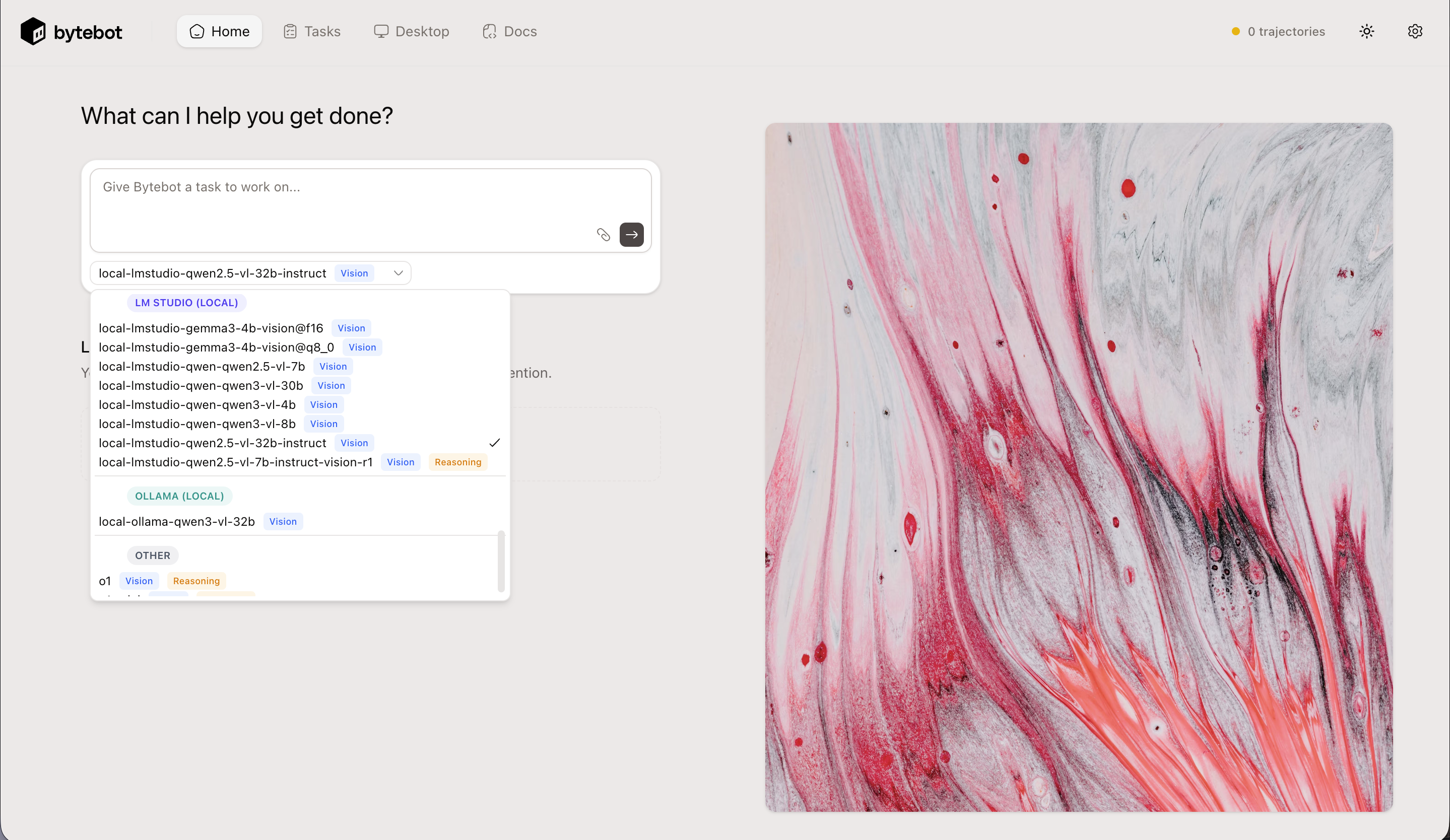

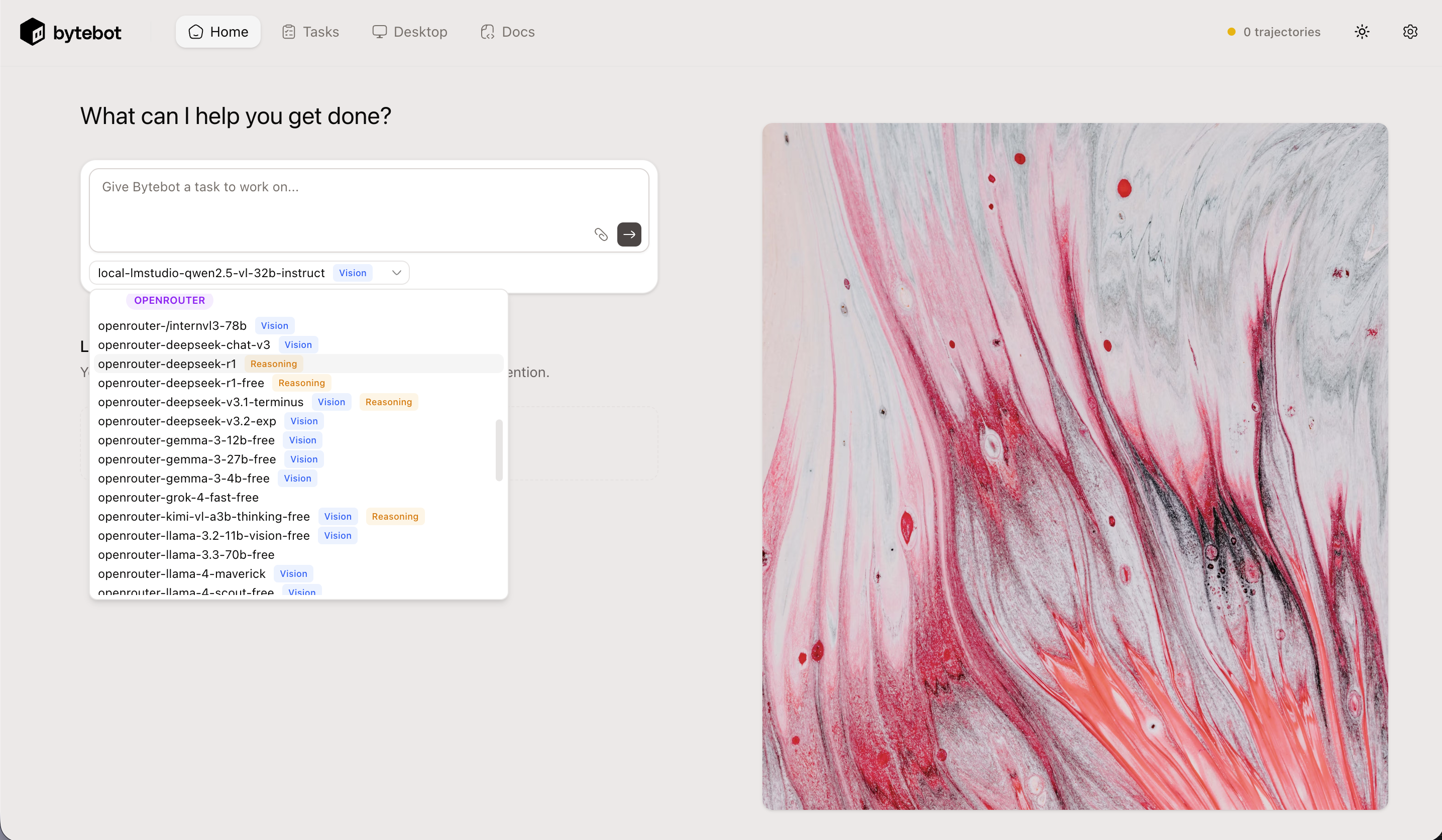

Tried many local AI models; all failed.

I tried deepseek-r1:14b on Ollama, and gpt-oss-20b and others on LM Studio; all of them failed. For deepseek, this is the log:

21:18:47 - LiteLLM Proxy:ERROR: common_request_processing.py:644 - litellm.proxy.proxy_server._handle_llm_api_exception(): Exception occured - litellm.APIConnectionError: list index out of range

Traceback (most recent call last):

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 3254, in completion

response = base_llm_http_handler.completion(

model=model,

...<13 lines>...

client=client,

)

File "/usr/lib/python3.13/site-packages/litellm/llms/custom_httpx/llm_http_handler.py", line 330, in completion

data = provider_config.transform_request(

model=model,

...<3 lines>...

headers=headers,

)

File "/usr/lib/python3.13/site-packages/litellm/llms/ollama/completion/transformation.py", line 358, in transform_request

modified_prompt = ollama_pt(model=model, messages=messages)

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/prompt_templates/factory.py", line 237, in ollama_pt

tool_calls = messages[msg_i].get("tool_calls")

~~~~~~~~^^^^^^^

IndexError: list index out of range

. Received Model Group=ollama/deepseek-r1:14b

Available Model Group Fallbacks=None LiteLLM Retried: 1 times, LiteLLM Max Retries: 2

Traceback (most recent call last):

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 3254, in completion

response = base_llm_http_handler.completion(

model=model,

...<13 lines>...

client=client,

)

File "/usr/lib/python3.13/site-packages/litellm/llms/custom_httpx/llm_http_handler.py", line 330, in completion

data = provider_config.transform_request(

model=model,

...<3 lines>...

headers=headers,

)

File "/usr/lib/python3.13/site-packages/litellm/llms/ollama/completion/transformation.py", line 358, in transform_request

modified_prompt = ollama_pt(model=model, messages=messages)

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/prompt_templates/factory.py", line 237, in ollama_pt

tool_calls = messages[msg_i].get("tool_calls")

~~~~~~~~^^^^^^^

IndexError: list index out of range

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.13/site-packages/litellm/proxy/proxy_server.py", line 4092, in chat_completion

result = await base_llm_response_processor.base_process_llm_request(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

...<16 lines>...

)

^

File "/usr/lib/python3.13/site-packages/litellm/proxy/common_request_processing.py", line 438, in base_process_llm_request

responses = await llm_responses

^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1076, in acompletion

raise e

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1052, in acompletion

response = await self.async_function_with_fallbacks(**kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 3895, in async_function_with_fallbacks

return await self.async_function_with_fallbacks_common_utils(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

...<8 lines>...

)

^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 3853, in async_function_with_fallbacks_common_utils

raise original_exception

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 3887, in async_function_with_fallbacks

response = await self.async_function_with_retries(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4092, in async_function_with_retries

raise original_exception

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 3983, in async_function_with_retries

response = await self.make_call(original_function, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4101, in make_call

response = await response

^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1357, in _acompletion

raise e

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1309, in _acompletion

response = await _response

^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/utils.py", line 1598, in wrapper_async

raise e

File "/usr/lib/python3.13/site-packages/litellm/utils.py", line 1449, in wrapper_async

result = await original_function(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 565, in acompletion

raise exception_type(

...<5 lines>...

)

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 538, in acompletion

init_response = await loop.run_in_executor(None, func_with_context)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/concurrent/futures/thread.py", line 59, in run

result = self.fn(*self.args, **self.kwargs)

File "/usr/lib/python3.13/site-packages/litellm/utils.py", line 1072, in wrapper

result = original_function(*args, **kwargs)

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 3582, in completion

raise exception_type(

~~~~~~~~~~~~~~^

model=model,

^^^^^^^^^^^^

...<3 lines>...

extra_kwargs=kwargs,

^^^^^^^^^^^^^^^^^^^^

)

^

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/exception_mapping_utils.py", line 2301, in exception_type

raise e

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/exception_mapping_utils.py", line 2277, in exception_type

raise APIConnectionError(

...<8 lines>...

)

litellm.exceptions.APIConnectionError: litellm.APIConnectionError: list index out of range

Traceback (most recent call last):

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 3254, in completion

response = base_llm_http_handler.completion(

model=model,

...<13 lines>...

client=client,

)

File "/usr/lib/python3.13/site-packages/litellm/llms/custom_httpx/llm_http_handler.py", line 330, in completion

data = provider_config.transform_request(

model=model,

...<3 lines>...

headers=headers,

)

File "/usr/lib/python3.13/site-packages/litellm/llms/ollama/completion/transformation.py", line 358, in transform_request

modified_prompt = ollama_pt(model=model, messages=messages)

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/prompt_templates/factory.py", line 237, in ollama_pt

tool_calls = messages[msg_i].get("tool_calls")

~~~~~~~~^^^^^^^

IndexError: list index out of range

. Received Model Group=ollama/deepseek-r1:14b

Available Model Group Fallbacks=None LiteLLM Retried: 1 times, LiteLLM Max Retries: 2

INFO: 172.19.0.3:38404 - "POST /chat/completions HTTP/1.1" 500 Internal Server Error

21:18:51 - LiteLLM Proxy:ERROR: common_request_processing.py:644 - litellm.proxy.proxy_server._handle_llm_api_exception(): Exception occured - litellm.APIConnectionError: list index out of range


INFO: 172.19.0.3:56464 - "POST /chat/completions HTTP/1.1" 500 Internal Server Error

This is my YAML config:

litellm_settings:
  drop_params: true
  prompt_template: null
model_list:
  - model_name: gpt-oss-20b
    litellm_params:
      model: openai/gpt-oss-20b
      api_base: http://host.docker.internal:1234
      api_key: ""
  - model_name: deepseek-r1:14b
    litellm_params:
      model: ollama/deepseek-r1:14b
      api_base: http://host.docker.internal:11434
      api_key: "ollama"
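The innermost frame in the deepseek log shows `messages[msg_i]` being read after `msg_i` has already moved past the end of the message list. A common way for a "merge consecutive messages" loop to do this is to advance the index and then read the next element without re-checking the bound. The sketch below is illustrative only: it is not LiteLLM's `ollama_pt` source, both helper names are hypothetical, and "the history ends on an assistant turn" is an assumed trigger. It contrasts the unsafe pattern with a version that reads `tool_calls` before advancing.

```python
from typing import Any


def merge_assistant_turns_unsafe(messages: list[dict[str, Any]], start: int) -> tuple[str, int]:
    """Hypothetical helper: advance first, read second (overflows on a trailing assistant turn)."""
    text = ""
    i = start
    while i < len(messages) and messages[i]["role"] == "assistant":
        text += messages[i].get("content") or ""
        i += 1
        # Bug: i may now equal len(messages), so this read raises IndexError.
        _tool_calls = messages[i].get("tool_calls")
    return text, i


def merge_assistant_turns(messages: list[dict[str, Any]], start: int) -> tuple[str, list[Any], int]:
    """Hypothetical helper: read the current message completely, then advance."""
    text = ""
    tool_calls: list[Any] = []
    i = start
    while i < len(messages) and messages[i]["role"] == "assistant":
        msg = messages[i]
        text += msg.get("content") or ""
        tool_calls.extend(msg.get("tool_calls") or [])
        i += 1  # the while condition re-checks the bound before the next read
    return text, tool_calls, i


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Take a screenshot."},
        {"role": "assistant", "content": "Sure."},
    ]
    try:
        merge_assistant_turns_unsafe(history, 1)
    except IndexError as exc:
        print("unsafe:", exc)  # unsafe: list index out of range
    print("safe:", merge_assistant_turns(history, 1))  # safe: ('Sure.', [], 2)
```

The only difference between the two loops is the order of "read" and "advance"; the safe version never indexes the list without the `while` condition having checked the bound first.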

- DeepSeek R1 does not have vision.

- Ollama does not support proper function calling. Switch to LM Studio.
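One way to check function-calling support for a given backend outside bytebot is to send the LiteLLM proxy a request that includes a `tools` array and see whether the reply's `choices[0].message.tool_calls` is populated. This is a minimal standard-library sketch; the proxy address `http://localhost:4000` (LiteLLM's default port), the placeholder key, the `get_time` tool, and both helper names are assumptions for illustration. A reply without tool calls (or an error) suggests, but does not prove, that the model/backend combination cannot do tool calling.

```python
import json
import urllib.request
from typing import Any

PROXY_URL = "http://localhost:4000/chat/completions"  # assumed proxy address


def build_tool_probe(model: str, url: str = PROXY_URL, api_key: str = "sk-none") -> urllib.request.Request:
    """Build a chat-completions request that offers the model one hypothetical tool."""
    payload = {
        "model": model,  # must match a model_name from the proxy's model_list
        "messages": [{"role": "user", "content": "What time is it? Use the tool."}],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_time",  # hypothetical tool, never executed here
                    "description": "Return the current time.",
                    "parameters": {"type": "object", "properties": {}},
                },
            }
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def returned_tool_calls(response: dict[str, Any]) -> bool:
    """True if the first choice in a parsed response carries at least one tool call."""
    choices = response.get("choices") or []
    if not choices:
        return False
    message = choices[0].get("message") or {}
    return bool(message.get("tool_calls"))


if __name__ == "__main__":
    req = build_tool_probe("deepseek-r1:14b")
    # Uncomment to send it to a running proxy:
    # with urllib.request.urlopen(req, timeout=120) as resp:
    #     print(returned_tool_calls(json.load(resp)))
    print(req.get_method(), req.full_url)
```

Running the same probe against each `model_name` in the config isolates the backend from bytebot itself.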

I had the same issue, and Claude told me the AI model failed.

I used an LLM with vision, but can't seem to run it either.

Me too. I'm using gpt-oss-20b with llama.cpp (llama-server); it seems llama-server has a stricter parser.

LiteLLM error message:

06:32:45 - LiteLLM Proxy:ERROR: common_request_processing.py:699 - litellm.proxy.proxy_server._handle_llm_api_exception(): Exception occured - litellm.InternalServerError: InternalServerError: OpenAIException - Assistant message must contain either 'content' or 'tool_calls'!. Received Model Group=openai/gpt-oss-20b

Available Model Group Fallbacks=None LiteLLM Retried: 1 times, LiteLLM Max Retries: 2

Traceback (most recent call last):

File "/usr/lib/python3.13/site-packages/litellm/llms/openai/openai.py", line 823, in acompletion

headers, response = await self.make_openai_chat_completion_request(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

...<4 lines>...

)

^

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/logging_utils.py", line 190, in async_wrapper

result = await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/llms/openai/openai.py", line 454, in make_openai_chat_completion_request

raise e

File "/usr/lib/python3.13/site-packages/litellm/llms/openai/openai.py", line 436, in make_openai_chat_completion_request

await openai_aclient.chat.completions.with_raw_response.create(

**data, timeout=timeout

)

File "/usr/lib/python3.13/site-packages/openai/_legacy_response.py", line 381, in wrapped

return cast(LegacyAPIResponse[R], await func(*args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/openai/resources/chat/completions/completions.py", line 2589, in create

return await self._post(

^^^^^^^^^^^^^^^^^

...<48 lines>...

)

^

File "/usr/lib/python3.13/site-packages/openai/_base_client.py", line 1794, in post

return await self.request(cast_to, opts, stream=stream, stream_cls=stream_cls)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/openai/_base_client.py", line 1594, in request

raise self._make_status_error_from_response(err.response) from None

openai.InternalServerError: Error code: 500 - {'error': {'code': 500, 'message': "Assistant message must contain either 'content' or 'tool_calls'!", 'type': 'server_error'}}

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 595, in acompletion

response = await init_response

^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/llms/openai/openai.py", line 870, in acompletion

raise OpenAIError(

...<4 lines>...

)

litellm.llms.openai.common_utils.OpenAIError: Error code: 500 - {'error': {'code': 500, 'message': "Assistant message must contain either 'content' or 'tool_calls'!", 'type': 'server_error'}}

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.13/site-packages/litellm/proxy/proxy_server.py", line 4531, in chat_completion

result = await base_llm_response_processor.base_process_llm_request(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

...<16 lines>...

)

^

INFO: 172.18.0.2:57990 - "POST /chat/completions HTTP/1.1" 500 Internal Server Error

File "/usr/lib/python3.13/site-packages/litellm/proxy/common_request_processing.py", line 494, in base_process_llm_request

responses = await llm_responses

^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1141, in acompletion

raise e

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1117, in acompletion

response = await self.async_function_with_fallbacks(**kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4069, in async_function_with_fallbacks

return await self.async_function_with_fallbacks_common_utils(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

...<8 lines>...

)

^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4027, in async_function_with_fallbacks_common_utils

raise original_exception

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4061, in async_function_with_fallbacks

response = await self.async_function_with_retries(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4266, in async_function_with_retries

raise original_exception

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4157, in async_function_with_retries

response = await self.make_call(original_function, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 4277, in make_call

response = await response

^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1420, in _acompletion

raise e

File "/usr/lib/python3.13/site-packages/litellm/router.py", line 1372, in _acompletion

response = await _response

^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/utils.py", line 1632, in wrapper_async

raise e

File "/usr/lib/python3.13/site-packages/litellm/utils.py", line 1478, in wrapper_async

result = await original_function(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.13/site-packages/litellm/main.py", line 614, in acompletion

raise exception_type(

~~~~~~~~~~~~~~^

model=model,

^^^^^^^^^^^^

...<3 lines>...

extra_kwargs=kwargs,

^^^^^^^^^^^^^^^^^^^^

)

^

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/exception_mapping_utils.py", line 2273, in exception_type

raise e

File "/usr/lib/python3.13/site-packages/litellm/litellm_core_utils/exception_mapping_utils.py", line 502, in exception_type

raise InternalServerError(

...<5 lines>...

)

litellm.exceptions.InternalServerError: litellm.InternalServerError: InternalServerError: OpenAIException - Assistant message must contain either 'content' or 'tool_calls'!. Received Model Group=openai/gpt-oss-20b

My YAML config:

model_list:
  # Anthropic Models
  - model_name: claude-opus-4
    litellm_params:
      model: anthropic/claude-opus-4-20250514
      api_key: os.environ/ANTHROPIC_API_KEY
  - model_name: claude-sonnet-4
    litellm_params:
      model: anthropic/claude-sonnet-4-20250514
      api_key: os.environ/ANTHROPIC_API_KEY
  # OpenAI Models
  - model_name: gpt-4.1
    litellm_params:
      model: openai/gpt-4.1
      api_key: os.environ/OPENAI_API_KEY
  - model_name: gpt-4o
    litellm_params:
      model: openai/gpt-4o
      api_key: os.environ/OPENAI_API_KEY
  # Gemini Models
  - model_name: gemini-2.5-pro
    litellm_params:
      model: gemini/gemini-2.5-pro
      api_key: os.environ/GEMINI_API_KEY
  - model_name: gemini-2.5-flash
    litellm_params:
      model: gemini/gemini-2.5-flash
      api_key: os.environ/GEMINI_API_KEY
  # Local Models
  - model_name: gpt-oss-20b
    litellm_params:
      model: openai/gpt-oss-20b
      api_key: sk-none
      api_base: http://ai-host.lan:8000/v1
litellm_settings:
  drop_params: true
  prompt_template: null
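The llama-server error says every assistant message in the history must carry either `content` or `tool_calls`. Assuming the rejected history contains assistant turns whose `content` is missing, null, or blank and which have no `tool_calls`, one client-side workaround is to patch those turns before the request is forwarded. This is a hypothetical sketch: `sanitize_assistant_messages` and the placeholder text are not part of LiteLLM or bytebot, whether llama-server accepts an empty string is not assumed either way (blank strings are patched too), and wiring it into the proxy (for example through a pre-call hook) is left out. It fills a placeholder instead of dropping the turn so the message order is preserved.

```python
import copy
from typing import Any

PLACEHOLDER = "(no content)"  # hypothetical filler text


def _is_empty(content: Any) -> bool:
    """Treat None, blank strings and empty content-part lists as missing."""
    if content is None:
        return True
    if isinstance(content, str):
        return not content.strip()
    if isinstance(content, list):
        return len(content) == 0
    return False


def sanitize_assistant_messages(
    messages: list[dict[str, Any]], placeholder: str = PLACEHOLDER
) -> list[dict[str, Any]]:
    """Return a copy in which every assistant turn has content or tool_calls."""
    cleaned = copy.deepcopy(messages)  # leave the caller's history untouched
    for msg in cleaned:
        if msg.get("role") != "assistant":
            continue
        if msg.get("tool_calls"):
            continue  # tool_calls alone satisfies the server's check
        if _is_empty(msg.get("content")):
            msg["content"] = placeholder
    return cleaned


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Open the browser."},
        {"role": "assistant", "content": None},
        {"role": "assistant", "content": None, "tool_calls": [{"id": "call_1"}]},
    ]
    for m in sanitize_assistant_messages(history):
        print(m)
```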