Ensheng Shi (石恩升)

I think you are right. When I run ./data_utils.py, the error is: FileNotFoundError: [Errno 2] No such file or directory: '/data/enshi_data/Chinese-Poetry-Generation/data/sxhy_dict.txt'. When I make a file named data in the...

+1. I get the same error when running on Ubuntu: ```RuntimeError: probability tensor contains either `inf`, `nan` or element < 0```

Fixed by reinstalling bitsandbytes:

```
pip uninstall bitsandbytes
pip install bitsandbytes
```

See https://github.com/TimDettmers/bitsandbytes/issues/134. Another issue: ```RuntimeError: probability tensor contains either `inf`, `nan` or element < 0```
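For anyone hitting the same `RuntimeError`: the message names three conditions, so it can help to check which one actually applies before reinstalling anything. Below is a minimal, stdlib-only sketch of that check. `find_invalid_probs` is a hypothetical helper (not part of PyTorch or bitsandbytes), and it assumes the probabilities have already been converted to a plain list of floats.

```python
import math


def find_invalid_probs(probs):
    """Return (index, value, reason) for each entry that is NaN, infinite, or negative.

    Hypothetical helper for illustration; `probs` is a plain list of floats.
    """
    problems = []
    for i, p in enumerate(probs):
        if math.isnan(p):
            problems.append((i, p, "nan"))
        elif math.isinf(p):
            problems.append((i, p, "inf"))
        elif p < 0:
            problems.append((i, p, "negative"))
    return problems


if __name__ == "__main__":
    sample = [0.5, float("nan"), float("inf"), -0.1, 0.2]
    for index, value, reason in find_invalid_probs(sample):
        print(f"index {index}: {value} ({reason})")
```

An empty result means the values themselves look valid, which would point away from the sampling input as the cause.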

@tloen Hi Eric J. Do you have any considerations or motivations for adding the above code snippets?

> It's interesting, my alpaca run produced a 36mb file, and had really good results. Then, when I merged it and tried to finetune my own custom dataset, the model...

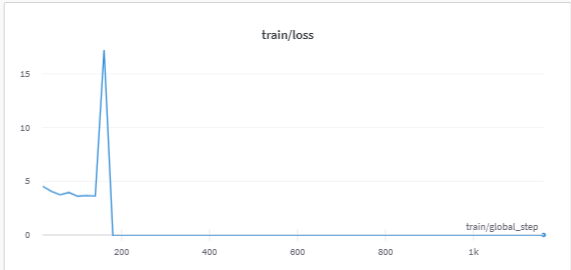

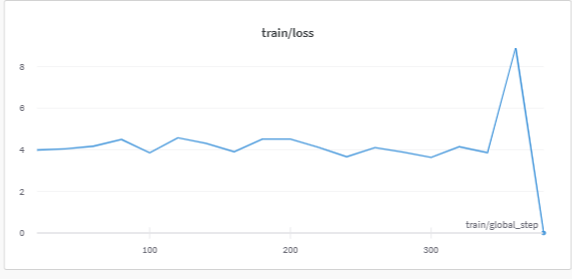

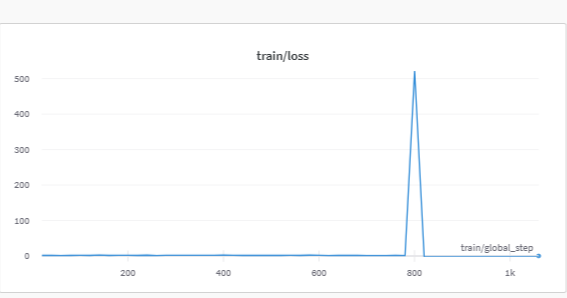

Update on the training loss: I fine-tuned llama-7b with LoRA on alpaca_data.json three times. The training losses are shown in the attached images.

> Trying to remove the script causes CUDA crashes on my end during training

Do you mean comment out or remove the following code? ``` old_state_dict = model.state_dict model.state_dict = ( lambda self,...

Thanks! I also saw some issues (https://github.com/tloen/alpaca-lora/issues/288, https://github.com/tloen/alpaca-lora/issues/170) discussing whether the GPU type affects the training process. The root cause may be the peft update rather than the GPU type. I think...

Thanks for sharing! I think one quick solution is to install specific versions of peft and transformers. For example:

````
pip install git+https://github.com/huggingface/peft.git@xxxxxxx
pip install git+https://github.com/huggingface/transformers.git@xxxx
````

We...
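To compare environments before pinning, it may help to record which versions are currently installed. This is a minimal sketch using only the standard library; `installed_versions` is a hypothetical helper name, and the package list is just the three libraries mentioned in this thread. Note that for packages installed from git, the reported version string usually does not include the commit hash, so it only narrows things down.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is not installed."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    # Package names are the ones discussed above; adjust as needed.
    for name, ver in installed_versions(["peft", "transformers", "bitsandbytes"]).items():
        print(f"{name}=={ver}" if ver else f"{name}: not installed")
```

Posting this output alongside a loss curve makes it easier to tell whether two runs differ in library versions or only in hardware.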

I commented out the following code and fine-tuned llama-7b with LoRA on alpaca_data.json on 8 V100 GPUs. The training loss still became 0 at iteration 560. Is it a problem with multi-card...
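To compare runs, one option is to locate the first logged step where the loss collapses. The sketch below assumes the losses are available as a plain list of floats (one per logged step); `first_collapse_step` and the `eps` threshold are hypothetical choices for illustration, not something from the alpaca-lora training script.

```python
import math


def first_collapse_step(losses, eps=1e-8):
    """Return the index of the first loss that is NaN or within eps of zero, else None."""
    for step, loss in enumerate(losses):
        if math.isnan(loss) or abs(loss) <= eps:
            return step
    return None


if __name__ == "__main__":
    # Made-up values for illustration only, not measurements from any run.
    example = [1.31, 1.12, 0.98, 0.0, 0.0]
    print(first_collapse_step(example))
```

If the index returned is the same across GPU setups, that would suggest the cause is not specific to multi-card training.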