lostnighter


The code hasn't been released yet; waiting for it.

@ahmed-retrace I also ran into GPU memory problems during training on a GPU with 12 GB of memory. This is due to the large number of network parameters and the heavy cross-attention (crossattn) computation. When I change num_res_blocks from...

@XavierXiao It is possible if you set accumulate_grad_batches to a larger value.
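Gradient accumulation trades time for memory: with accumulate_grad_batches = k, the gradients of k smaller micro-batches are averaged before a single optimizer step, so the effective batch size is k × micro-batch size while peak GPU memory is governed by the micro-batch. (If the training script is built on PyTorch Lightning, which is an assumption here, `accumulate_grad_batches` is a `Trainer` argument.) The minimal pure-Python sketch below uses a hypothetical toy linear model, not the diffusion model or any framework API, and checks that averaging the gradients of equal-sized micro-batches reproduces the full-batch gradient:

```python
# Hypothetical toy model y = w * x with mean-squared-error loss; no framework involved.
def grad_mse(w, xs, ys):
    """Gradient d/dw of mean((w*x - y)^2) over the given samples."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w, xs, ys, micro_batch, accumulate_steps):
    """Average gradients over `accumulate_steps` equal-sized micro-batches."""
    total = 0.0
    for step in range(accumulate_steps):
        lo = step * micro_batch
        hi = lo + micro_batch
        # Dividing each micro-batch gradient by accumulate_steps mirrors the
        # usual `loss / accumulate_steps` scaling in accumulation loops.
        total += grad_mse(w, xs[lo:hi], ys[lo:hi]) / accumulate_steps
    return total


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1]
w = 0.5

full = grad_mse(w, xs, ys)  # one batch of 8 samples
accum = accumulated_grad(w, xs, ys, micro_batch=2, accumulate_steps=4)  # 4 x 2 samples
print(abs(full - accum) < 1e-9)
```

The equality holds only because every micro-batch has the same size; layers that depend on batch statistics (e.g. batch normalization) are not reproduced exactly by accumulation.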

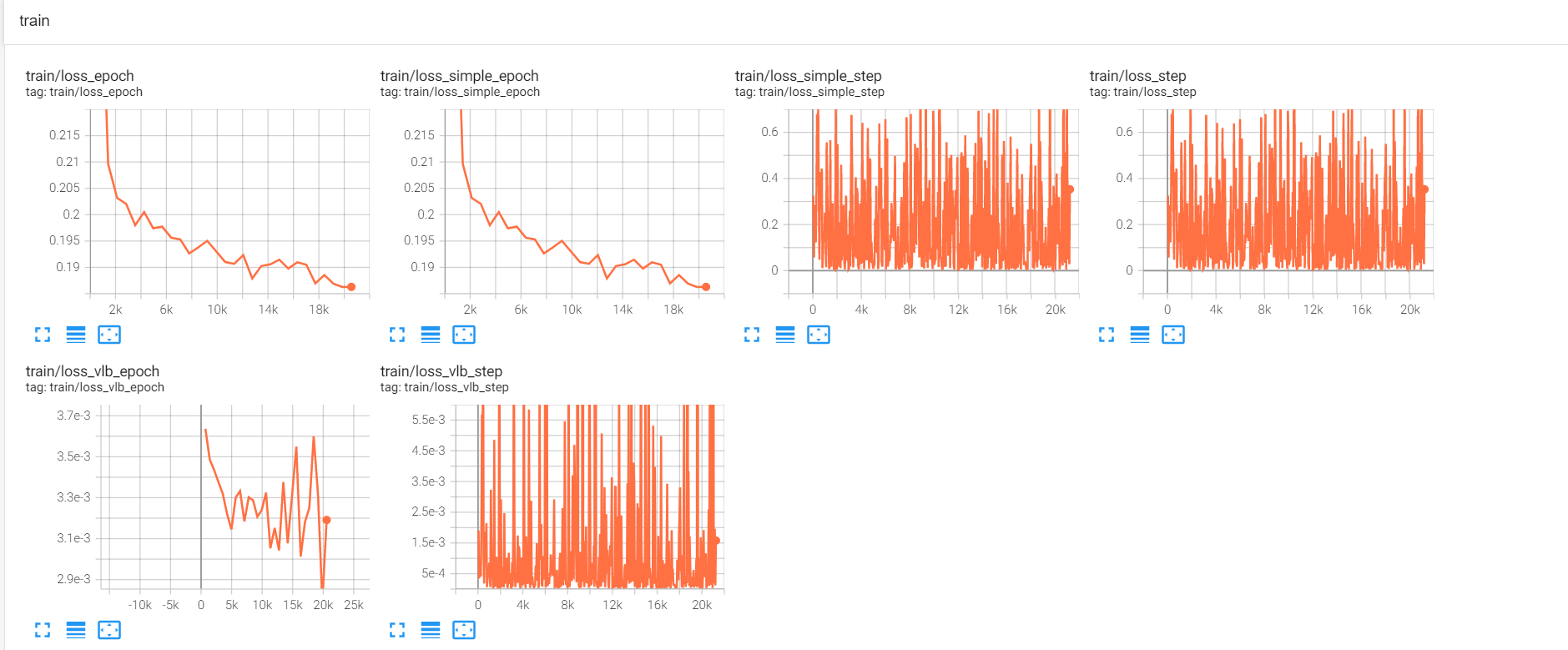

@ahmed-retrace I used a training config similar to yours, and set --scale_lr False to avoid unstable training. However, the per-step training loss still seems unstable and the generated images...

@zhangqizky @Ir1d Do you know why training works on one GPU but not on multiple GPUs? How can I get multi-GPU training to work?

@Ir1d When I used multiple GPUs, I set --scale_lr False so that the total learning rate stays the same as with one GPU. However, training on multiple GPUs gives bad results. When I...
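To make the effect of --scale_lr concrete, here is a hypothetical sketch assuming the script applies a linear-scaling rule of the form lr = accumulate_grad_batches × num_gpus × batch_size × base_lr when scaling is on, and uses base_lr unchanged when it is off. The helper names and the exact formula are assumptions for illustration, not the script's actual code:

```python
def effective_lr(base_lr, batch_size, num_gpus, accumulate_grad_batches, scale_lr=True):
    """Learning rate under an assumed linear-scaling rule (hypothetical helper)."""
    if scale_lr:
        # lr grows in proportion to the number of samples per optimizer step.
        return accumulate_grad_batches * num_gpus * batch_size * base_lr
    # With scaling off, the lr is independent of the GPU count.
    return base_lr


def effective_batch(batch_size, num_gpus, accumulate_grad_batches):
    """Samples averaged into one optimizer step under data-parallel training."""
    return accumulate_grad_batches * num_gpus * batch_size


def steps_per_epoch(dataset_size, batch_size, num_gpus, accumulate_grad_batches):
    """Optimizer steps per epoch (ignoring a final partial step)."""
    return dataset_size // effective_batch(batch_size, num_gpus, accumulate_grad_batches)


base_lr = 1e-6
for gpus in (1, 4):
    print(
        gpus,
        effective_lr(base_lr, 4, gpus, 1, scale_lr=True),
        effective_lr(base_lr, 4, gpus, 1, scale_lr=False),
        steps_per_epoch(10_000, 4, gpus, 1),
    )
```

With scale_lr=False, N GPUs keep the one-GPU learning rate, but each optimizer step averages N times as many samples, so an epoch contains N times fewer steps. Comparing a multi-GPU run with a single-GPU run at the same epoch is therefore not a like-for-like comparison; this is one thing worth checking, not a confirmed cause of the bad results.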

Hi jontooy, I downloaded this file with azcopy as follows: `path/to/azcopy copy https://biglmdiag.blob.core.windows.net/vinvl/pretrain_corpus/coco_flickr30k_googlecc_gqa_sbu_oi.lineidx ./ --recursive`. This URL is not given anywhere; I just tried it out.