lonelydancer

@Taka152 I tried, but it doesn't work. My model is a generation model that I trained myself.

> Sampling doesn't have multiple outputs. You can feed a batch of the same inputs to get multiple outputs. For the wrong results, you can compile with debug info...
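As I understand the suggestion, it amounts to repeating one tokenized input along the batch dimension so that each row is sampled independently. A minimal sketch with NumPy; `repeat_input` and the token ids are hypothetical and not part of the lightseq API:

```python
import numpy as np


def repeat_input(input_ids, num_samples):
    """Stack one tokenized input num_samples times.

    Hypothetical helper, not a lightseq function. Returns an int32 array of
    shape (num_samples, seq_len) that can be fed as a batch to a sampling
    decoder, so every row yields its own sample.
    """
    ids = np.asarray(input_ids, dtype=np.int32)
    if ids.ndim != 1:
        raise ValueError("expected a single 1-D sequence of token ids")
    return np.tile(ids, (num_samples, 1))


# Made-up token ids, for illustration only.
batch = repeat_input([101, 2769, 4263, 102], num_samples=4)
print(batch.shape)
```

Each row of `batch` is identical, so any difference between the returned sequences comes from sampling alone.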

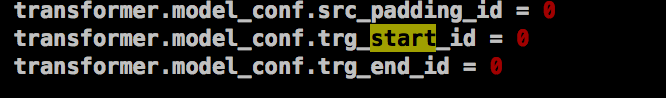

[vocab.txt](https://github.com/bytedance/lightseq/files/7862517/vocab.txt) My vocab is attached above. Even with that setting, the result is still wrong: 噢 噢 噢 , 昨 天 刚 刚 刚 刚 刚 忙 叨 忙 啦 唉...

> For your own model, I suggest compiling with debug info and checking each layer's output. If you can reproduce the wrong result on an open model, I can find...
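One way to act on this, once the per-layer outputs have been dumped from both the original model and the exported one, is to walk them in order and report the first layer that disagrees. A rough sketch, assuming the outputs are already available as name-to-array dicts; how they get dumped depends on the debug build and is not shown, and `first_divergent_layer` is a hypothetical helper:

```python
import numpy as np


def first_divergent_layer(reference, candidate, atol=1e-3):
    """Return the name of the first layer whose outputs differ, or None.

    reference / candidate: dicts mapping layer name -> array, with the
    reference dict in forward order (dicts keep insertion order).
    A missing layer, a shape mismatch, or any NaN counts as a divergence.
    """
    for name, ref in reference.items():
        out = candidate.get(name)
        if out is None:
            return name
        ref = np.asarray(ref, dtype=np.float64)
        out = np.asarray(out, dtype=np.float64)
        if ref.shape != out.shape or not np.allclose(ref, out, atol=atol):
            return name
    return None


# Toy data: the second layer is off by 0.5.
ref = {"embedding": np.ones((2, 3)), "layer_0": np.ones((2, 3))}
cand = {"embedding": np.ones((2, 3)), "layer_0": np.ones((2, 3)) + 0.5}
print(first_divergent_layer(ref, cand))
```

The tolerance `atol=1e-3` is an arbitrary choice; fp16 inference would likely need a looser value.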

Wouldn't this make training and inference inconsistent? Why not make them consistent?

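One more naive question: the batch_size in the paper is 524,288. Can it really be set that large? It isn't the token-count notion of batch size used in ERNIE, is it?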

On the plato-2 branch, can I use infer.py when running 2.1 and 2.2? I configured two models, but I couldn't find the place in the code that loads both of them.

    vocab_path="./package/dialog_cn/med.vocab"
    spm_model_file="./package/dialog_cn/med.model"
    infer_file="./data/valid_filelist"
    data_format="raw"
    file_format="filelist"
    config_path="./package/dialog_cn/plato/24L.json"
    output_name="response"
    save_path="output"
    model=Plato
    task=DialogGeneration
    do_generation=true
    is_distributed=false
    save_steps=10000
    #is_cn=true
    init_params=output/step_160000
    nsp_inference_model_path=output_nsp/step_220000
    #nsp_init_params=output_nsp/step_220000
    infer_args="--ranking_score nsp_score --mem_efficient true --do_lower_case true"
    #ranking_score=nsp_score
    #num_samples=20
    #topk=5

@sserdoubleh I understand this config, but I don't see the corresponding code in infer.py. It looks like infer can only load one model.

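> https://github.com/PaddlePaddle/Knover/blob/plato-2/plato-2/scripts/24L_plato_interact.sh
> Take a look at this one. I'm not sure which run script you used on the plato-2 branch.
> The conf file is only a helper; for the actual invocation, check the corresponding shell script.
>
> If you are using scripts/local/infer.sh (which in turn calls scripts/distributed/infer.sh), try putting nsp_inference_model_path into infer_args, e.g. infer_args="XXXX --nsp_inference_model_path output_nsp/step_220000"
> The reason is that nsp_inference_model_path is not predefined in https://github.com/PaddlePaddle/Knover/blob/plato-2/scripts/distributed/infer.sh, so it has to go into infer_args, which is where extra arguments are specified.
>
> infer.py only defines one main model, which runs inference for the given task. Task-specific auxiliary models (such as the re-ranking model for the dialogue generation task) are defined inside the Task; see https://github.com/PaddlePaddle/Knover/blob/plato-2/tasks/dialog_generation.py

I used a script similar to plato-2/scripts/24L_plato_infer.sh, but I found that the output of nsp_predictor is NaN.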
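To narrow down where the NaN comes from, one option is to scan the tensors involved (the loaded NSP parameters, or the predictor's fetched outputs) for non-finite values. A small sketch, assuming they have already been converted to NumPy arrays; `find_nonfinite` and the names in the toy dict are hypothetical and not part of Knover:

```python
import numpy as np


def find_nonfinite(named_arrays):
    """Return the names of all arrays that contain NaN or +/-inf.

    named_arrays: dict mapping a name (parameter or output) -> array-like.
    Hypothetical helper; it only inspects values that were already fetched.
    """
    bad = []
    for name, value in named_arrays.items():
        arr = np.asarray(value, dtype=np.float64)
        if not np.isfinite(arr).all():
            bad.append(name)
    return bad


# Toy data standing in for fetched outputs.
outputs = {
    "nsp_probs": np.array([[np.nan, np.nan]]),
    "token_ids": np.array([[1, 5, 9]]),
}
print(find_nonfinite(outputs))
```

If the parameters themselves already contain NaN, the problem is in the checkpoint (that is, in training) rather than in the inference script.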

Sure enough, it was already NaN during training..

```
#!/bin/bash
set -eux

export CUDA_VISIBLE_DEVICES=0

# change to Knover working directory
SCRIPT=`realpath "$0"`
KNOVER_DIR=`dirname ${SCRIPT}`/../..
cd $KNOVER_DIR

model_size=24L

export vocab_path=./package/dialog_cn/med.vocab
export spm_model_file=./package/dialog_cn/med.model
export config_path=./package/dialog_cn/plato/${model_size}.json

nsp_init_params=./${model_size}/NSP
nsp_init_params=output_nsp/step_250000/
if [...
```