BART top-k sampling result is wrong

I'm using a BART model for text generation. I modified:

https://github.com/lonelydancer/algorithm/blob/master/ls_bart2.py

https://github.com/lonelydancer/algorithm/blob/master/hf_bart_export.py

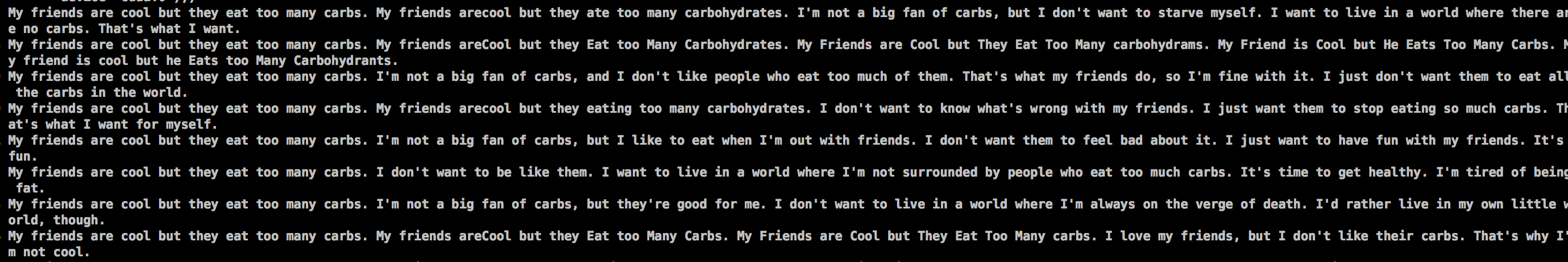

The generated text is wrong.

@Taka152 I tried it, but it doesn't work. My model is a generation model that I trained myself.

Sampling doesn't have multiple outputs. You can feed a batch of the same inputs to get multiple outputs. For the wrong results, you can compile with debug info to find out. The example huggingface model works fine with topk sampling.
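
The batching trick described above can be sketched as follows. This is a minimal illustration, assuming the input is a single 1-D sequence of token ids; `repeat_input` is a hypothetical helper, the ids are placeholders, and the actual inference call is left out on purpose.

```python
import numpy as np


def repeat_input(input_ids, num_samples):
    """Stack the same token-id sequence num_samples times into one batch."""
    ids = np.asarray(input_ids, dtype=np.int32)
    if ids.ndim != 1:
        raise ValueError("expected a single 1-D sequence of token ids")
    # Shape (num_samples, seq_len): each row is an identical copy of the input.
    return np.tile(ids, (num_samples, 1))


# Placeholder token ids, not taken from any real vocabulary.
batch = repeat_input([101, 2769, 3221, 102], num_samples=4)
print(batch.shape)  # (4, 4)
```

Because sampling draws tokens at random, the identical rows of such a batch can decode to different outputs.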

- I can generate multiple outputs using the method you mentioned.

- I changed the task to a generation model, not the mask-filling model in the demo.

For your own model, I suggest compiling with debug info and checking each layer's output. If you can reproduce the wrong result on an open model, I can find out if it is a bug.
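
One way to do the layer-by-layer check is sketched below. It assumes the per-layer outputs from both implementations have already been collected as arrays (on the Hugging Face side, calling the model with `output_hidden_states=True` returns them; how the debug build exposes its outputs is not shown here). `first_divergent_layer` and the tolerance are hypothetical choices, and the arrays are synthetic.

```python
import numpy as np


def first_divergent_layer(reference, candidate, atol=1e-3):
    """Return (index, max_abs_diff) of the first layer pair that differs, or None."""
    for i, (ref, cand) in enumerate(zip(reference, candidate)):
        ref = np.asarray(ref, dtype=np.float32)
        cand = np.asarray(cand, dtype=np.float32)
        if ref.shape != cand.shape:
            # A shape mismatch counts as an unbounded difference.
            return i, float("inf")
        diff = float(np.max(np.abs(ref - cand)))
        if diff > atol:
            return i, diff
    return None


# Synthetic stand-ins for per-layer outputs of the two implementations.
ref_layers = [np.zeros((2, 3)), np.ones((2, 3))]
cand_layers = [np.zeros((2, 3)), np.ones((2, 3)) + 0.5]
result = first_divergent_layer(ref_layers, cand_layers)
print(result)
```

The first layer reported is where to start looking; with fp16 inference a looser tolerance is probably needed.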

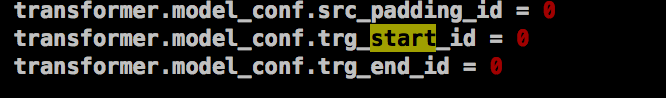

I think it may be related to the tokenizer's bos and eos config; you can check whether they are passed to model_config correctly.
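
A small sketch of that check, assuming you have copied the special-token ids from the tokenizer (Hugging Face tokenizers expose `bos_token_id`, `eos_token_id` and `pad_token_id`) and from the exported config into two plain dicts. `find_id_mismatches` and the dict keys are hypothetical names, and the values are illustrative only.

```python
def find_id_mismatches(tokenizer_ids, exported_ids):
    """Return {name: (tokenizer_value, exported_value)} for every differing id."""
    mismatches = {}
    for name, expected in tokenizer_ids.items():
        actual = exported_ids.get(name)
        if actual != expected:
            mismatches[name] = (expected, actual)
    return mismatches


# Illustrative values only; read the real ones from your tokenizer and export script.
tokenizer_ids = {"bos": 0, "eos": 2, "pad": 1}
exported_ids = {"bos": 0, "eos": 2, "pad": 0}
mismatches = find_id_mismatches(tokenizer_ids, exported_ids)
print(mismatches)
```

An empty result means the ids agree; any entry names a field to fix in the export script.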

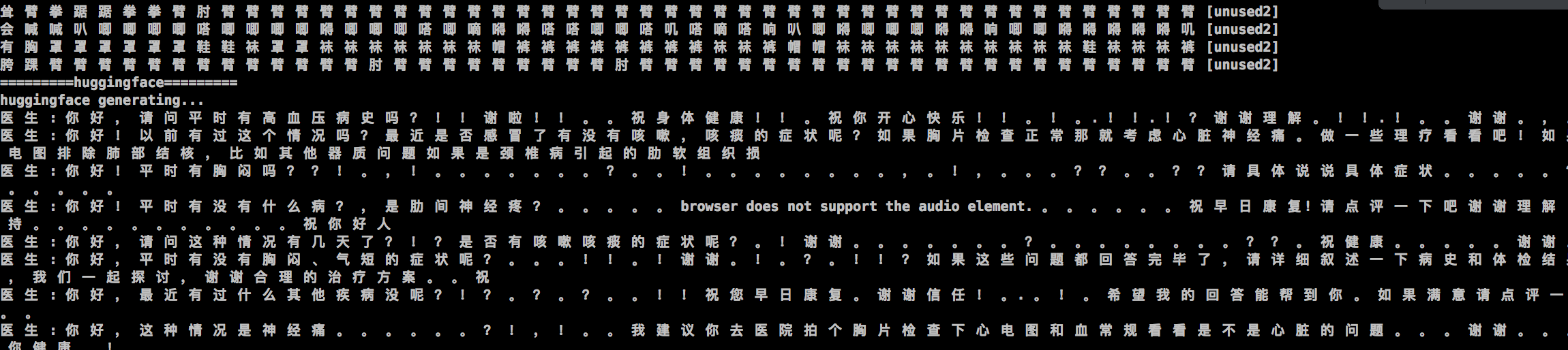

vocab.txt

My vocab is above, and I set

The result is still wrong.

噢 噢 噢 , 昨 天 刚 刚 刚 刚 刚 忙 叨 忙 啦 唉 ! 唉 唉 呀 唉 唉 唉 啊 呀 呀 呀 哇 啊 哎 呀 啊 啦 哒 哎 呀 呀 呗 哟

I don't understand the meaning of tgt_start_id and tgt_end_id; I think I have only one vocabulary for the decoder. Is it OK to use a BertTokenizer?
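
For reference, in a BERT-style vocab.txt the id of a token is its zero-based line number, and BertTokenizer defines `[CLS]` and `[SEP]` rather than bos/eos tokens by default. The sketch below looks those ids up from the vocab lines. Whether the decoder really starts with `[CLS]` and ends with `[SEP]` depends on how the model was trained, so treat that mapping as an assumption; `special_token_ids` is a hypothetical helper and the vocabulary is made up.

```python
def special_token_ids(vocab_lines, tokens=("[PAD]", "[CLS]", "[SEP]")):
    """Map each special token to its line index in a BERT-style vocab file."""
    index = {line.rstrip("\n"): i for i, line in enumerate(vocab_lines)}
    # Tokens missing from the vocabulary map to None.
    return {tok: index.get(tok) for tok in tokens}


# Tiny made-up vocabulary; with a real file use: open("vocab.txt", encoding="utf-8")
sample_vocab = ["[PAD]\n", "[UNK]\n", "[CLS]\n", "[SEP]\n", "噢\n", "呀\n"]
ids = special_token_ids(sample_vocab)
print(ids)
```

The resulting ids are the candidates for the start, end and padding ids in the exported config.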

I used the open model facebook/bart-large-cnn. I think there is a bug in the export scripts?

The code is here:

https://github.com/lonelydancer/algorithm/blob/master/hf_bart_export_en_summary.py

https://github.com/lonelydancer/algorithm/blob/master/ls_bart_summary.py

https://github.com/lonelydancer/algorithm/blob/master/test_bart.py

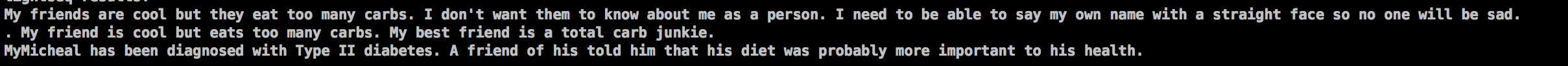

huggingface

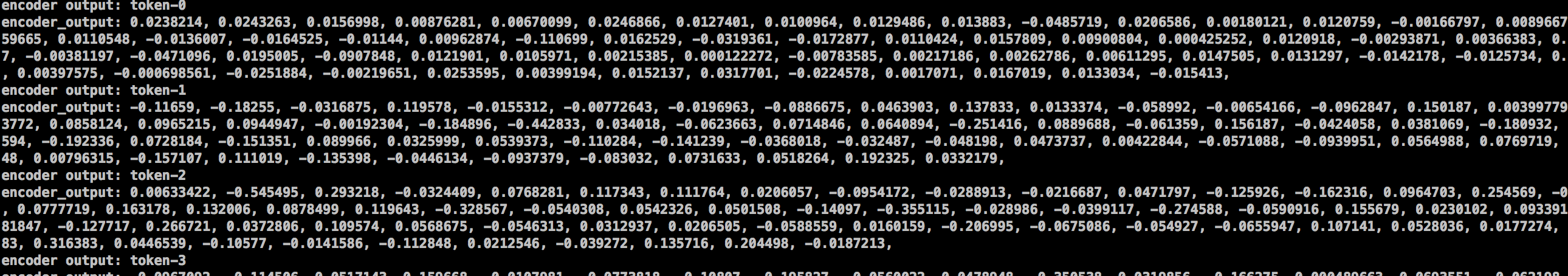

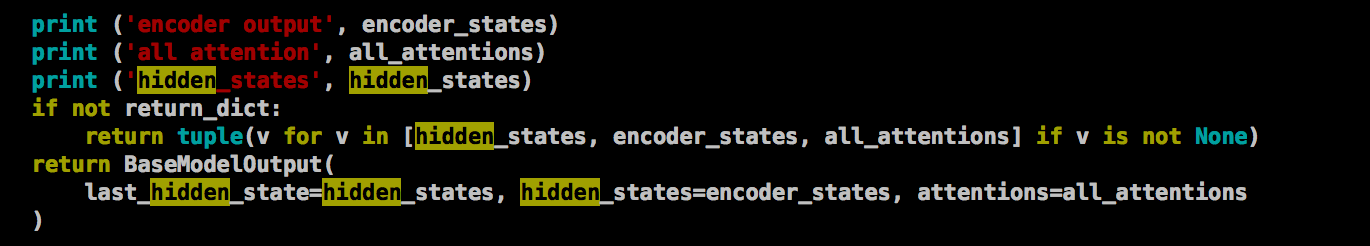

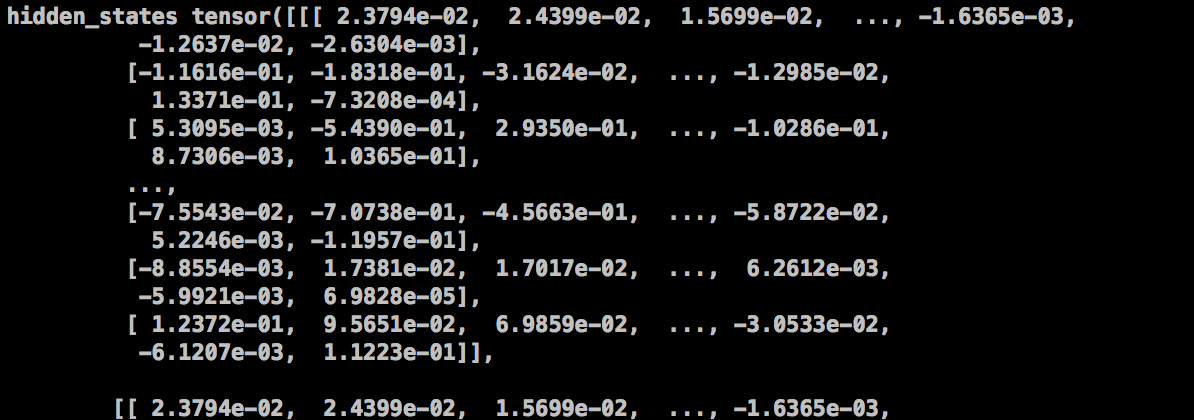

in the BartEncoder forward function

lightseq