Xin Yao

Great job. However, when training on the QNLI task, I hit the following problem at the 3rd epoch, while the CoLA and SQuAD tasks do not run into it...

OOMKilled with the default script:

```
set -x
export BS=${BS:-16}
export MEMCAP=${MEMCAP:-0}
export GPUNUM=${GPUNUM:-1}
export MODLE_PATH="facebook/opt-${MODEL}"
model_name_or_path=./opt6.7b
# HF_DATASETS_OFFLINE=1 TRANSFORMERS_OFFLINE=1
torchrun \
  --nproc_per_node ${GPUNUM} \
  --master_port 19198 \
  train_gemini_opt.py \
  --mem_cap ${MEMCAP} \
...
```

### Script:

```
set -x
export BS=${BS:-1}
export MEMCAP=${MEMCAP:-40}
export MODEL=${MODEL:-"30b"}
export GPUNUM=${GPUNUM:-8}
mkdir -p ./logs
export MODLE_PATH="facebook/opt-${MODEL}"
torchrun \
  --nproc_per_node ${GPUNUM} \
  --master_port 19198 \
  train_gemini_opt.py \
  --mem_cap ${MEMCAP}...
```

Amazing work. But may I ask how to solve the following problem?

Cool job, but I am getting the following error after running convert-gptq-to-ggml.py.

No output after running `.\quantize.exe .\models\7B\ggml-model-f16.bin \models\7B\ggml-model-q4_0.bin 2`. Any help would be appreciated, thanks!

Is there a discussion group?

Same question as above. I'd be very grateful if someone could add me!

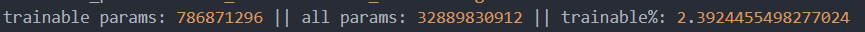

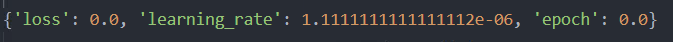

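Code:

```
from transformers import LlamaForCausalLM
from peft import get_peft_model, prepare_model_for_int8_training

# `path` and `peft_config` are defined elsewhere in the user's script
model = LlamaForCausalLM.from_pretrained(path, load_in_8bit=True, device_map="auto")
model = prepare_model_for_int8_training(model)
model = get_peft_model(model, peft_config)
model.print_trainable_parameters()
```

Platform:

```
torch 1.12 (cu113)
python 3.8.16
peft 0.3.0.dev0
transformers 4.29.0.dev0
```

And the model works well...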