ColossalAI


[BUG]: OPT30B CUDA out of memory

Script:

```bash
set -x

# Each setting can be overridden from the environment.
export BS=${BS:-1}
export MEMCAP=${MEMCAP:-40}
export MODEL=${MODEL:-"30b"}
export GPUNUM=${GPUNUM:-8}

mkdir -p ./logs

# Hugging Face model ID, e.g. facebook/opt-30b
export MODLE_PATH="facebook/opt-${MODEL}"

# Launch one training process per GPU
torchrun \
  --nproc_per_node ${GPUNUM} \
  --master_port 19198 \
  train_gemini_opt.py \
  --mem_cap ${MEMCAP} \
  --model_name_or_path ${MODLE_PATH} \
  --batch_size ${BS}
```

Environment

- torch: 1.13 (cu117 build)
- GPU: 8 × A100 48G
- python: 3.9

There is not enough GPU memory. Please try a smaller model such as OPT-1.3B and see if it works.
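A rough back-of-envelope check of why GPU memory alone cannot hold OPT-30B training state. It assumes the common mixed-precision Adam accounting of 16 bytes per parameter (fp16 weights and gradients plus fp32 master weights, momentum, and variance), uses nominal parameter counts, and ignores activations; none of these figures come from the thread.

```bash
#!/usr/bin/env bash
# Rough model-state memory estimate for mixed-precision Adam training.
# Assumption: 16 bytes per parameter (fp16 weights + fp16 grads + fp32 master
# weights + fp32 Adam momentum + fp32 Adam variance); activations are ignored.
set -euo pipefail

BYTES_PER_PARAM=16

# model_state_gb PARAMS_IN_BILLIONS -> decimal GB (1 GB = 1e9 bytes)
model_state_gb() {
  echo $(( $1 * BYTES_PER_PARAM ))
}

GPU_TOTAL_GB=$(( 8 * 48 ))   # 8 GPUs x 48 GB, as reported in the issue

for b in 13 30; do
  need=$(model_state_gb "$b")
  echo "OPT-${b}B: ~${need} GB model states vs ${GPU_TOTAL_GB} GB total GPU memory"
done
```

Under these assumptions OPT-13B (~208 GB) fits within the 384 GB of combined GPU memory while OPT-30B (~480 GB) does not, so part of its state would have to be offloaded to CPU memory.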

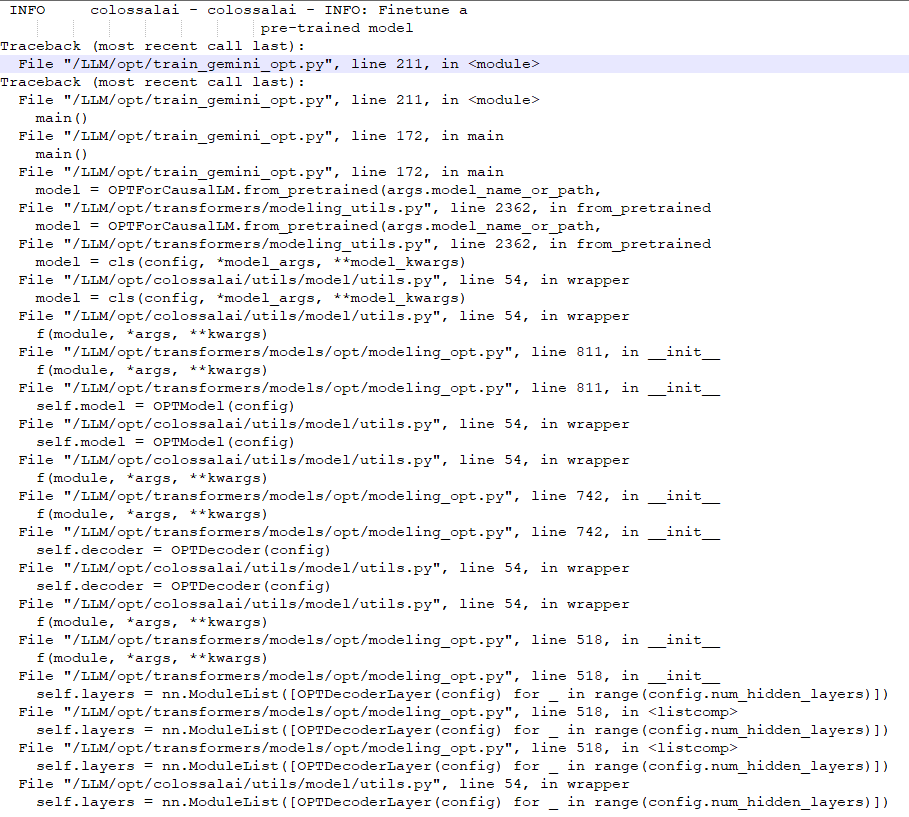

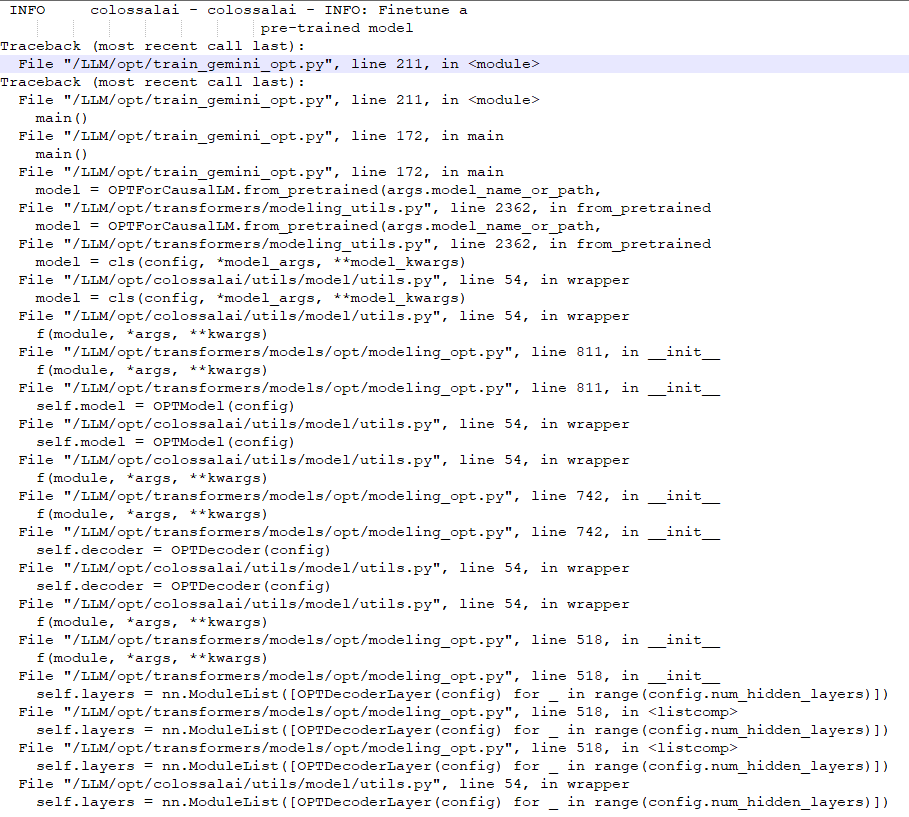

OPT-13B works, but judging from the log, OPT-30B fails during model loading.

Hi @iMountTai, the most likely cause is insufficient CPU (host) memory during model loading. This issue was closed due to inactivity. Thanks.
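A similar sketch for host memory during loading. It assumes (this is not confirmed in the thread) that each of the `GPUNUM` torchrun ranks materializes its own full copy of the weights in CPU memory before they are sharded; the byte sizes are 4 for fp32 and 2 for fp16 weights.

```bash
#!/usr/bin/env bash
# Rough host-RAM estimate for the model-loading step.
# Assumption (not confirmed in this thread): every torchrun rank loads its own
# full copy of the checkpoint into CPU memory before sharding.
set -euo pipefail

# host_load_gb PARAMS_IN_BILLIONS BYTES_PER_PARAM NUM_RANKS -> decimal GB
host_load_gb() {
  echo $(( $1 * $2 * $3 ))
}

GPUNUM=${GPUNUM:-8}

echo "OPT-30B, fp32 weights, ${GPUNUM} ranks: ~$(host_load_gb 30 4 "$GPUNUM") GB host RAM"
echo "OPT-30B, fp16 weights, ${GPUNUM} ranks: ~$(host_load_gb 30 2 "$GPUNUM") GB host RAM"
```

With 8 ranks this comes to roughly 960 GB (fp32) or 480 GB (fp16); the machine's actual host RAM can be compared against these numbers with `free -g`.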