SomanathSankaran


I also tried to validate the returned data flow activity using `validate()` and got the error below from `df_act.validate()`: `msrest.exceptions.ValidationError("Parameter 'ExecuteDataFlowActivity.data_flow' can not be None.")`
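As far as I can tell, the `data_flow` attribute of `ExecuteDataFlowActivity` in the azure-mgmt-datafactory models is read from `typeProperties.dataFlow` in the activity JSON, so one way to narrow this down is to check whether that reference is present in the raw definition at all. Below is a minimal stdlib-only sketch, assuming the activity JSON is available as a dict (e.g. copied from the pipeline's JSON view); the function name `missing_data_flow_reference` and the sample activities are hypothetical.

```python
from typing import Any


def missing_data_flow_reference(activity: dict[str, Any]) -> list[str]:
    """Return problems found in an ExecuteDataFlow activity's dataFlow reference.

    Hypothetical helper: an empty list means typeProperties.dataFlow looks populated.
    """
    problems: list[str] = []
    if activity.get("type") != "ExecuteDataFlow":
        problems.append(f"activity type is {activity.get('type')!r}, not 'ExecuteDataFlow'")
    # Treat a missing or null typeProperties block as empty
    type_props = activity.get("typeProperties") or {}
    data_flow = type_props.get("dataFlow")
    if data_flow is None:
        # This is the case that would leave data_flow as None after deserialization
        problems.append("typeProperties.dataFlow is missing (data_flow would be None)")
    else:
        if not data_flow.get("referenceName"):
            problems.append("typeProperties.dataFlow.referenceName is empty")
        if data_flow.get("type") != "DataFlowReference":
            problems.append("typeProperties.dataFlow.type should be 'DataFlowReference'")
    return problems


# Hypothetical sample activities
broken = {"name": "RunFlow", "type": "ExecuteDataFlow", "typeProperties": {}}
ok = {
    "name": "RunFlow",
    "type": "ExecuteDataFlow",
    "typeProperties": {"dataFlow": {"referenceName": "MyDataFlow", "type": "DataFlowReference"}},
}
print(missing_data_flow_reference(broken))
# ['typeProperties.dataFlow is missing (data_flow would be None)']
print(missing_data_flow_reference(ok))  # []
```

If the raw JSON already contains the reference, the problem is more likely in how the activity object is being built or returned in code rather than in the pipeline definition itself.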

Thanks @mohanbaabu1996, eagerly waiting for the dashboard.

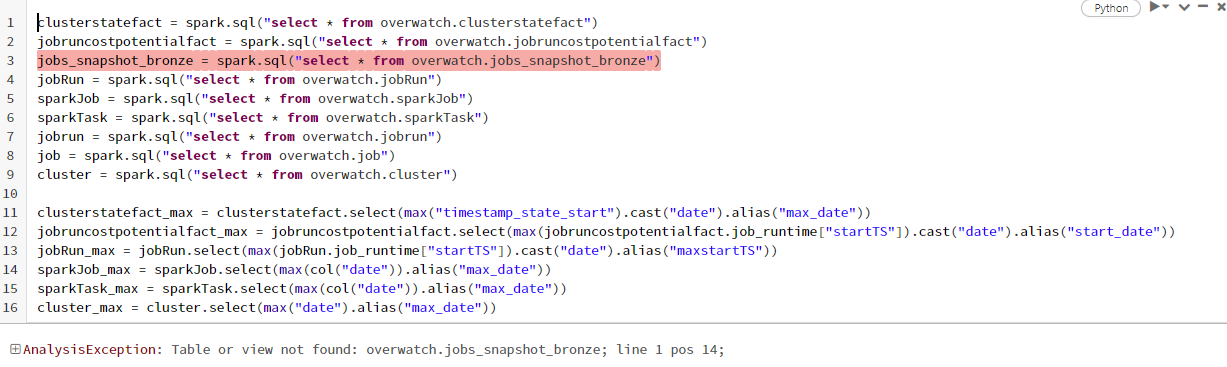

Hi @mohanbaabu1996, I am facing the error below.

I am not sure whether I missed something while collecting the data. I used this notebook to create the required tables: https://databrickslabs.github.io/overwatch/assets/GettingStarted/azure_runner_docs_example_060.html

These are the arguments I passed:

```scala
val storagePrefix = "/mnt/overwatch_data".toLowerCase // PRIMARY OVERWATCH OUTPUT PREFIX
val etlDB = "overwatch_etl".toLowerCase
val consumerDB = "overwatch".toLowerCase
val secretsScope = "OVERWATCH-SCOPE"
val dbPATKey = "DBPAT"
// ...
```

Please let me know if I missed something while collecting the data.

@mohanbaabu1996, I am available at 12:30 pm.

Observation: since it is default python, the notebooks used by for-each tasks are not copied to the bundle source folder, and the queries used by SQL tasks are not copied to the bundle source folder either.
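To find which files have to be copied into the bundle by hand, one option is to scan the job definition for those two kinds of references. Below is a minimal stdlib-only sketch, assuming the job JSON follows the Jobs API 2.1 shape (`tasks[].for_each_task.task.notebook_task.notebook_path` and `tasks[].sql_task.query.query_id`); the function name `find_uncopied_sources` and the sample job are hypothetical.

```python
from typing import Any


def find_uncopied_sources(job: dict[str, Any]) -> dict[str, list[str]]:
    """List for-each notebook paths and SQL query IDs referenced by a job.

    Hypothetical helper. Accepts either a full Jobs API `get` response
    (with a "settings" key) or the bare settings object.
    """
    settings = job.get("settings", job)
    notebooks: list[str] = []
    queries: list[str] = []
    for task in settings.get("tasks", []):
        # A notebook run inside a for-each loop is nested under for_each_task.task
        inner = task.get("for_each_task", {}).get("task", {})
        path = inner.get("notebook_task", {}).get("notebook_path")
        if path:
            notebooks.append(path)
        # A SQL task that runs a saved query references it only by ID
        query_id = task.get("sql_task", {}).get("query", {}).get("query_id")
        if query_id:
            queries.append(query_id)
    return {"for_each_notebooks": notebooks, "sql_queries": queries}


# Hypothetical job definition
job = {
    "job_id": 123,
    "settings": {
        "tasks": [
            {
                "task_key": "loop",
                "for_each_task": {
                    "inputs": "[1, 2]",
                    "task": {
                        "task_key": "inner",
                        "notebook_task": {"notebook_path": "/Workspace/Users/me/process"},
                    },
                },
            },
            {"task_key": "report", "sql_task": {"warehouse_id": "abc", "query": {"query_id": "q-1"}}},
        ]
    },
}
print(find_uncopied_sources(job))
# {'for_each_notebooks': ['/Workspace/Users/me/process'], 'sql_queries': ['q-1']}
```

This only lists the references; exporting the notebooks and queries themselves would still be a separate step.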

With Databricks Asset Bundles, `databricks bundle deployment bind` is creating multiple copies of the job and dashboard when I deploy after binding. Is it necessary to run `databricks bundle generate` every time when using bind? My assumption is...