jiyunjie

I read further into your evaluation scripts and found that your evaluation metrics are too strict. In your code, if an argument is correctly classified, its corresponding predicted trigger should also...

Is query masking unnecessary, since the padded queries will be masked out by the next block's key masking?
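A toy check of the reasoning in this question: the sketch below applies only key masking in two stacked single-head self-attention "blocks" and shows that whatever ends up in a padded row after block 1 has no effect on the real positions after block 2. It is a minimal sketch, not any particular repository's implementation; it assumes identity Q/K/V projections, a score of `-1e9` for masked keys, and hypothetical toy embeddings.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_key_masked(x: Matrix, valid: List[bool]) -> Matrix:
    """Single-head self-attention with identity Q/K/V projections (simplifying assumption).

    Only KEY masking is applied: padded keys get a score of -1e9, so their softmax
    weight underflows to exactly 0.0. Padded QUERY rows are still computed.
    """
    d = len(x[0])
    out = []
    for q in x:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d) if ok else -1e9
            for k, ok in zip(x, valid)
        ]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out


valid = [True, True, False]               # last position is padding
x = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # hypothetical toy embeddings

h1 = self_attention_key_masked(x, valid)  # "block 1"
h1_garbage = [row[:] for row in h1]
h1_garbage[2] = [123.0, -456.0]           # overwrite the padded row with arbitrary values

out_clean = self_attention_key_masked(h1, valid)  # "block 2"
out_garbage = self_attention_key_masked(h1_garbage, valid)

# Real positions match exactly: block 2's key masking ignores the padded row's contents.
print(out_clean[:2] == out_garbage[:2])  # True
```

Two caveats apply. The padded rows of the *last* layer still have to be excluded from the loss or from any pooling, since nothing downstream masks them. Also, a zero attention weight only neutralises finite values: `0.0 * nan` is still `nan`, so if padded rows ever become NaN (for example, a fully masked row whose scores are all `-inf`), the NaN would propagate. This is one reason some implementations mask queries anyway.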

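It is probably a code-efficiency issue. With the same hardware and the same hyperparameter configuration, other open-source code I tried trains a Bi-LSTM+CRF model of the same architecture very quickly. @XINGXIAOYU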

> Same question: are you considering releasing a model based on LLaMA-7B?

A LLaMA-7B model has already been released, trained on 2 million samples.

> > > Thank you for reporting this issue. 130 TFLOPS is indeed too low for the H100. I quickly reviewed your script and have some suggestions:
> > >
> > > ...
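To put "130 TFLOPS is too low for the H100" in context, a per-GPU TFLOPS figure can be compared with the GPU's peak as a model FLOPs utilisation (MFU). The sketch below uses the common approximation of 6 × N training FLOPs per token (about 2N forward, 4N backward), which ignores attention-score FLOPs. Megatron-LM's own throughput log may use a more detailed formula, so the numbers will not match exactly. The peak value (about 989 dense BF16 TFLOPS for H100 SXM) and the run parameters in the usage lines are assumptions; substitute the figures for your SKU and job.

```python
# Assumption: dense (non-sparse) BF16 Tensor Core peak for H100 SXM, roughly half of the
# ~1,979 TFLOPS with-sparsity figure on NVIDIA's datasheet. Other SKUs (e.g. PCIe) differ.
H100_SXM_BF16_DENSE_PEAK_TFLOPS = 989.0


def achieved_tflops_per_gpu(num_params: float, tokens_per_second: float, num_gpus: int) -> float:
    """Approximate training TFLOPS per GPU via the 6 * N FLOPs-per-token rule.

    num_params: model parameter count N.
    tokens_per_second: aggregate training throughput across all GPUs.
    """
    flops_per_second = 6.0 * num_params * tokens_per_second
    return flops_per_second / num_gpus / 1e12


def mfu(achieved_tflops: float, peak_tflops: float) -> float:
    # Fraction of the hardware peak that the model's useful FLOPs account for.
    return achieved_tflops / peak_tflops


# Hypothetical run: 7B-parameter model, 8 GPUs, 24,000 tokens/s in aggregate.
tflops = achieved_tflops_per_gpu(7e9, 24_000, 8)
print(f"{tflops:.1f} TFLOPS/GPU")  # 126.0 TFLOPS/GPU
print(f"MFU at 130 TFLOPS: {mfu(130.0, H100_SXM_BF16_DENSE_PEAK_TFLOPS):.1%}")  # MFU at 130 TFLOPS: 13.1%
```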

> Hi, thanks for the suggestions.
> I retested the throughput following your suggestions. To be more specific:
>
> 1. Updated Megatron-LM to the latest commit (https://github.com/NVIDIA/Megatron-LM/commit/ba773259dbe5735fbd91ca41e7f4ded60b335c52)
> ...

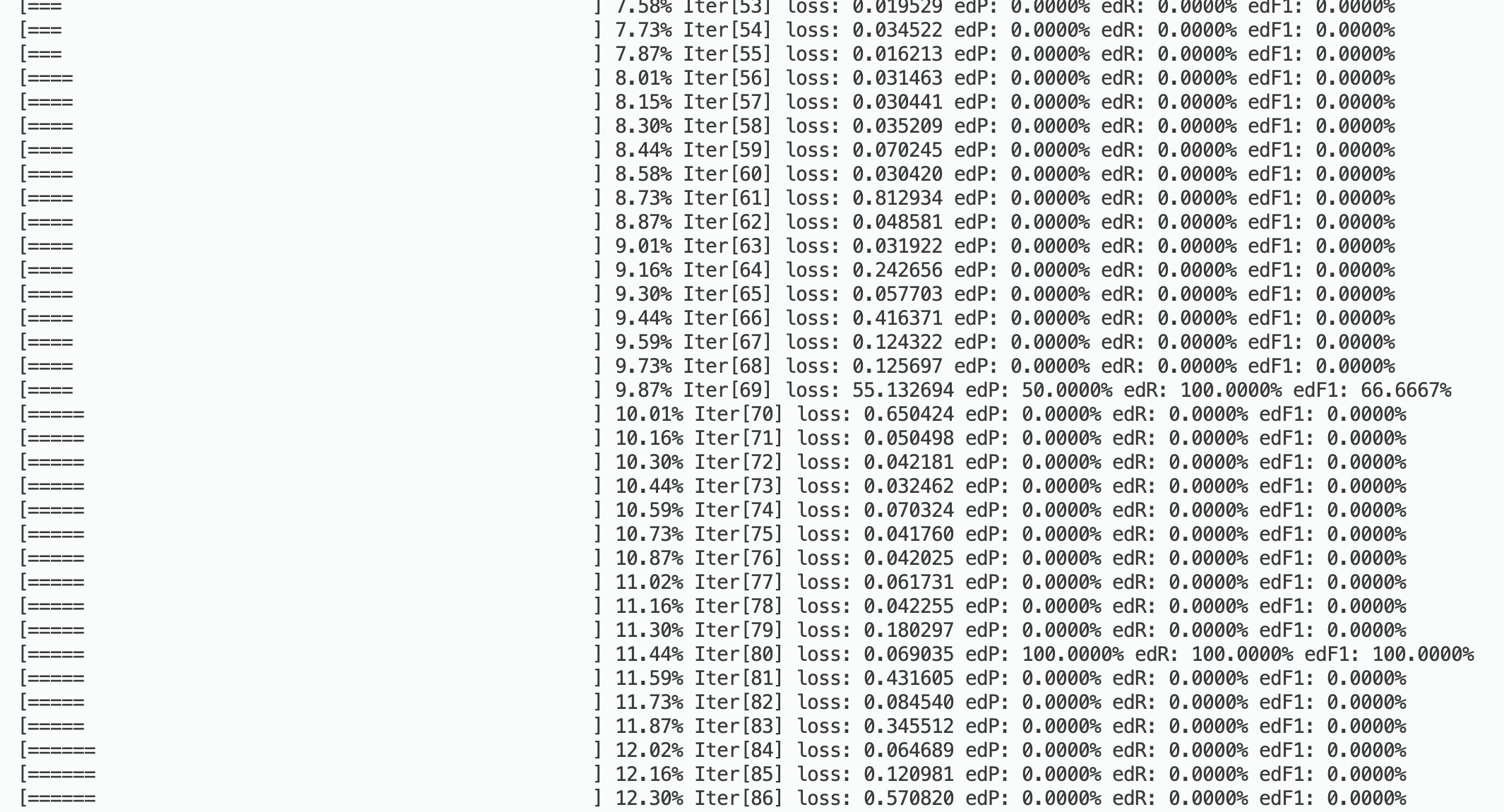

> Hi,
> During my test I got the same problem. Even after many epochs, precision, recall, and F1 are 0 most of the time.
>
> Did you find the...
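Under exact-match scoring, precision, recall, and F1 are all exactly 0 whenever the true-positive count is 0. That happens both when the model predicts nothing and when it predicts something that never matches a gold item exactly. A minimal sketch, assuming predictions and gold labels are hypothetical `(start, end, label)` tuples, shows why logging the raw counts next to the scores helps tell these cases apart:

```python
from typing import Hashable, Set, Tuple


def count_matches(gold: Set[Hashable], pred: Set[Hashable]) -> Tuple[int, int, int]:
    """Exact-match counting: a prediction counts only if the whole tuple equals a gold tuple."""
    tp = len(gold & pred)
    return tp, len(pred) - tp, len(gold) - tp  # (tp, fp, fn)


def prf1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    # Guard each ratio against 0/0; all three metrics are 0.0 whenever tp == 0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = {(0, 2, "PER"), (5, 6, "LOC")}  # hypothetical gold spans

print(count_matches(gold, set()), prf1(*count_matches(gold, set())))
# (0, 0, 2) (0.0, 0.0, 0.0): the model predicts nothing
print(count_matches(gold, {(0, 1, "PER")}), prf1(*count_matches(gold, {(0, 1, "PER")})))
# (0, 1, 2) (0.0, 0.0, 0.0): a prediction exists, but an off-by-one span never matches
```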

Actually, you can train or run inference in fp16 with a simple setting; [this doc](https://huggingface.co/docs/transformers/v4.13.0/en/performance#fp16) may help. We are going to provide some mixed-precision training and inference demo code as...
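One detail behind fp16 *training* (as opposed to inference) is loss scaling: very small gradients underflow to zero in half precision. Multiplying the loss by a scale factor S multiplies every gradient by S (chain rule), which keeps them representable, and the gradients are divided by S again in higher precision before the update. The sketch below illustrates only this numeric effect using Python's `struct` half-precision (`"e"`) format. The gradient value and the static scale of 2^16 are hypothetical. Tools such as PyTorch's `GradScaler` adjust the scale dynamically instead.

```python
import struct


def to_fp16(x: float) -> float:
    # Round-trip a Python float through IEEE 754 binary16 (struct format "e").
    return struct.unpack("<e", struct.pack("<e", x))[0]


LOSS_SCALE = 2.0 ** 16  # hypothetical static loss scale
grad = 1e-8             # hypothetical small gradient value

naive = to_fp16(grad)  # below half the smallest fp16 subnormal (~6e-8), so it rounds to 0.0
# Scaling the loss scales the gradient by the same factor; store it in fp16, then unscale in float64.
scaled = to_fp16(grad * LOSS_SCALE) / LOSS_SCALE

print(naive)   # 0.0
print(scaled)  # close to 1e-8 (within fp16 rounding error)
```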

same issue