zjzdy

@Kapeli Could you try testing lrzip? http://ck.kolivas.org/apps/lrzip/ You can use -l or -Ul (LZO compression) for the comparison if you want fast decompression. Benchmarks: http://ck.kolivas.org/apps/lrzip/README.benchmarks

@Kapeli @trollixx Dash doesn't need to decompress the whole tgz file. (I'm not sure whether that is what you meant?) But Zeal still needs to decompress the whole tgz file...

Kind reminder: the index file can be extracted in advance, because the index file is I/O intensive.
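To illustrate what "extract the index file in advance" could look like, here is a minimal Python sketch using the standard `tarfile` module. The `.dsidx` suffix, the member paths, and the helper names `extract_index_first` and `build_demo_archive` are assumptions made for the demo, not something taken from Dash or Zeal. Note that a tgz is still read sequentially; the sketch only avoids writing out everything except the index.

```python
import io
import tarfile


def extract_index_first(archive, suffix=".dsidx"):
    """Return {member name: bytes} for regular files whose name ends with suffix.

    `suffix` is an assumed naming convention for the index file.
    """
    found = {}
    with tarfile.open(fileobj=archive, mode="r:gz") as tar:
        for member in tar:
            if member.isfile() and member.name.endswith(suffix):
                found[member.name] = tar.extractfile(member).read()
    return found


def build_demo_archive():
    """Build a tiny in-memory tgz with hypothetical member names."""
    files = [
        ("Demo.docset/Contents/Resources/Documents/index.html", b"<html></html>"),
        ("Demo.docset/Contents/Resources/docSet.dsidx", b"fake index bytes"),
    ]
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        for name, data in files:
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    buf.seek(0)
    return buf


index_files = extract_index_first(build_demo_archive())
print(sorted(index_files))
```

Only the index member is read into memory here; the HTML documents stay inside the archive until they are actually needed.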

My log; I'm not sure whether it is the same problem: [2018-04-30 21:49:22] [ERROR] Request #5 to "http://s.web2.qq.com/api/get_user_friends2" hit a network error HTTPConnectionPool(host='s.web2.qq.com', port=80): Read timed out. (read timeout=30), html='' [2018-04-30 21:49:22] [ERROR] Failed to get the friend list

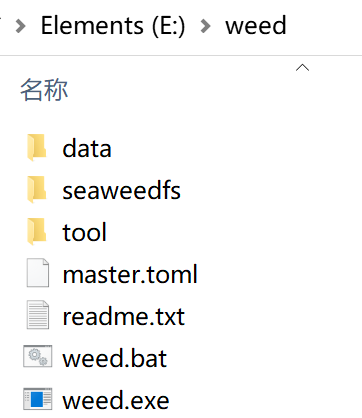

Is the last directory screenshot not enough? Do you need the directory path?

I ran these commands to fix the problem:

```
weed.exe fix E:/weed/data/99.dat
weed.exe fix E:/weed/data/104.dat
```

But a command like `weed.exe -volumeId 99 E:/weed/data/` does NOT print anything and does NOT modify the idx file....

I exited weed first, then ran weed fix offline.

Oh, yes. So, do you want to implement this feature? I am looking forward to it, because using rclone to mount SeaweedFS's S3 API, or using RaiDrive on Windows to mount WebDAV, to write...

I used a hack to fix the error: change `getElementsByTagName('xxx')` to `getElementsByTagNameNS("*", "xxx")`
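A minimal sketch of why that swap helps, written with Python's `xml.dom.minidom`, which exposes the same two DOM methods. The XML snippet, the `d:` prefix, and the `urn:example` namespace are made up for the demo; the real document in question may look different. When elements carry a namespace prefix, a lookup by plain tag name misses them, while the namespace-wildcard lookup matches on the local name only.

```python
from xml.dom import minidom

# Hypothetical namespaced document; "xxx" stands in for the real element name.
XML = '<d:root xmlns:d="urn:example"><d:xxx>hello</d:xxx></d:root>'

doc = minidom.parseString(XML)

# Matches on the full tag name ("d:xxx"), so a bare "xxx" finds nothing.
by_name = doc.getElementsByTagName("xxx")

# "*" matches any namespace; the second argument is the local name.
by_ns = doc.getElementsByTagNameNS("*", "xxx")

print(len(by_name), len(by_ns))
```

It is a hack in the sense that the wildcard ignores which namespace the element belongs to, so it could also match same-named elements from an unrelated namespace.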