jirongq


See, the operator controller has reported this error:

```log
E0804 16:26:05.774418 1 tidb_cluster_controller.go:126] TidbCluster: myapp/basic, sync failed TidbCluster: myapp/basic .Status.PD.Synced = false, can't failover, requeuing
E0804 16:26:35.762262 1 pd_member_manager.go:190]...
```

If [#25716](https://github.com/pingcap/tidb/issues/25716) gets fixed, is this still a potential problem?

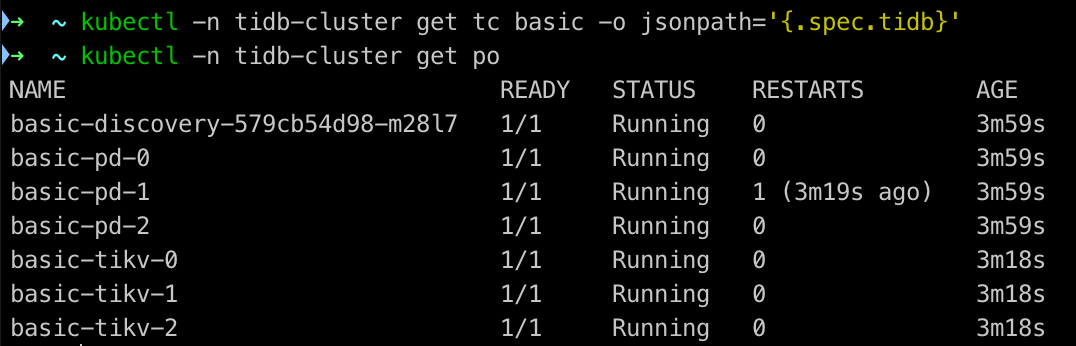

Maybe using `kubectl api-resources` to collect all the (needed) config could work for this? `kubectl api-resources --verbs=list -n tidb-cluster -o name | grep -Ei "tidbclusters.pingcap.com|pods" | xargs -n 1 kubectl get`...
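To make the idea concrete, here is a rough sketch of that pipeline wrapped in a function. The function name `collect_tidb_resources`, the `-o yaml` output, the `--namespaced` and `--ignore-not-found` flags, and the anchored grep pattern are my assumptions, not necessarily what the command above went on to do. It uses a `while read` loop instead of `xargs`, so each resource type is fetched in turn.

```shell
# Hypothetical helper: dump TidbCluster CRs and pods from one namespace as YAML.
# The resource filter and output format are assumptions; adjust as needed.
collect_tidb_resources() {
  ns="$1"
  # List every namespaced resource type that supports "list", keep only the
  # types we care about, then fetch each one from the target namespace.
  kubectl api-resources --verbs=list --namespaced -o name \
    | grep -Ei '^(tidbclusters\.pingcap\.com|pods)$' \
    | while read -r kind; do
        echo "# ${kind}"
        kubectl get "$kind" -n "$ns" -o yaml --ignore-not-found
      done
}
```

Something like `collect_tidb_resources tidb-cluster > dump.yaml` would then write everything into one file.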

Hi, you can first scale in TiDB to 2 replicas so that the cluster becomes normal; then the operator can proceed with the sync logic. After changing the affinity rules, you can scale out again to bring back tidb-2 if...
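One possible way to do the scale-in and the later scale-out is a merge patch on the `TidbCluster` object. This is only a sketch: the helper name `scale_tidb` is made up, and the namespace and cluster name in the usage line are placeholders for your own.

```shell
# Hypothetical helper: set spec.tidb.replicas on a TidbCluster via a merge patch.
# Arguments: <namespace> <cluster-name> <replicas>
scale_tidb() {
  ns="$1"
  cluster="$2"
  replicas="$3"
  kubectl patch tidbcluster "$cluster" -n "$ns" --type merge \
    -p "{\"spec\":{\"tidb\":{\"replicas\":${replicas}}}}"
}
```

For example, `scale_tidb myapp basic 2` to scale in, and `scale_tidb myapp basic 3` once the affinity rules are fixed.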

Hi hoyhbx, this error message is reported from https://github.com/pingcap/tidb-operator/blob/v1.4.4/pkg/manager/member/tidb_upgrader.go#L123-L133; it blocks the Operator from continuing with `upgradeTiDBPod`. The Operator's current sync logic syncs each component type one by one, in order,...

If we are already in the clear upgrade logic https://github.com/pingcap/tidb-operator/blob/v1.4.4/pkg/manager/member/tidb_member_manager.go#L305 , do we still need to check the pod's status, or can we just let it start upgrading? @hanlins

There is no such limit in the operator: you can deploy a `TidbCluster` with `.spec.tidb.replicas: 0` to not use TiDB; then you need to find a way to use TiKV directly as a RawKV...

Tested: as csuzhangxc said, it's OK to just omit the whole `spec.tidb` part, so there seems to be no need to add an `enabled` field.
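For illustration, a manifest without the `spec.tidb` part might look roughly like the sketch below. The helper name `tikv_only_manifest`, the cluster name, version, replica counts and storage sizes are all assumptions for the example, not the exact values I tested with.

```shell
# Hypothetical helper: prints a TidbCluster manifest with no spec.tidb section.
# Name, version, replica counts and storage sizes are illustrative assumptions.
tikv_only_manifest() {
  cat <<'EOF'
apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: basic
spec:
  version: v6.5.0
  pvReclaimPolicy: Retain
  pd:
    baseImage: pingcap/pd
    replicas: 3
    requests:
      storage: "1Gi"
    config: {}
  tikv:
    baseImage: pingcap/tikv
    replicas: 3
    requests:
      storage: "1Gi"
    config: {}
EOF
}
```

It could then be applied with something like `tikv_only_manifest | kubectl apply -n myapp -f -`.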

I think `helm repo update` is for this: https://docs.pingcap.com/tidb-in-kubernetes/stable/upgrade-tidb-operator#online-upgrade

In this doc, there are two different terms:
- TiDB cluster: a whole TiDB cluster, from TiDB's point of view
- TidbCluster: the CR of the operator; from the operator's point of view it's some managed...