wchen61

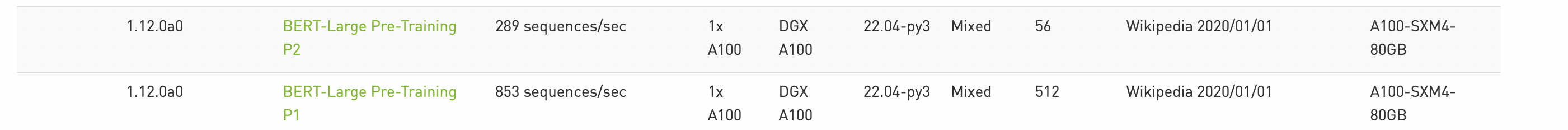

Hi, I have noticed that on an A100 80G, BERT Phase 1 and Phase 2 can reach a throughput of 853 and 289 sequences/sec respectively. I want to reproduce this result...

Is this code a typo? Should it be `half *__restrict grad`? https://github.com/NVIDIA/DeepLearningExamples/blob/b1fc3c46f508201405c76e4811c036b05e9773d7/PyTorch/Recommendation/DLRM/dlrm/cuda_src/dot_based_interact/dot_based_interact_tf32_bwd.cu#L32

**Describe the bug** Unable to quantize the Falcon-7b model (https://huggingface.co/tiiuae/falcon-7b); it throws an assertion error at `auto_gptq/nn_modules/qlinear/qlinear_exllama.py`, line 69: `assert infeatures % self.group_size == 0`. Versions: auto_gptq v0.7.1, transformers 4.40.0.
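The assertion quoted above fails whenever a layer's input dimension is not a multiple of the quantization group size. Here is a minimal sketch of that check in plain Python. The helper name `compatible_group_sizes`, the candidate list, and the value 4544 (which I am assuming is the relevant `infeatures` for Falcon-7b; the report does not state it) are all illustrative and not part of auto_gptq.

```python
def compatible_group_sizes(in_features, candidates=(32, 64, 128, 256)):
    """Return the candidate group sizes that evenly divide in_features.

    This mirrors the condition `infeatures % group_size == 0` from the
    assertion in the report; it is a hypothetical helper, not an
    auto_gptq API.
    """
    return [g for g in candidates if in_features % g == 0]


# Assumption: 4544 is used as an example input dimension for Falcon-7b.
in_features = 4544
for group_size in (32, 64, 128, 256):
    remainder = in_features % group_size
    status = "ok" if remainder == 0 else f"fails (remainder {remainder})"
    print(f"group_size={group_size}: {status}")

print("usable group sizes:", compatible_group_sizes(in_features))
```

Under that assumption, a group size of 128 would trip the assertion while 64 would not, so one thing to try is a smaller group size that divides the layer width.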

Hi, I want to know why the marlin kernel in vllm does not need the evict_first hint when copying B from global to shared memory. https://github.com/vllm-project/vllm/blob/7abba39ee64c1e2c84f48d7c38b2cd1c24bb0ebb/csrc/quantization/gptq_marlin/marlin.cuh#L71 As this optimization is specifically...