Vincent Lordier

Same problem here: points are shifted to the left, and I'm not sure why.

Will work on:

- [ ] Conversational reading (https://arxiv.org/pdf/1906.02738.pdf)

Any help, or suggestions for a better model, are welcome @abisee

Take a look here: [save location](https://github.com/Tramac/awesome-semantic-segmentation-pytorch/blob/master/scripts/train.py#L285) and here: [location argument](https://github.com/Tramac/awesome-semantic-segmentation-pytorch/blob/master/scripts/train.py#L92). The defaults are [set here](https://github.com/Tramac/awesome-semantic-segmentation-pytorch/blob/master/scripts/train.py#L87):

```python
import argparse

parser = argparse.ArgumentParser()  # parser set-up abbreviated; excerpt only
parser.add_argument('--save-dir', default='~/.torch/models', help='Directory for saving checkpoint models')
parser.add_argument('--save-epoch', type=int, default=10, help='save model every checkpoint-epoch')
# ...
```
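Both defaults can be overridden on the command line instead of editing the script. Below is a minimal sketch that re-declares only the two arguments quoted above with the standard `argparse` module. Note that `argparse` stores `~/.torch/models` as a literal string; whether `~` is expanded depends on the training script calling something like `os.path.expanduser` (assumed here and done explicitly):

```python
import argparse
import os

# Re-declare only the two arguments quoted above (not the full train.py parser).
parser = argparse.ArgumentParser()
parser.add_argument('--save-dir', default='~/.torch/models',
                    help='Directory for saving checkpoint models')
parser.add_argument('--save-epoch', type=int, default=10,
                    help='save model every checkpoint-epoch')

# Same as running: python scripts/train.py --save-dir ./checkpoints --save-epoch 5
args = parser.parse_args(['--save-dir', './checkpoints', '--save-epoch', '5'])

# argparse keeps the string verbatim; expand '~' before creating the directory.
save_dir = os.path.expanduser(args.save_dir)
print(save_dir, args.save_epoch)  # ./checkpoints 5

# With no flags, the defaults apply; '~' only becomes the home directory once expanded.
defaults = parser.parse_args([])
print(os.path.expanduser(defaults.save_dir))
```

`argparse` maps `--save-dir` to the attribute `save_dir`, which is why the script reads it with an underscore.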

@Jason-xin you can generate the trimaps using `./data/gen_trimap.sh`, or simply tweak `gen_trimap.py`. I found that using

```python
dilated = cv2.dilate(msk, kernel, iterations=3) * 255
eroded = cv2.erode(msk, kernel, iterations=1)
```

...
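To illustrate the dilate/erode idea behind trimap generation, here is a hypothetical minimal sketch in plain NumPy. It is not the repo's `gen_trimap.py`. It assumes `msk` is a binary 0/1 `uint8` array and a 3x3 square kernel. The eroded mask becomes confident foreground (255), the band between the dilated and eroded masks becomes unknown (128), and the rest is background (0). On binary masks, `cv2.dilate`/`cv2.erode` with `np.ones((3, 3), np.uint8)` should give the same morphology:

```python
import numpy as np


def _shifts(mask: np.ndarray, pad_value: int) -> np.ndarray:
    """Stack the 9 shifted copies of `mask` covered by a 3x3 square kernel."""
    padded = np.pad(mask, 1, mode="constant", constant_values=pad_value)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])


def dilate(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    # Binary dilation: a pixel turns on if any 3x3 neighbour is on.
    out = mask.copy()
    for _ in range(iterations):
        out = _shifts(out, 0).max(axis=0)
    return out


def erode(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    # Binary erosion: a pixel stays on only if all 3x3 neighbours are on.
    # Padding with 1 keeps the image border itself from eroding the mask.
    out = mask.copy()
    for _ in range(iterations):
        out = _shifts(out, 1).min(axis=0)
    return out


def make_trimap(msk: np.ndarray, dilate_iter: int = 3, erode_iter: int = 1) -> np.ndarray:
    """Return a trimap: 0 = background, 128 = unknown, 255 = foreground."""
    dilated = dilate(msk, dilate_iter)
    eroded = erode(msk, erode_iter)
    trimap = np.zeros_like(msk, dtype=np.uint8)
    trimap[dilated == 1] = 128  # anything the dilated mask touches is at least "unknown"
    trimap[eroded == 1] = 255   # the eroded core is confident foreground
    return trimap
```

More dilation iterations widen the unknown band, so the matting network has a larger region to resolve. More erosion iterations shrink the region treated as certain foreground.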

You can train the Trimap and Matting networks separately by freezing one while training the other: set `requires_grad = False` on the frozen network's parameters in `train.py`. For instance, after loading the model:

```python
# depending on the training...
```
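Here is a minimal PyTorch sketch of that freezing step. The `TwoStageNet` class and its `t_net`/`m_net` attributes are hypothetical placeholders, not the names used in `train.py`, so substitute your model's actual submodules:

```python
import torch
import torch.nn as nn


class TwoStageNet(nn.Module):
    """Hypothetical stand-in for a model with a trimap branch and a matting branch."""

    def __init__(self):
        super().__init__()
        self.t_net = nn.Conv2d(3, 3, kernel_size=3, padding=1)  # placeholder trimap network
        self.m_net = nn.Conv2d(6, 1, kernel_size=3, padding=1)  # placeholder matting network


model = TwoStageNet()

# Example phase: train only the matting branch and keep the trimap branch fixed.
model.t_net.requires_grad_(False)  # sets requires_grad = False on every t_net parameter
model.m_net.requires_grad_(True)

# Hand the optimizer only the parameters that still require gradients.
optimizer = torch.optim.Adam(
    [p for p in model.parameters() if p.requires_grad], lr=1e-4)

# Sanity check: after a backward pass the frozen branch has no gradients.
x = torch.randn(1, 3, 8, 8)
trimap = model.t_net(x)
alpha = model.m_net(torch.cat([x, trimap], dim=1))
alpha.mean().backward()
```

To switch phases, flip the two `requires_grad_` calls and rebuild the optimizer. If the frozen branch contains BatchNorm or dropout layers, also call `.eval()` on it, because `requires_grad` alone does not stop BatchNorm running statistics from updating.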

@kkkmax do you mean that, to converge, we need a pre-trained FCN8s like the one found here https://github.com/wkentaro/fcn, and then pass it via `--pretrained_fcn8s`? Could you elaborate, please?

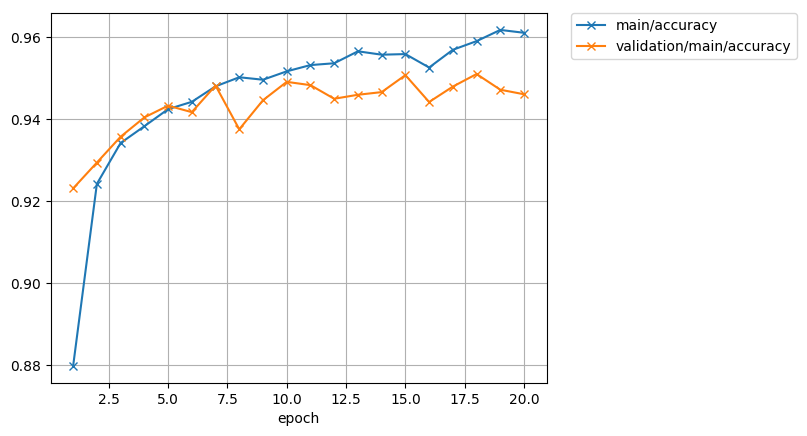

Answering my own question, for others: this is the sort of accuracy we get using the pre-trained FCN8s from the link above.

You need to train the model properly first, using a pre-trained FCN model. See https://github.com/takiyu/portrait_matting/issues/22. These are the sort of masks (trimaps) you can generate after just a few epochs...

@Squadrick you mentioned you'd implement DCN v2 in TF 2: have you made any progress on this?

There is a TF2 version [here](https://github.com/MrRobot2211/deep-photo-enhance-t2) that anyone can help work on.