Po-Han Huang (NVIDIA)

@DingJuPeng1 You can try using the Polygraphy tool (https://github.com/NVIDIA/TensorRT/blob/main/tools/Polygraphy/how-to/debug_accuracy.md) to see which layer(s) produce wrong results. Also, I would suggest that you try a newer TRT version like TRT 8.4 GA...

Yes, that was a bug already fixed in TRT 8.4.

@MarceJara Which TRT version did you use? Could you try TRT 8.4.1?

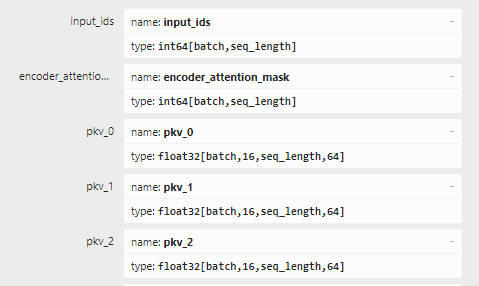

The input shapes of `input_ids` and `encoder_attention_mask` are both `[batch,seq_length]` in your ONNX model, but the optShapes you provide have different `seq_length` between the...
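A quick way to catch this kind of mismatch before building is to parse the shape string and compare the inputs that are supposed to share dimensions. The sketch below is only an illustration: it assumes the shapes are passed in the trtexec-style `name:AxB,name:AxB` form, and the helper names `parse_shapes` and `mismatched_inputs` are hypothetical, not part of TensorRT.

```python
def parse_shapes(spec):
    """Parse a trtexec-style shape spec, e.g. 'a:1x128,b:1x128', into {name: dims}."""
    shapes = {}
    for item in spec.split(","):
        # Split on the last ':' so tensor names containing ':' still parse.
        name, _, dims = item.rpartition(":")
        shapes[name] = tuple(int(d) for d in dims.split("x"))
    return shapes


def mismatched_inputs(spec, names):
    """Return the inputs in `names` whose shape differs from the first one listed."""
    shapes = parse_shapes(spec)
    reference = shapes[names[0]]
    return [n for n in names[1:] if shapes[n] != reference]


# Hypothetical optShapes value with inconsistent seq_length (128 vs 64).
opt = "input_ids:1x128,encoder_attention_mask:1x64"
print(mismatched_inputs(opt, ["input_ids", "encoder_attention_mask"]))
```

An empty list means the listed inputs agree; any name returned has a shape that differs from `input_ids`.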

Thanks. We will debug this. @zerollzeng Could you repro this and file an internal tracker bug? Thanks

By default, TRT assumes that the network inputs/outputs are in FP32 linear (i.e. NCHW) format. However, many tactics in TRT require different formats, like NHWC8 or NC/32HW32 formats, so TRT...
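To make the difference between these formats concrete, here is a rough sketch of how the same `(n, c, h, w)` element maps to a memory offset in linear NCHW versus NHWC8, where the channel dimension is padded to a multiple of 8 and stored innermost. The function names are hypothetical and the code is plain Python arithmetic, not a TensorRT API.

```python
def nchw_offset(n, c, h, w, C, H, W):
    """Element offset in a linear (NCHW) layout: W is the fastest-varying dimension."""
    return ((n * C + c) * H + h) * W + w


def nhwc8_offset(n, c, h, w, C, H, W):
    """Element offset in an NHWC8 layout: C is innermost and padded to a multiple of 8."""
    c_padded = (C + 7) // 8 * 8
    return ((n * H + h) * W + w) * c_padded + c


# Same tensor shape (C=3, H=2, W=2), same element (channel 1 at h=0, w=0).
print(nchw_offset(0, 1, 0, 0, C=3, H=2, W=2))
print(nhwc8_offset(0, 1, 0, 0, C=3, H=2, W=2))
```

Because the same logical element lands at a different offset in each layout, data has to be physically rearranged whenever it moves between a linear-format tensor and a tactic that expects a different format.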

@MaxeeCR Could you check if you have set the calibration profile correctly? See: https://docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html#int8-calib-dynamic-shapes

@MaxeeCR Could you provide your ONNX file so that we can repro and debug this issue? Thanks

@achigin Could you try TRT 8.4 and see if the issue still exists? Thanks

Correct, we don't have tactics for 3D convs with asymmetric padding on older GPUs such as Pascal.