marcusau


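I have finished my word segmentation model and the results are satisfactory. If I want to use your POS tagger to train a model that assigns a POS tag to each word token in the segmentation output, what structure should I convert the corpus into?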
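Here is a minimal sketch of two common corpus layouts. It assumes the tagger reads plain-text training files. The format that the tagger in question actually expects is not stated here, so check its README or sample data first.

- The first layout keeps the segmentation as-is and writes one `word<TAB>tag` pair per line, with a blank line between sentences.
- The second layout splits each word into characters with `B-`/`I-` prefixed tags, which is what a character-based sequence labeller needs.

The tag names (`PN`, `VV`, `NN`, Penn Chinese Treebank style) are only illustrative, and both function names are hypothetical.

```python
from typing import List, Tuple

Sentence = List[Tuple[str, str]]  # [(word, pos_tag), ...] for one sentence


def to_word_per_line(sentences: List[Sentence]) -> str:
    """One 'word<TAB>tag' line per token; sentences separated by a blank line."""
    blocks = ["\n".join(f"{word}\t{tag}" for word, tag in sent) for sent in sentences]
    return "\n\n".join(blocks) + "\n"


def to_char_bio(sentence: Sentence) -> List[Tuple[str, str]]:
    """Split each word into characters: first char gets B-<tag>, the rest I-<tag>."""
    pairs = []
    for word, tag in sentence:
        for i, ch in enumerate(word):
            pairs.append((ch, ("B-" if i == 0 else "I-") + tag))
    return pairs


if __name__ == "__main__":
    corpus = [[("我", "PN"), ("完成", "VV"), ("模型", "NN")]]
    print(to_word_per_line(corpus), end="")
    print(to_char_bio(corpus[0]))
```

The word-level layout fits a tagger whose input is already your segmenter's output. The character-level BIO layout lets a single model recover both the word boundaries and the tags.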

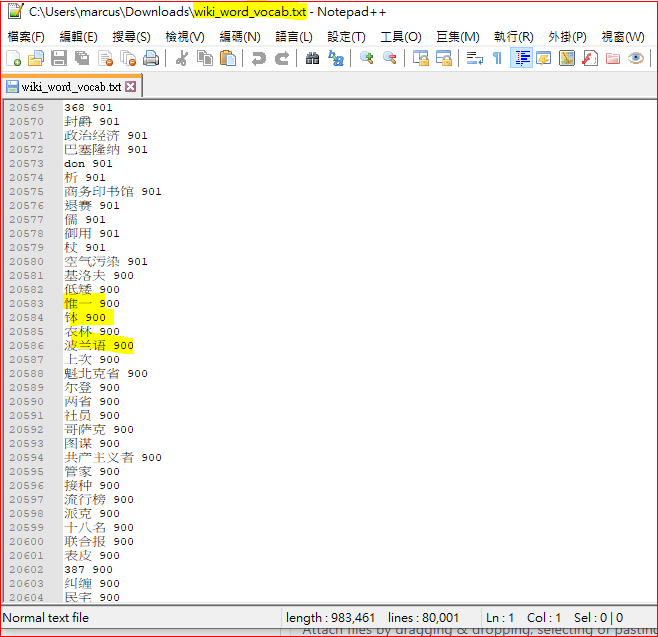

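Hello, I really appreciate your model and your deep understanding of Chinese NLP, especially the word-based BERT model. Every other BERT model available is character-based, so none of them can do word-to-word lookup. May I ask a question? I am an NLPer living in Hong Kong and I work with Traditional Chinese. How can I fine-tune bert_wiki_word_model.bin?

My plan is as follows:

1. Segment nearly 300,000 Traditional Chinese sentences.
2. Run your build_vocab.py on them.
3. Add the resulting Traditional Chinese vocab to Google's vocab.txt.

However, in the vocab.txt of your word-based BERT there is a weight next to each word, which confuses me. If I use Traditional Chinese, how should I pretrain bert_wiki_word_model.bin, and can I then use topn_words_dep.py? Thanks.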
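Here is a minimal sketch of the vocabulary-merge step described above. It assumes each line of the word-based `vocab.txt` is either a bare token or a token followed by whitespace and a numeric weight (for example a frequency count). Only the first field is kept. `read_vocab_words` and `merge_vocab` are hypothetical helper names.

Appending tokens changes the vocabulary size. The word-embedding matrix loaded from `bert_wiki_word_model.bin` would therefore also need one new row per added word before further pre-training. Editing the text file alone is not enough.

```python
from typing import Iterable, List


def read_vocab_words(lines: Iterable[str]) -> List[str]:
    """Keep the token column of a vocab file; drop any trailing weight column."""
    words = []
    for line in lines:
        fields = line.split()  # assumption: token and weight are whitespace-separated
        if fields:  # skip blank lines
            words.append(fields[0])
    return words


def merge_vocab(base: List[str], extra: Iterable[str]) -> List[str]:
    """Append unseen tokens to base, preserving base order so existing ids stay unchanged."""
    seen = set(base)
    merged = list(base)
    for word in extra:
        if word not in seen:
            seen.add(word)
            merged.append(word)
    return merged


if __name__ == "__main__":
    base = read_vocab_words(["[PAD]\n", "[UNK]\n", "的 123456\n"])
    extra = read_vocab_words(["的 900\n", "香港 87\n", "\n"])
    print(merge_vocab(base, extra))  # ['[PAD]', '[UNK]', '的', '香港']
```

Preserving the original order keeps every existing token id aligned with its pretrained embedding row. New tokens receive ids at the end, which is where the new embedding rows would be appended.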

May I ask how to resolve this? My call is `recommended_score = self.model.predict(user_code, np.array(list_item_codes), user_features=user_features, item_features=item_features, num_threads=multiprocessing.cpu_count())`, and the traceback shows: `--> 144 recommended_score = self.model.predict(user_code, 145 np.array(list_item_codes), 146 user_features=user_features,` `c:\Users\\Desktop\ENV\lib\site-packages\lightfm\lightfm.py in predict(self, user_ids,...`
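The traceback is cut off, so the actual exception is not visible here. Two frequent causes with `LightFM.predict` are:

- passing raw user or item codes instead of LightFM's internal integer ids, which are the row and column positions in the interaction matrix used for fitting;
- passing `user_features`/`item_features` matrices that were not built the same way as the ones used in training.

Here is a minimal sketch for the first cause, using only NumPy. It assumes you have a dict from raw item code to internal index, for example the item id mapping returned by `lightfm.data.Dataset.mapping()`. `build_predict_inputs` is a hypothetical helper, not part of LightFM.

```python
from typing import Dict, Hashable, Sequence, Tuple

import numpy as np


def build_predict_inputs(
    user_index: int,
    raw_item_codes: Sequence[Hashable],
    item_id_map: Dict[Hashable, int],
) -> Tuple[np.ndarray, np.ndarray]:
    """Return equal-length int32 arrays of internal user and item ids for predict()."""
    unknown = [code for code in raw_item_codes if code not in item_id_map]
    if unknown:
        # Codes never seen at fit time have no learned embedding to score with.
        raise KeyError(f"item codes not seen during fit: {unknown}")
    if user_index < 0:
        raise ValueError("internal ids must be non-negative")
    item_ids = np.array([item_id_map[c] for c in raw_item_codes], dtype=np.int32)
    user_ids = np.full(len(item_ids), user_index, dtype=np.int32)  # same user for every pair
    return user_ids, item_ids


if __name__ == "__main__":
    item_map = {"A100": 0, "B200": 1, "C300": 2}
    users, items = build_predict_inputs(5, ["C300", "A100"], item_map)
    print(users, items)  # [5 5] [2 0]
```

You can then pass the two arrays as the first two arguments of `self.model.predict(...)`, together with the same feature matrices used during fitting.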

Hi, thanks for your contribution. I am looking for an alternative way to deploy NER models in a production environment without a GPU. BERT-BiLSTM-CRF runs too slowly on my production server. If replacing...

The library is amazing; I can easily train a very comprehensive NER model with it. Just one simple question: after training the model, is there any particular way to deploy it...

How should I modify it? Could you give me a hint? Thanks.

Thanks for your contribution.

Hi, thanks for this amazing module; it helps a lot. One question: using this module always causes an out-of-memory error (my device has 16 GB of RAM), even my...

Same as the title. Thanks a lot.

From my server side:
`from backend.bert_test import NERServeHandler`
`from flask import Flask, request, jsonify`
`from service_streamer import ThreadedStreamer`
`app = Flask(__name__)`
`model = None`
`streamer = None`
`@app.route("/stream", methods=["POST"])`
`def...`
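`ThreadedStreamer` from service_streamer is meant to speed up a web endpoint by grouping concurrent requests into batches, so the model runs once per batch instead of once per request. This is also the main lever for the slow CPU-only NER deployment described above.

`MicroBatcher` is a toy that illustrates that idea with the standard library only. It is not service_streamer's implementation or API. `batch_size` and `max_latency` are just this sketch's parameters. The batch function is assumed to take a list of inputs and return a list with exactly one output per input.

```python
import queue
import threading
import time
from concurrent.futures import Future
from typing import Any, Callable, List


class MicroBatcher:
    """Toy dynamic batcher: groups single requests into one batch_fn call."""

    def __init__(self, batch_fn: Callable[[List[Any]], List[Any]],
                 batch_size: int = 8, max_latency: float = 0.05):
        self.batch_fn = batch_fn
        self.batch_size = batch_size
        self.max_latency = max_latency  # seconds to wait for more requests
        self._queue = queue.Queue()
        threading.Thread(target=self._loop, daemon=True).start()

    def submit(self, item: Any) -> Any:
        """Called from each request thread; blocks until this item's result is ready."""
        fut = Future()
        self._queue.put((item, fut))
        return fut.result()  # re-raises any exception set by the worker

    def _loop(self) -> None:
        while True:
            batch = [self._queue.get()]  # block until the first request arrives
            deadline = time.monotonic() + self.max_latency
            while len(batch) < self.batch_size:
                remaining = deadline - time.monotonic()
                if remaining <= 0:
                    break
                try:
                    batch.append(self._queue.get(timeout=remaining))
                except queue.Empty:
                    break
            inputs = [item for item, _ in batch]
            try:
                outputs = list(self.batch_fn(inputs))
                if len(outputs) != len(batch):
                    raise ValueError("batch_fn must return one output per input")
            except Exception as exc:
                for _, fut in batch:
                    fut.set_exception(exc)
                continue
            for (_, fut), out in zip(batch, outputs):
                fut.set_result(out)


if __name__ == "__main__":
    batcher = MicroBatcher(lambda texts: [t.upper() for t in texts])
    print(batcher.submit("hello"))  # HELLO
```

In a Flask handler, each request thread would call `submit` with its own input. A real NER `batch_fn` would pad the collected sentences and run them through the model in one forward pass.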