Traly


If you don't train in DDP mode (i.e., you don't launch training with `torch.distributed.launch`), you can refer to this issue: https://github.com/huawei-noah/Efficient-Computing/issues/68

Please check your output folder to make sure the checkpoint (ckpt) file is complete.

You can also run `tools/infer.py` to visualize the predicted bounding boxes.

This problem has been fixed.

Can you give me more details, such as your model config and data YAML? Does this affect the mIoU of the model?

> The model is gold-yolo-s and the dataset is COCO 2017. I now know why the cwd_loss is 0 (because I didn't use `--distill_feat`), but I still want to know why the cls_loss...
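For context on why `cwd_loss` reads 0: channel-wise distillation (CWD) compares teacher and student feature maps, so with feature distillation switched off there is nothing to compare. Below is a minimal pure-Python sketch following the channel-wise distillation formulation (per-channel softmax over spatial locations with temperature `tau`, KL divergence from teacher to student, scaled by `tau**2` and averaged over channels). It is not Gold-YOLO's implementation; `cwd_loss`, `tau`, and the `distill_feat` argument are illustrative assumptions.

```python
import math


def _log_softmax(values, tau):
    # Numerically stable log-softmax of values / tau over one channel's H*W locations.
    scaled = [v / tau for v in values]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_z for s in scaled]


def cwd_loss(student, teacher, tau=1.0, distill_feat=True):
    """Channel-wise distillation loss for a single feature map (sketch).

    student, teacher: lists of C channels, each a flat list of H*W activations.
    distill_feat is a hypothetical stand-in for the --distill_feat switch:
    when it is off, no feature term is computed and the loss stays 0.
    """
    if not distill_feat:
        return 0.0
    if len(student) != len(teacher):
        raise ValueError("student and teacher need the same number of channels")
    total = 0.0
    for s_chan, t_chan in zip(student, teacher):
        log_p_t = _log_softmax(t_chan, tau)  # teacher's spatial distribution
        log_p_s = _log_softmax(s_chan, tau)  # student's spatial distribution
        # KL(p_t || p_s) for this channel
        total += sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_t, log_p_s))
    # Scale by tau^2 and average over channels
    return (tau ** 2) * total / len(student)
```

With identical features, or with `distill_feat=False`, the sketch returns 0; any disagreement between the teacher's and student's spatial distributions makes it positive.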

This may be because the teacher checkpoint does not match the model, so the teacher generates incorrect labels. You can set `strict=True` in [load_state_dict](https://github.com/huawei-noah/Efficient-Computing/blob/master/Detection/Gold-YOLO/yolov6/utils/checkpoint.py#L19)...
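A quick way to see whether the teacher checkpoint actually fits the model is to compare parameter names and shapes before loading. The sketch below uses a hypothetical helper, `diff_checkpoint` (not part of Gold-YOLO), and assumes you have already extracted `{name: shape}` dicts from the model and the checkpoint, for example with `{k: tuple(v.shape) for k, v in model.state_dict().items()}`. Any non-empty list means the checkpoint does not line up with the model.

```python
def diff_checkpoint(model_shapes, ckpt_shapes):
    """Hypothetical helper: report where a checkpoint and a model disagree.

    Both arguments map parameter names to shape tuples.
    """
    # Parameters the model expects but the checkpoint does not provide
    missing = sorted(k for k in model_shapes if k not in ckpt_shapes)
    # Parameters in the checkpoint that the model has no slot for
    unexpected = sorted(k for k in ckpt_shapes if k not in model_shapes)
    # Same name on both sides, but a different tensor shape
    mismatched = sorted(
        k for k in model_shapes
        if k in ckpt_shapes and tuple(model_shapes[k]) != tuple(ckpt_shapes[k])
    )
    return {"missing": missing, "unexpected": unexpected, "shape_mismatch": mismatched}
```

Missing and unexpected keys are what `strict=True` reports; shape mismatches are listed separately because they indicate the checkpoint was trained with a different architecture or config.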

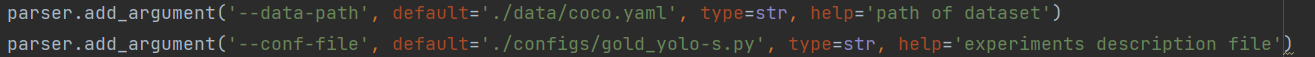

The current code supports this: change SyncBN to BN in the model config, and then you can start single-GPU training with the following command: `python tools/train.py --batch 32 --conf...`
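Editing the config file by hand is usually simplest. If you prefer to patch a loaded config instead, the sketch below swaps every `type='SyncBN'` entry for `type='BN'`. `replace_norm_type` is a hypothetical helper that assumes the config is built from plain dicts, lists, and tuples and that norm layers are selected through a `type` key; the `norm_cfg` key used in the test is likewise an assumption about your config.

```python
def replace_norm_type(cfg, old="SyncBN", new="BN"):
    """Return a copy of a nested config with every `type` equal to `old` set to `new`.

    Hypothetical helper; the input config is left unmodified.
    """
    if isinstance(cfg, dict):
        out = {}
        for key, value in cfg.items():
            if key == "type" and value == old:
                out[key] = new  # swap the norm layer type
            else:
                out[key] = replace_norm_type(value, old, new)  # recurse into nested values
        return out
    if isinstance(cfg, list):
        return [replace_norm_type(v, old, new) for v in cfg]
    if isinstance(cfg, tuple):
        return tuple(replace_norm_type(v, old, new) for v in cfg)
    return cfg  # scalars are returned unchanged
```

Returning a new config rather than mutating in place keeps the original (multi-GPU) settings available for comparison.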

Something may still not be configured correctly. Remove `--use_syncbn` from the training command, and change your model config like this:

```
# GoldYOLO-s model
use_checkpoint = False
model = dict(...
```