lmxhappy

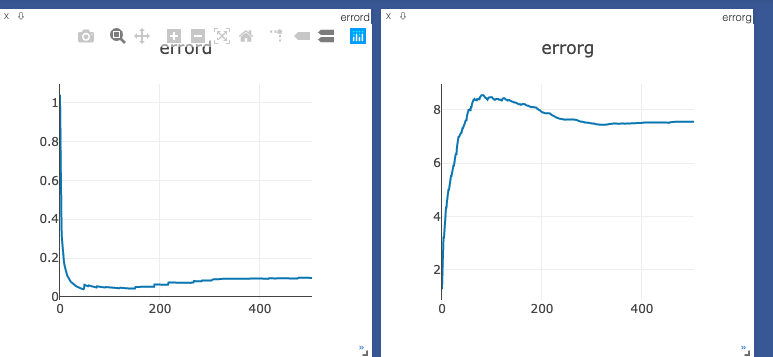

A question: why would the generator's loss keep increasing?

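The code shows that the discriminator is trained five times as often as the generator. Is this based on prior experience that the discriminator is harder to train?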
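To make the 5:1 ratio concrete, here is a minimal sketch of such an update schedule. The names `training_schedule` and `n_critic` are hypothetical and not taken from the repository; the sketch only counts update steps and does not train anything.

```python
def training_schedule(num_iterations, n_critic=5):
    """Yield "D" for a discriminator update and "G" for a generator update.

    Each outer iteration runs n_critic discriminator updates followed by
    a single generator update (hypothetical helper, for illustration only).
    """
    for _ in range(num_iterations):
        for _ in range(n_critic):
            yield "D"
        yield "G"


# Count the updates over three outer iterations.
steps = list(training_schedule(num_iterations=3))
d_steps = steps.count("D")
g_steps = steps.count("G")
print(d_steps, g_steps)
```

With this schedule the discriminator always receives `n_critic` times as many updates as the generator, which is the pattern the question refers to.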

Fix method name

There are so many papers about GANs. I am a newcomer and do not know which paper I should start reading. Could anyone give some suggestions? Thanks.

`best_eval_loss:",math.exp(best_eval_loss) if best_eval_loss

`all_weights['feature_bias'] = tf.Variable(tf.random_uniform([self.features_M, 1], 0.0, 0.0), name='feature_bias') # features_M * 1` The elements of `all_weights['feature_bias']` are all zeros, so in ` # _________out _________ Bilinear = tf.reduce_sum(self.FM, 1, keep_dims=True) #...
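The observation about the initializer can be checked without TensorFlow. This is a small sketch that uses Python's `random.uniform` as a stand-in for `tf.random_uniform` (an assumption made for illustration; `features_M = 4` is also a made-up value): sampling uniformly between equal lower and upper bounds can only return that bound.

```python
import random

features_M = 4  # hypothetical size, for illustration only

# Stand-in for tf.random_uniform([features_M, 1], 0.0, 0.0):
# with minval == maxval == 0.0 every sample is exactly 0.0.
feature_bias = [[random.uniform(0.0, 0.0)] for _ in range(features_M)]

all_zero = all(value == 0.0 for row in feature_bias for value in row)
print(feature_bias, all_zero)
```

This only describes the initial values. A `tf.Variable` is trainable by default, so the bias can still move away from zero during training.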

In DeepFM, each continuous feature value is multiplied by its corresponding embedding vector, but I do not see any such multiplication in your code. Can NFM not handle continuous features?
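For reference, the multiplication being asked about can be sketched in plain Python. The helper `scale_embedding` and the numbers are hypothetical, not taken from either repository: a categorical (one-hot) feature uses its embedding as-is, which is the same as multiplying by 1.0, while a continuous feature scales the embedding by its value.

```python
def scale_embedding(embedding, value):
    """Return the embedding vector scaled by the feature value."""
    return [value * e for e in embedding]


embedding = [0.5, -1.0, 2.0]  # made-up embedding vector for one feature field

# Categorical feature that is present: value is 1.0, embedding unchanged.
categorical = scale_embedding(embedding, 1.0)

# Continuous feature with value 0.3: embedding scaled element-wise.
continuous = scale_embedding(embedding, 0.3)

print(categorical, continuous)
```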

The same plots are used in 5.3.1 (feature extraction) and 5.3.2 (fine-tuning). I checked every point and found that they are very similar. I read the book and it told...

----------------------------------------------- [START 2019-10-29 19:04:31] ---------------------------------------------------
|------------ Train -------|----------- Valid ---------|----------Best Results---|------------|
mode iter epoch | acc loss f1_macro | acc loss f1_macro | loss f1_macro | time |
-------------------------------------------------------------------------------------------------------------------------|
Downloading:...