jesumyip

I guess the problem is in https://github.com/open-telemetry/opentelemetry-python/blob/6b9f389940ec0123d5dafc7cb400fc23c6f691c6/opentelemetry-sdk/src/opentelemetry/sdk/_logs/_internal/__init__.py#L474: it only does `body=record.getMessage()`, so it completely ignores any formatters that have been configured. So a possible fix would be to...
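To illustrate the difference described above, here is a minimal sketch using only the standard library `logging` module (no OpenTelemetry involved). The logger name, message, and format string are made up for the example. It shows that `record.getMessage()` only interpolates the arguments, while `Handler.format(record)` applies the configured formatter.

```python
import logging

# Build a record by hand so nothing is actually emitted anywhere.
# The name, path, and message are illustrative values only.
record = logging.LogRecord(
    name="demo",
    level=logging.INFO,
    pathname="demo.py",
    lineno=1,
    msg="user %s logged in",
    args=("alice",),
    exc_info=None,
)

# A bare handler with a formatter attached, standing in for any handler
# that has been configured with setFormatter().
handler = logging.Handler()
handler.setFormatter(logging.Formatter("[%(levelname)s] %(name)s: %(message)s"))

# getMessage() only merges msg with args; the formatter is not consulted.
raw_body = record.getMessage()

# format() runs the handler's formatter over the record.
formatted_body = handler.format(record)

print(raw_body)        # user alice logged in
print(formatted_body)  # [INFO] demo: user alice logged in
```

A handler that builds its log body from `getMessage()` alone would therefore export the first string, regardless of the formatter set on it.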

Small update: since I don't use args, this code:

```
msg = self.format(record)
record.msg = msg
self._logger.emit(self._translate(record))
```

should be changed to:

```
msg = self.format(record)
record.msg = msg
record.args...
```

Is there a fix for this?

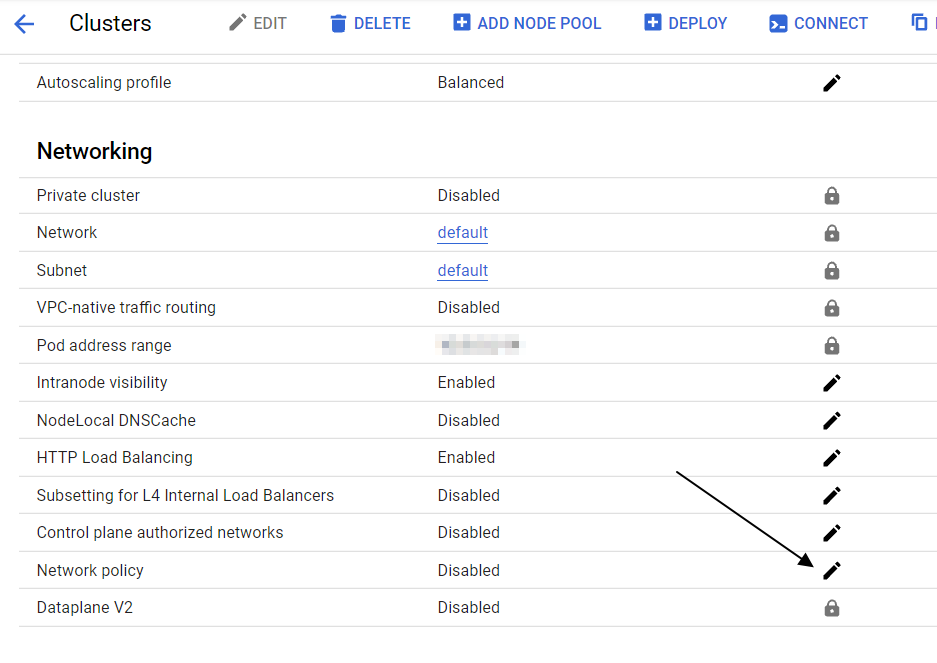

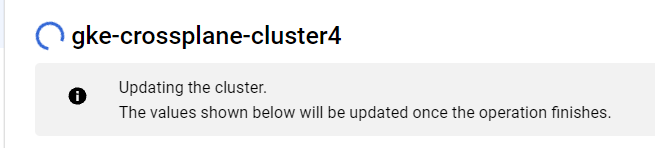

Repeated the test with **spec.forProvider.initialClusterVersion = 1.18** and **spec.forProvider.addonsConfig.networkPolicyConfig.disabled = true**:

```
apiVersion: container.gcp.crossplane.io/v1beta2
kind: Cluster
metadata:
  name: gke-crossplane-cluster4
spec:
  providerConfigRef:
    name: default
  forProvider:
    initialClusterVersion: "1.18"
    networkConfig:
      enableIntraNodeVisibility: true
    loggingService:...
```

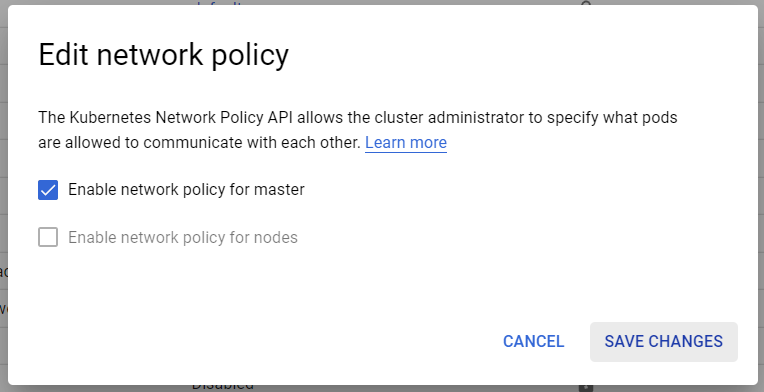

Enabling the network policy using GCP's web GUI also produces the same result. I have filed an issue report with Google.

How does this solve the problem? In Vault, I can specify `/auth/kubernetes` as one auth path. I can also specify `/auth/myauth` as another. Both use kubernetes as the auth scheme....

Never mind. I have decided to abandon Spark. Found something much better.

@gibchikafa Bytewax.