Hubert Lu


Hi @jekbradbury, I got the IPs for the Cloud TPU v2-32 on GCP as follows:

```
10.230.196.132
10.230.196.130
10.230.196.133
10.230.196.131
```

Then, I ran the command `python3 file.py`...

Thank you for the troubleshooting. Following the steps you mentioned, I can now use `pmap` collectively across all of my TPU hosts.
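A minimal sketch of one way to check that `pmap` collectives really span every host: each host feeds a `1` per local device, and `psum` adds them over the named axis, so on a correctly configured slice every host should see the global device count (32 on a v2-32). This uses current JAX APIs and is not necessarily the setup used in this thread; the function name is hypothetical, and on a multi-host TPU slice recent JAX versions typically expect `jax.distributed.initialize()` to be called on every host first (an assumption about your JAX version).

```python
import jax
import jax.numpy as jnp

# Assumption: on a multi-host slice, jax.distributed.initialize() has already
# been called on every host. It is omitted here so the sketch also runs on a
# single machine.


def global_device_count_via_psum() -> int:
    """Hypothetical helper: sum a 1 from every device along the pmap axis.

    Each host passes one element per *local* device, but psum reduces over
    the whole named axis, which spans every device in the job when the same
    program runs on all hosts.
    """
    n_local = jax.local_device_count()
    summed = jax.pmap(lambda x: jax.lax.psum(x, axis_name="i"),
                      axis_name="i")(jnp.ones(n_local))
    # Every local replica holds the same reduced value.
    return int(summed[0])


if __name__ == "__main__":
    print(global_device_count_via_psum(), jax.device_count())
```

If the printed count on each host equals `jax.device_count()` rather than `jax.local_device_count()`, the collective is crossing host boundaries.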

I haven't gotten `ppermute` working yet, but I am testing it for my simulation. I will share some results, or reopen this issue later if I run into problems....

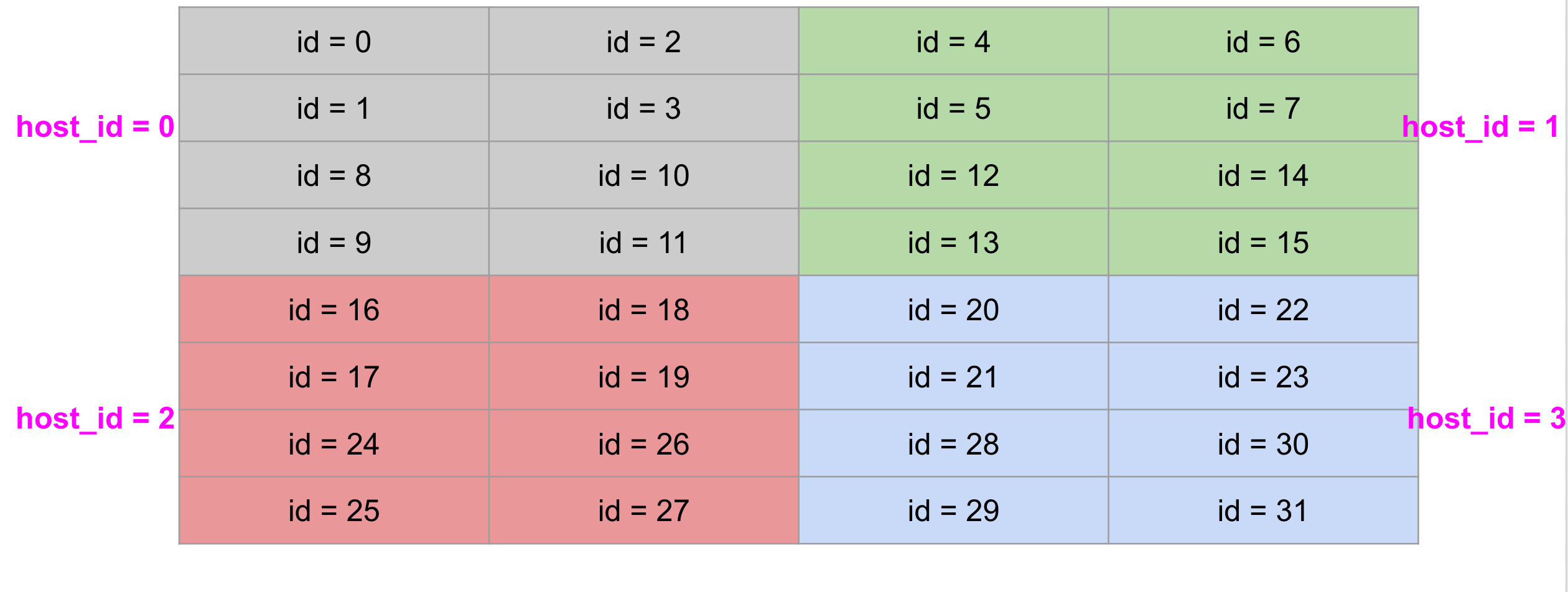

I created a simple test for `ppermute` with multi-host `pmap` on a Cloud TPU v2-32. The layout of the TPU cores is as follows: The permutation operation sends data...
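The exact permutation in the test above is cut off, so the following sketch assumes a one-step ring shift, where each device sends its shard to the next device index and the last one wraps around to device 0. `ring_shift` is a hypothetical helper, not code from this thread. The `perm` pairs use global axis indices (0 to `jax.device_count() - 1`), and on a multi-host slice every host would call it with its own local shard of shape `(jax.local_device_count(), ...)`.

```python
import jax
import jax.numpy as jnp
import numpy as np


def ring_shift(x, shift=1):
    """Hypothetical helper: device `src` sends its shard to `(src + shift) % n`."""
    n = jax.device_count()  # global axis size across all hosts
    # (source, destination) pairs; every source and destination appears once.
    perm = [(src, (src + shift) % n) for src in range(n)]
    f = jax.pmap(lambda v: jax.lax.ppermute(v, axis_name="i", perm=perm),
                 axis_name="i")
    return f(x)


if __name__ == "__main__":
    n_local = jax.local_device_count()
    x = jnp.arange(n_local, dtype=jnp.float32)
    print(ring_shift(x))
```

On a single machine, device `j` receives the shard from device `j - shift`, so the result equals `np.roll(x, shift)` along the device axis.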

Thanks for the reply. I also tried passing all 31 permutations to `ppermute` and rewriting the `_ppermute_translation_rule` function in **lax_parallel.py** from

```
def _ppermute_translation_rule(c, x, replica_groups, perm, platform=None):
  group_size =...
```
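With 32 cores on a v2-32 there are exactly 31 non-identity cyclic shifts, which is one plausible reading of "all 31 permutations"; since the post is truncated, that interpretation is an assumption. The pure-Python sketch below (all names hypothetical) generates those shifts as `(source, destination)` pairs, checks the rule that `ppermute` accepts each source and each destination at most once per call, and models the documented behavior that devices which are not a destination receive zeros. Under that rule a single permutation cannot carry all 31 shifts at once, which is why the shifts are listed separately.

```python
from typing import List, Sequence, Tuple

Perm = List[Tuple[int, int]]


def shift_permutations(n: int) -> List[Perm]:
    """All n - 1 non-identity cyclic shifts of n devices as (src, dst) pairs."""
    return [[(src, (src + k) % n) for src in range(n)] for k in range(1, n)]


def is_valid_ppermute_perm(perm: Perm, n: int) -> bool:
    """Each source and each destination may appear at most once, all in range."""
    srcs = [s for s, _ in perm]
    dsts = [d for _, d in perm]
    in_range = all(0 <= i < n for i in srcs + dsts)
    return in_range and len(set(srcs)) == len(srcs) and len(set(dsts)) == len(dsts)


def simulate_ppermute(values: Sequence[float], perm: Perm) -> List[float]:
    """Host-side model of ppermute: out[dst] = values[src]; non-destinations get 0."""
    out = [0.0] * len(values)
    for src, dst in perm:
        out[dst] = values[src]
    return out


if __name__ == "__main__":
    perms = shift_permutations(32)
    print(len(perms), all(is_valid_ppermute_perm(p, 32) for p in perms))
    print(simulate_ppermute([float(i) for i in range(32)], perms[0])[:4])
```

Running each shift as its own `ppermute` call keeps every call within the one-send, one-receive-per-device rule.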

Hi @sabreshao, great work! I am wondering whether you could add `multimodal-gen-test` (https://github.com/sgl-project/sglang/blob/main/.github/workflows/pr-test.yml#L319-L343) to the AMD CI (https://github.com/sgl-project/sglang/blob/main/.github/workflows/pr-test-amd.yml).

/tag-and-rerun-ci

@saienduri, could you please help set up the GPU runners?

/tag-and-rerun-ci

/tag-and-rerun-ci 11/26