elcotek


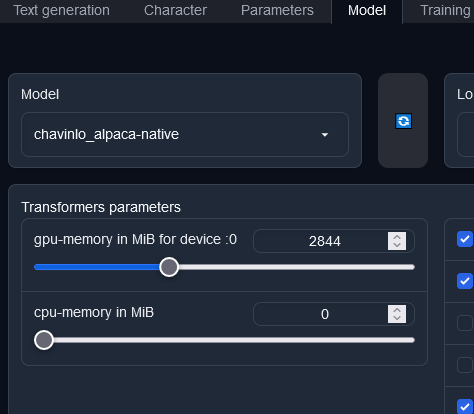

Additional GPU memory must be kept free in VRAM as a buffer for text generation; otherwise the error mentioned above occurs. Use this in your command line...

Oobabooga could possibly adapt its VRAM usage dynamically to the length of the text being generated, but I don't know whether VRAM allocation can be made dynamic in that way.
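The VRAM needed during generation grows with the length of the text, largely because the transformer's key/value cache stores one key and one value vector per layer for every token. A rough estimate can be sketched as below. This is an illustrative assumption, not Oobabooga's own accounting: the model dimensions are hypothetical values resembling a 7B LLaMA-style model in fp16, and activations, grouped-query attention, and framework overhead are ignored.

```python
def kv_cache_bytes(n_layers: int, n_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1,
                   bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size for a decoder-only transformer.

    Each layer keeps a key and a value tensor (hence the factor 2) of
    shape (batch_size, n_heads, seq_len, head_dim). bytes_per_elem=2
    assumes fp16/bf16 storage.
    """
    return 2 * n_layers * n_heads * head_dim * seq_len * batch_size * bytes_per_elem


if __name__ == "__main__":
    # Hypothetical 7B-style dimensions: 32 layers, 32 heads, head_dim 128.
    for tokens in (512, 1024, 2048):
        gib = kv_cache_bytes(32, 32, 128, tokens) / 2**30
        print(f"{tokens:5d} tokens -> {gib:.2f} GiB of KV cache")
```

Because the estimate is linear in `seq_len`, doubling the context doubles this part of the buffer, which is why a fixed amount of free VRAM has to be reserved for the longest text you intend to generate.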

You can also set this in the interface under Model settings.

Then use the command line as I said before.

OK, I found out that, for now, I have to load the model manually in the Model settings after the server has started.


All right, I'm using the --model option for now. This solved my problem.

I had already downloaded JSON templates from huggingface.co to test text-to-text, but I don't understand the exact procedure for training. There was always a message for me...

Same for me; it is independent of GPU VRAM usage. After a while, the Cai bot starts to lose its context for the actual requests. Also, it seems...

The reason is simple: your VRAM is overloaded, and the nodes then access non-existent memory due to a bug. You need to use a smaller resolution for...