Marc

Same here. I have not found a clear pattern, although I can confirm that every time it has happened to me, it was with DDIM.

> Card 2060 doesn't work after update ([f894dd5](https://github.com/AUTOMATIC1111/stable-diffusion-webui/commit/f894dd552f68bea27476f1f360ab8e79f3a65b4f)), with `--precision full --no-half --medvram`

RTX 2060 with 6GB VRAM here, try with: `--medvram --opt-split-attention --precision autocast` Note: not sure if...

https://github.com/gradio-app/gradio/issues/735#issuecomment-1790199534 https://www.gradio.app/docs/image

`sources`: `list[Literal[('upload', 'webcam', 'clipboard')]] | None`, default: `None`. List of sources for the image. "upload" creates a box where user can drop an image file, "webcam" allows user to...

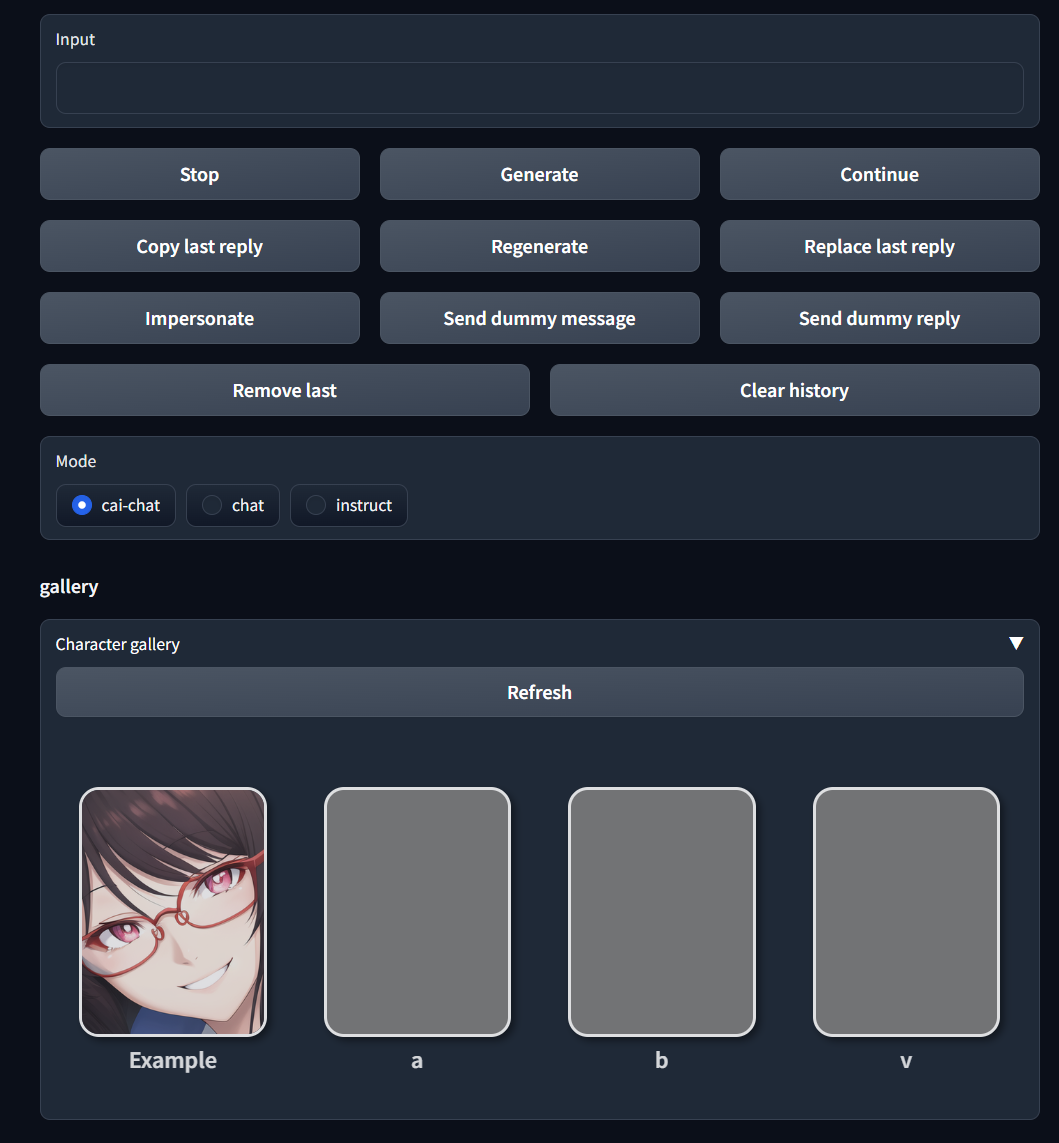

Sorry but I am completely unable to see those options :S

OK, with the peft package set to 0.2.0, it seems to work :)

I'm able to do "things" with raw (short) texts, but what I get is almost nothing :( https://github.com/oobabooga/text-generation-webui/discussions/1199#discussioncomment-5622935 Example: raw text (copy-pasted) from here: https://en.wikipedia.org/wiki/Louis_H._Bean Training ok (any warning)...

Fixed the issue with pydantic==1.10.8
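In case it helps anyone checking whether their environment actually matches the pins mentioned in these comments (pydantic 1.10.8, peft 0.2.0), here is a minimal sketch using the standard library's `importlib.metadata`. The helper name `check_pin` is hypothetical, just for illustration, and it only does an exact string comparison of versions.

```python
from importlib.metadata import PackageNotFoundError, version


def check_pin(package: str, wanted: str) -> str:
    """Return 'ok', 'mismatch', or 'missing' for a pinned package version.

    Hypothetical helper: compares the installed version string exactly
    against the wanted one, with no range or compatibility logic.
    """
    try:
        installed = version(package)
    except PackageNotFoundError:
        return "missing"
    return "ok" if installed == wanted else "mismatch"


if __name__ == "__main__":
    # Pins taken from the comments in this thread; adjust to your setup.
    for name, pin in {"pydantic": "1.10.8", "peft": "0.2.0"}.items():
        print(f"{name}=={pin}: {check_pin(name, pin)}")
```

If a package reports `mismatch`, reinstalling it with the exact pin (for example `pip install pydantic==1.10.8`) should bring the environment in line, assuming nothing else in the environment requires a different version.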

Same problem, same workaround, now working.

Trying with the bitsandbytes version from https://github.com/oobabooga/text-generation-webui I'm getting this:

```
(minigpt4) J:\gpt\MiniGPT-4>python demo.py --cfg-path eval_configs/minigpt4_eval.yaml
Initializing Chat
Loading VIT
Loading VIT Done
Loading Q-Former
Loading Q-Former Done
Loading LLAMA
===================================BUG...
```

Still searching and remixing :S I don't know why it's saying that, bearing in mind that https://github.com/oobabooga/text-generation-webui is working with CUDA ¬¬'