YZW-explorer

Does anyone know where the channel calculation is implemented in the PyTorch source code?

Dear author, I would like to confirm one thing with you: when computing the convolution, does a Brevitas quantization layer use the dequantized values (floating-point numbers)? Shouldn't...
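
The question turns on a standard identity. With symmetric quantization and a zero-point of 0, convolving the dequantized floats gives the same result as convolving the integer codes and rescaling once by the product of the two scales. The sketch below checks this numerically with a plain-Python 1D convolution. The scales, the 8-bit width and the helper names (`quantize`, `conv1d`) are illustrative assumptions, not Brevitas internals.

```python
def quantize(values, scale, bits=8):
    """Symmetric per-tensor quantization to signed integers (zero-point 0, range [-qmax, qmax])."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]

def conv1d(x, w):
    """'Valid' 1D cross-correlation (stride 1, no padding), the operation PyTorch's conv layers compute."""
    k = len(w)
    return [sum(x[i + j] * w[j] for j in range(k)) for i in range(len(x) - k + 1)]

s_x, s_w = 0.05, 0.01  # hypothetical activation and weight scales
x = [0.31, -0.72, 1.05, 0.4, -0.18]
w = [0.12, -0.05, 0.33]
q_x, q_w = quantize(x, s_x), quantize(w, s_w)

# Path 1 (fake quantization): convolve the dequantized floating-point values.
float_out = conv1d([q * s_x for q in q_x], [q * s_w for q in q_w])

# Path 2: integer convolution, then one rescale by s_x * s_w.
int_acc = conv1d(q_x, q_w)
int_out = [a * s_x * s_w for a in int_acc]

for a, b in zip(float_out, int_out):
    assert abs(a - b) < 1e-9  # identical up to floating-point rounding
```

This only holds while the integer accumulator does not overflow. Once a non-zero zero-point is involved, extra correction terms appear, so the simple rescale above is specific to the symmetric case.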

Hello author, I am still trying to use PyTorch to simulate Brevitas's quantize-then-dequantize forward inference process. I have the following two questions: 1....
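
A minimal sketch of one quantize→dequantize ("fake-quant") step in plain Python follows. It assumes uniform affine quantization to unsigned integers, with the scale and zero-point derived from an observed min/max. Brevitas's own quantizers may compute scales differently (for example, learned or per-channel), so `affine_params` and `fake_quant` are hypothetical helpers for illustration only.

```python
def affine_params(x_min, x_max, bits=8):
    """Scale and integer zero-point mapping [x_min, x_max] onto [0, 2**bits - 1]."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # the range must contain 0.0
    if x_max == x_min:
        return 1.0, 0
    qmax = 2 ** bits - 1
    scale = (x_max - x_min) / qmax
    zero_point = round(-x_min / scale)
    return scale, zero_point

def fake_quant(x, scale, zero_point, bits=8):
    """Quantize to an integer, clamp to the representable range, then dequantize."""
    qmax = 2 ** bits - 1
    q = max(0, min(qmax, round(x / scale) + zero_point))
    return (q - zero_point) * scale

data = [-0.8, -0.1, 0.0, 0.37, 1.2]
scale, zp = affine_params(min(data), max(data))
recon = [fake_quant(v, scale, zp) for v in data]
```

Because the zero-point is an integer, 0.0 is reconstructed exactly, which matters for zero padding. In-range values come back within half a quantization step of the original.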

Hello author, I am trying to run the code in https://github.com/Xilinx/finn/blob/master/notebooks/end2end_example/cybersecurity/2-export-to-finn-and-verify.ipynb and would like to ask which version of FINN it uses. My .onnx file was exported with ONNX version 1.8.1.

How can I quantize my model?

I want to deploy a 4-bit quantized model on the PYNQ board. I saw that the MobileNet-v1 example is quantized to 4 bits, but I saw it...
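
For context on what 4-bit weight quantization involves, here is a hedged sketch of symmetric, per-output-channel 4-bit quantization. The weight tensor is given as nested lists with one row per output channel, and integers are restricted to the narrow range [-7, 7]. Whether the MobileNet-v1 example uses per-channel scales or a narrow range is an assumption here, not something stated above. `quantize_per_channel` is a hypothetical helper, not a Brevitas or FINN API.

```python
def quantize_per_channel(weights, bits=4):
    """Return (integer codes, scales) with one symmetric scale per output channel (row)."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4 bits: narrow signed range [-7, 7]
    codes, scales = [], []
    for row in weights:
        abs_max = max(abs(w) for w in row)
        scale = abs_max / qmax if abs_max > 0 else 1.0
        codes.append([max(-qmax, min(qmax, round(w / scale))) for w in row])
        scales.append(scale)
    return codes, scales

weights = [
    [0.50, -0.30, 0.10],    # output channel 0: large weights
    [0.02, -0.012, 0.005],  # output channel 1: small weights
]
codes, scales = quantize_per_channel(weights)
```

The per-channel scale lets the small-magnitude channel use the full [-7, 7] range. With a single per-tensor scale of 0.5 / 7, every weight in channel 1 would round to 0.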

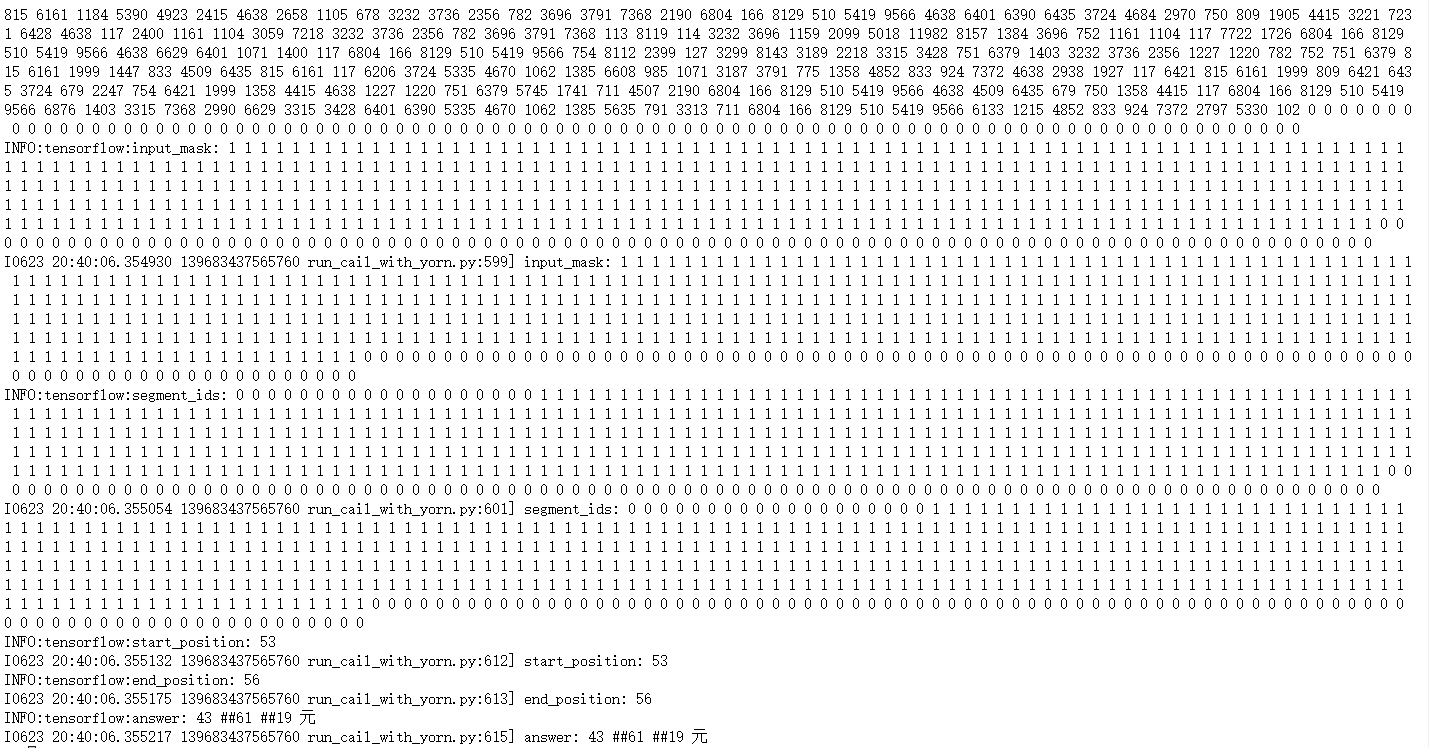

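Hello author, I'm glad to be using your code. I am trying to get it running, but when I run it the terminal prints output non-stop. Could you tell me why? I would be very grateful!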

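Hello everyone! How many pre-training epochs should be used? Also, how can I tell from the three values (generator loss, discriminator loss, and content loss) whether my model is training correctly? My data is shown below. Many thanks! Pre-training start! [1/50] - time: 340.47, Recon loss: 20.638 [2/50] - time: 337.64, Recon loss: 16.235 [3/50] - time: 337.67, Recon loss: 14.199 [4/50] - time: 337.59, Recon loss:...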
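
One simple sanity check during pre-training is whether the reconstruction loss keeps falling. The sketch below extracts `(epoch, Recon loss)` pairs from log lines in the format shown above and tests whether the loss is strictly decreasing. It uses only the three complete epochs shown, since the rest of the log is elided. `parse_recon_losses` is a hypothetical helper, and the pattern only matches pre-training lines, because the format of the later generator/discriminator/content-loss lines is not shown.

```python
import re

# Matches entries such as "[1/50] - time: 340.47, Recon loss: 20.638"
LOG_PATTERN = re.compile(r"\[(\d+)/(\d+)\] - time: ([\d.]+), Recon loss: ([\d.]+)")

def parse_recon_losses(log_text):
    """Return (epoch, recon_loss) pairs found in a pre-training log."""
    return [(int(m.group(1)), float(m.group(4))) for m in LOG_PATTERN.finditer(log_text)]

log = ("Pre-training start! [1/50] - time: 340.47, Recon loss: 20.638 "
       "[2/50] - time: 337.64, Recon loss: 16.235 "
       "[3/50] - time: 337.67, Recon loss: 14.199")
losses = parse_recon_losses(log)
decreasing = all(b < a for (_, a), (_, b) in zip(losses, losses[1:]))
print(losses, "decreasing:", decreasing)
```

A falling reconstruction loss only suggests that pre-training is making progress. It says nothing on its own about the later adversarial phase.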

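### PR types
New features
### PR changes
APIs
### Description
PaddleNLP design:
llm/fp8quant.py defines the FP8 quantization logic and registers FP8UniformObserver in QuantConfig.
llm/fp8finetune_generation.py calls the quantization logic in llm/fp8quant.py to carry out the full quantization process.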

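Description: defines a new FP8 observer; currently only the e4m3 format is supported. Function: records the abs_max of the tensor being quantized and computes the scale from abs_max and the representable range of the FP8 format.
PaddleSlim design:
paddleslim/quant/observers/fp8uniform.py defines the FP8UniformObserverLayer class. It records the abs_max of the tensor being quantized, sets the correct quantization range for the FP8 type selected by the user, and finally computes the scale that FP8LinearQuanterDequanter uses for quantization and dequantization.
paddleslim/quant/observers/__init__.py adds FP8UniformObserver to __init__ so it can be imported externally.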
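
The observer described above can be illustrated with a plain-Python sketch of abs_max → scale → FP8 E4M3 fake quantization. It assumes scale = abs_max / 448, where 448 is the largest finite E4M3 value in the OCP FP8 format. It also assumes round-to-nearest-even with saturation at ±448. The PR text does not say which scale convention, rounding mode or saturation behavior PaddleSlim uses. `abs_max_scale`, `round_to_e4m3` and `fp8_quant_dequant` are hypothetical names, not PaddleSlim's API.

```python
import math

E4M3_MAX = 448.0            # largest finite E4M3 value (OCP FP8 format)
E4M3_MIN_NORMAL = 2.0 ** -6  # smallest normal E4M3 magnitude (exponent bias 7)
MANTISSA_BITS = 3

def abs_max_scale(values):
    """Observer step (assumed convention): map the observed abs_max onto E4M3_MAX."""
    abs_max = max(abs(v) for v in values)
    return abs_max / E4M3_MAX if abs_max > 0 else 1.0

def round_to_e4m3(v):
    """Round a finite float to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if v == 0 or math.isnan(v):
        return v
    a = abs(v)
    if a < E4M3_MIN_NORMAL:
        step = 2.0 ** (-6 - MANTISSA_BITS)  # subnormal spacing: 2**-9
    else:
        _, e = math.frexp(a)  # a = m * 2**e with 0.5 <= m < 1
        step = math.ldexp(1.0, e - 1 - MANTISSA_BITS)  # spacing within this binade
    r = round(a / step) * step  # Python's round() breaks ties to even
    return math.copysign(min(r, E4M3_MAX), v)

def fp8_quant_dequant(values):
    """Scale into the E4M3 range, round to E4M3, then scale back."""
    scale = abs_max_scale(values)
    return [round_to_e4m3(v / scale) * scale for v in values]
```

Because the scale maps abs_max exactly onto 448, the largest-magnitude element survives the round trip. Smaller values lose precision according to E4M3's 3-bit mantissa.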