ShiMinghao0208

Also, how should I deal with these errors?

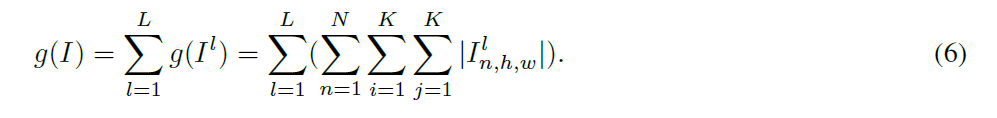

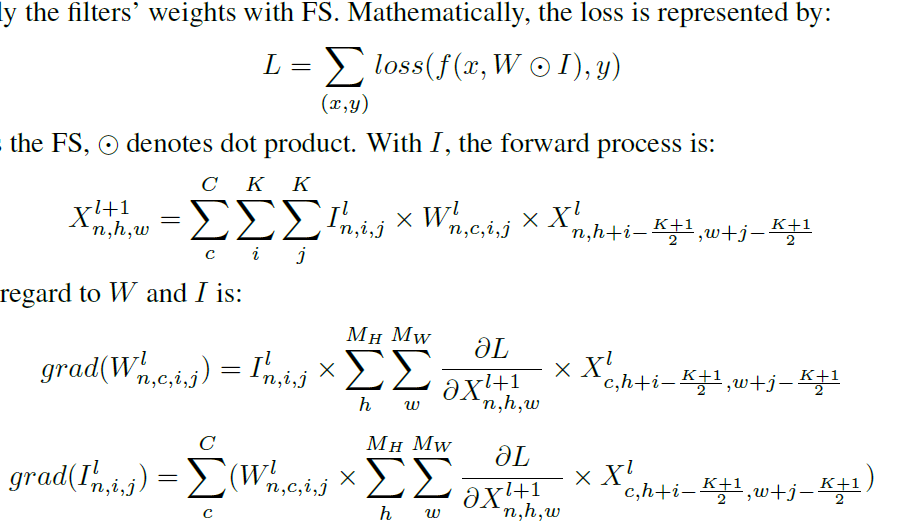

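OK, thank you very much. Another question: where in the code is the l1 norm g(I) from the paper implemented? I skimmed the code and could not match it up.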

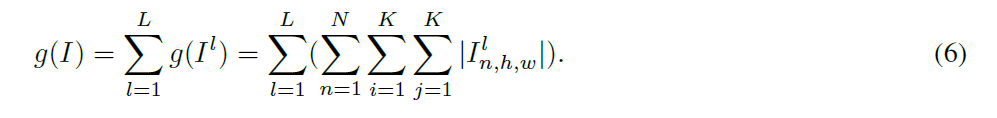

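> Thanks for your interest.
> In short, we use FS to learn the filter shape and use the l1 norm to sparsify FS; when an FS entry is zero, the corresponding stripe of parameters can be pruned.
> So the l1 norm is a norm penalty; you can use any penalty function to sparsify FS.

One more question: are the subscripts h, w in the paper's expression for g(I) a typo? The preceding summation is indexed by i, j.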
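As a rough illustration of the quoted explanation, the sketch below treats FS as one k×k mask per filter, computes its l1 norm, and lists the stripes whose FS entry is (near) zero and could therefore be pruned. The names `l1_penalty`, `prunable_stripes`, the threshold `eps`, and the sample values are hypothetical and not taken from the repository.

```python
def l1_penalty(fs):
    """l1 norm of FS: the sum of absolute values over all entries."""
    return sum(abs(v) for mask in fs for row in mask for v in row)


def prunable_stripes(fs, eps=1e-8):
    """Return (filter, row, col) indices whose FS entry is effectively zero.

    fs is a nested list shaped [num_filters][k][k]. Each entry is assumed
    to scale one stripe of that filter, so a zero entry means the stripe
    contributes nothing and can be removed.
    """
    return [
        (n, i, j)
        for n, mask in enumerate(fs)
        for i, row in enumerate(mask)
        for j, value in enumerate(row)
        if abs(value) <= eps
    ]


# Made-up FS values for two 3x3 filters.
fs = [
    [[0.0, 0.7, 0.0], [0.2, 1.1, 0.3], [0.0, 0.5, 0.0]],  # filter 0
    [[0.4, 0.0, 0.0], [0.0, 0.9, 0.0], [0.0, 0.0, 0.6]],  # filter 1
]
penalty = l1_penalty(fs)
pruned = prunable_stripes(fs)
```

Any other sparsity-inducing penalty could replace `l1_penalty` here, as the quoted reply notes; only the zero test in `prunable_stripes` matters for pruning.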

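> > OK, thank you very much. Another question: where in the code is the l1 norm g(I) from the paper implemented? I skimmed the code and could not match it up.
>
> https://github.com/fxmeng/Pruning-Filter-in-Filter/blob/a41cdcf772a359ed00b1fe21a07adc1628e46c4e/models/stripe.py#L58

Oh, I see. So the update is computed directly here, which means g(I) no longer needs to be added to the loss, right?

Also, the typo I meant in the paper does not seem related to the code... I mean that earlier in the paper everything uses i, j, including the summation symbol. I am not sure whether I am misunderstanding something. Sorry for bothering you so much.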
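To make the point in this reply concrete: adding an l1 penalty λ·Σ|FS| to the loss contributes λ·sign(FS) to the gradient of each nonzero FS entry, so the same effect can be had by adding that term to the gradient directly and leaving the loss unchanged. The sketch below checks this numerically in plain Python. `task_loss`, `lam`, and the values are made up for illustration, and whether the linked line in `stripe.py` does exactly this is an assumption.

```python
def sign(x):
    """Return -1, 0, or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def task_loss(fs):
    # Hypothetical stand-in for the real training loss.
    return sum((v - 1.0) ** 2 for v in fs)


def task_grad(fs):
    # Analytic gradient of task_loss.
    return [2.0 * (v - 1.0) for v in fs]


def penalized_loss(fs, lam):
    # Variant A: the l1 penalty is part of the loss.
    return task_loss(fs) + lam * sum(abs(v) for v in fs)


def grad_with_l1(fs, lam):
    # Variant B: the loss is untouched; the l1 subgradient
    # lam * sign(v) is added to the gradient directly.
    return [g + lam * sign(v) for g, v in zip(task_grad(fs), fs)]


def numeric_grad(f, fs, h=1e-6):
    # Central finite differences, used only to check variant A.
    grads = []
    for k in range(len(fs)):
        up = fs[:k] + [fs[k] + h] + fs[k + 1:]
        down = fs[:k] + [fs[k] - h] + fs[k + 1:]
        grads.append((f(up) - f(down)) / (2 * h))
    return grads


fs = [0.5, -0.3, 1.2]  # flattened, made-up FS entries (all nonzero)
lam = 0.1
direct = grad_with_l1(fs, lam)
numeric = numeric_grad(lambda x: penalized_loss(x, lam), fs)
```

Both variants give the same gradient for nonzero entries, which is why a direct gradient update can stand in for an explicit g(I) term in the loss.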

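> > > OK, thank you very much. Another question: where in the code is the l1 norm g(I) from the paper implemented? I skimmed the code and could not match it up.
> >
> > https://github.com/fxmeng/Pruning-Filter-in-Filter/blob/a41cdcf772a359ed00b1fe21a07adc1628e46c4e/models/stripe.py#L58
>
> Oh, I see. So the update is computed directly here, which means g(I) no longer needs to be added to the loss, right?
>
> Also, the typo I meant in the paper does not seem related to the code... I mean that earlier in the paper everything uses i, j, including the summation symbol
>
>...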

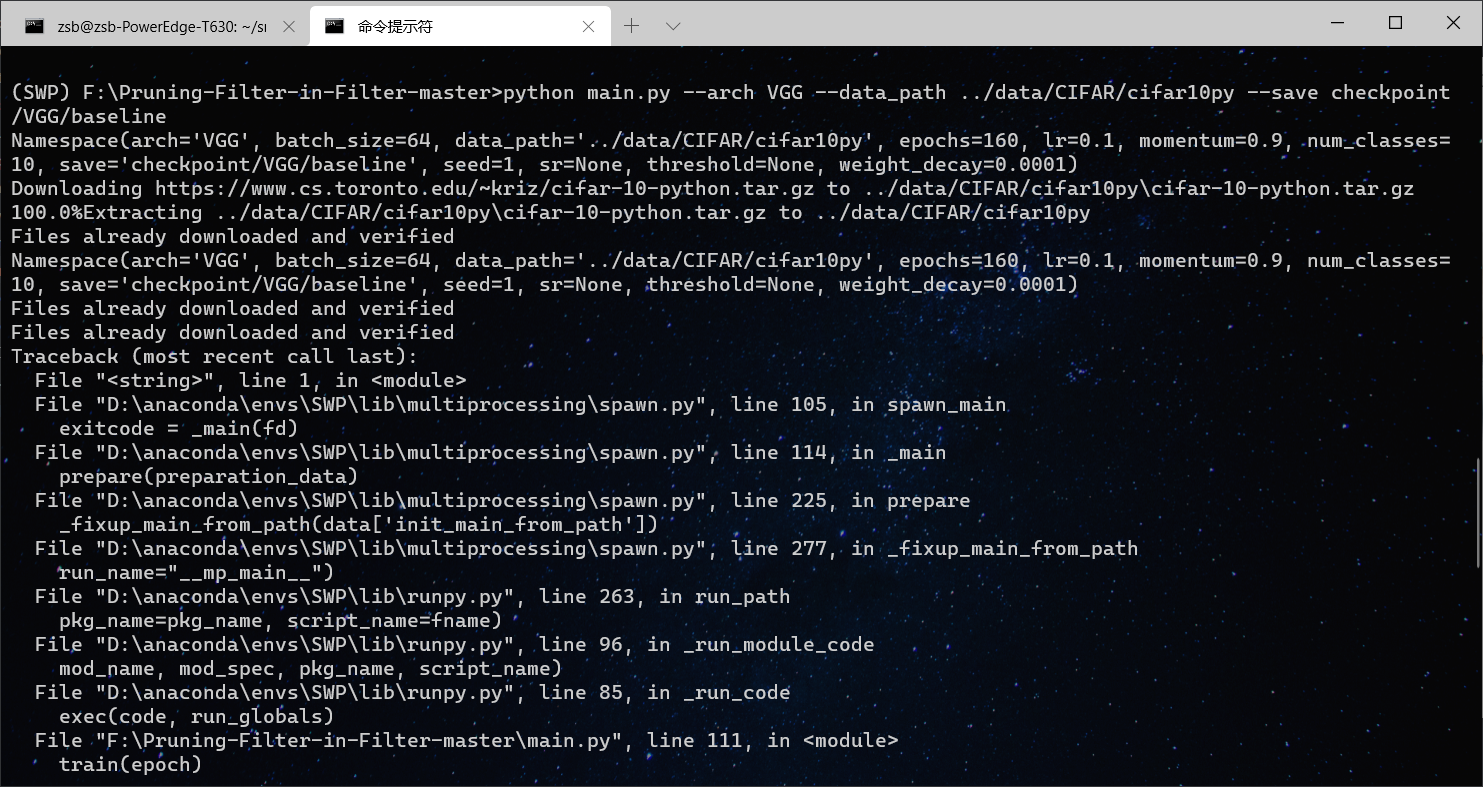

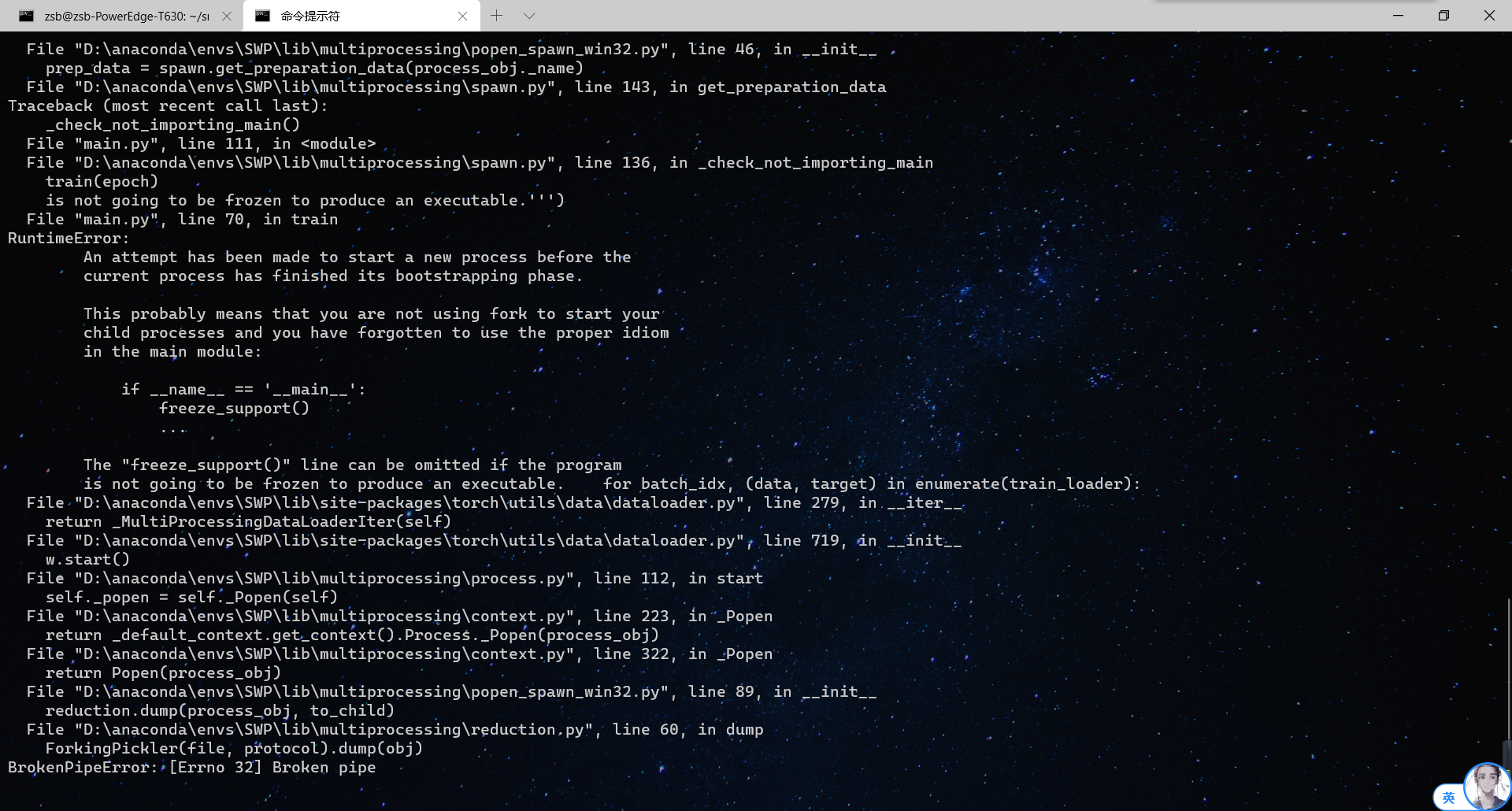

> Your code should be out-of-date. Could you pull the master branch?

Thank you, but my problem is a little weird. Please have a look at my newly uploaded pictures...

> It seems you have multiple versions installed.
> Could you try `pip uninstall -y torch-encoding` multiple times to uninstall all old versions and reinstall the master branch?

I can't...
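One way to check the "multiple versions installed" suggestion before uninstalling is to list every copy of the package that the current interpreter can see. This is only a sketch using the standard-library `importlib.metadata`; the helper name `installed_copies` is hypothetical, and it only inspects the environment of the Python that runs it.

```python
from importlib import metadata


def installed_copies(dist_name):
    """Return the versions of every installed distribution matching dist_name.

    More than one entry suggests stale copies are still on sys.path.
    """
    wanted = dist_name.lower().replace("_", "-")
    found = []
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name and name.lower().replace("_", "-") == wanted:
            found.append(dist.version)
    return found


copies = installed_copies("torch-encoding")
print(copies)
```

If the printed list has more than one entry, repeating the uninstall until it is empty and then reinstalling matches the advice in the quoted reply.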

> That's weird. Try reinstalling the Anaconda environment?

Alright, I will give it a try.

> I have solved this problem by [link](https://github.com/kijai/ComfyUI-SUPIR/issues/143) `pip install open-clip-torch==2.24.0`

This bug was driving me mad while I was running the SDXL source code. Thanks!!!