One-punch24

Hi, it seems to have been implemented in [modeling.py](https://github.com/google-research/adapter-bert/blob/1a31fc6e92b1b89a6530f48eb0f9e1f04cc4b750/modeling.py#L321). I guess it would work, since the projection has relatively few parameters.

> Bazel test failed, as shown in the terminal log below. Please see the attached screenshot as well.
>
> FAIL: //mesh_renderer:mesh_renderer_test (see /home/preksh/.cache/bazel/_bazel_preksh/887e388b830f4415bdb0c32c0787f49a/execroot/tf_mesh_renderer/bazel-out/k8-fastbuild/testlogs/mesh_renderer/mesh_renderer_test/test.log)
> FAIL: //mesh_renderer:rasterize_triangles_test (see...

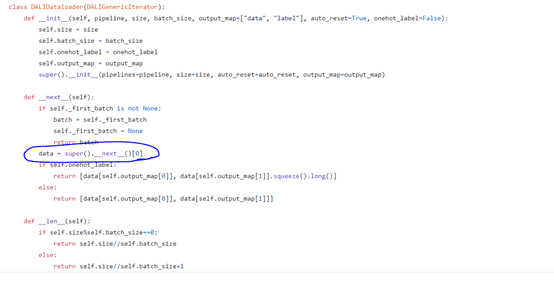

The problem seems to be here, but I am not sure. Could somebody show me what is wrong?

Thank you so much for replying. I am a little busy these days with some exams. Can I consult you later? I was so happy and amazed that you...

I seem to have solved this problem, but I still get a segmentation fault. I would really like to consult you later about some strange problems. Thank you for your help again!...

I think it is for better and faster tuning. Maybe you could take a look at Figure 3(b) of the original paper.

Sorry to ask a question after such a long time :-). I am still not very clear about the effect of the **encoder attention mask** in GPT2. I understand that...

The part highlighted in red is missing.

Hi, could someone answer my question? Thanks a lot!