Imorton-zd


Can ClassifierChain not be adapted to multi-label deep learning?

Version: Python 3.6.3, scikit-multilearn 0.2.0

My code:

```
def create_model_single_class2(input_dim, output_dim):
    # create model
    model = Sequential()
    model.add(Dense(12, input_dim=input_dim, activation='relu'))
    model.add(Dense(8,...
```

```
Traceback (most recent call last):
  File "test_multi_label_baselines.py", line 7, in <module>
    from skmultilearn.ext import Keras
ImportError: cannot import name Keras
```

Why does this happen?
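One way to narrow down an `ImportError: cannot import name ...` is to check whether the package itself imports and whether it actually exposes the name. The helper below is a minimal diagnostic sketch; `module_exports` is a hypothetical name introduced here, not part of scikit-multilearn, and the demonstration uses the standard-library `json` module so that it runs anywhere.

```python
import importlib


def module_exports(module_name, attribute):
    """Report whether `module_name` imports and exposes `attribute`.

    Hypothetical helper for illustration only; returns (ok, message).
    """
    try:
        module = importlib.import_module(module_name)
    except ImportError as exc:
        # The package itself is missing or fails during import.
        return False, "cannot import {}: {}".format(module_name, exc)
    if hasattr(module, attribute):
        return True, "{}.{} is available".format(module_name, attribute)
    # The package imports, but the requested name is not defined in it.
    return False, "{} imported, but has no attribute {!r}".format(module_name, attribute)


# Demonstration with a standard-library module.
print(module_exports("json", "dumps"))
print(module_exports("json", "Keras"))
```

In the failing environment, calling `module_exports("skmultilearn.ext", "Keras")` would show whether the subpackage imports at all. Checking `module_exports("keras", "__version__")` as well may help, on the unconfirmed assumption that the wrapper is only exposed when Keras itself is importable.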

Thanks for your repository, which has given me a lot of inspiration. To the best of my knowledge, attention and pointer mechanisms are popular in sequence-to-sequence tasks such as chatbots...

```
json_string = tweet_model.to_json()
open(r'models\tweet_model_architecture.json', 'w', encoding = 'utf-8').write(json_string)
tweet_model.save_weights(r'models\tweet_model_weights.h5',overwrite = True)

  File "C:\Anaconda2\lib\site-packages\spyderlib\widgets\externalshell\sitecustomize.py", line 699, in runfile
    execfile(filename, namespace)
  File "C:\Anaconda2\lib\site-packages\spyderlib\widgets\externalshell\sitecustomize.py", line 74, in execfile
    exec(compile(scripttext, filename, 'exec'), glob,...
```

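Hello, I learned a lot from reading your repository, and I have one small question. The time_step of the AttentionDecoder input must be the same as the encoder's time_step, so the time_step of the AttentionDecoder output also equals the encoder's time_step. But the number of input and output time steps usually differs, doesn't it? For example, if the input is a question and the output is an answer, the question and the answer generally contain different numbers of words.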
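To make the shape question concrete, here is a minimal pure-Python sketch of generic dot-product attention. It is not the repository's AttentionDecoder and says nothing about what that layer supports; the function names and toy values are assumptions for illustration. It only shows that, in the general formulation, the number of decoder steps (3 here) need not match the number of encoder steps (7 here).

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(decoder_states, encoder_states):
    """Return one context vector and one weight row per decoder step."""
    contexts, all_weights = [], []
    for query in decoder_states:
        # One score per encoder step: dot product of query and key.
        scores = [sum(q * k for q, k in zip(query, key)) for key in encoder_states]
        weights = softmax(scores)
        # Context vector: attention-weighted sum of the encoder states.
        context = [
            sum(w * key[i] for w, key in zip(weights, encoder_states))
            for i in range(len(query))
        ]
        contexts.append(context)
        all_weights.append(weights)
    return contexts, all_weights


# Toy data: 7 encoder steps and 3 decoder steps, hidden size 4.
encoder_states = [[0.1 * (t + i) for i in range(4)] for t in range(7)]
decoder_states = [[0.2 * (t - i) for i in range(4)] for t in range(3)]

contexts, weights = dot_product_attention(decoder_states, encoder_states)
print(len(contexts), len(weights[0]))  # prints "3 7"
```

Each decoder step gets its own weight row over all encoder steps, so the output length follows the decoder side rather than the encoder side.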

Thanks for your wonderful study. Could you please provide the dataset with the splits you used?

```
decoder_inputs = Input(shape=((None, num_decoder_tokens)))
decoder_layer = AttGRUCond(latent_dim, return_sequences=True)
decoder_outputs, states = decoder_layer(decoder_inputs, encoder_outputs, state)
decoder_dense = Dense(num_decoder_tokens, activation='softmax')
decoder_seqs = decoder_dense(decoder_outputs)
```

When using the AttGRUCond layer, the above code snippet yields...

```
InvalidArgumentError (see above for traceback): Multiple OpKernel registrations match NodeDef 'decode_1/decoder/GatherTree = GatherTree[T=DT_INT32](decode_1/decoder/TensorArrayStack_1/TensorArrayGatherV3, decode_1/decoder/TensorArrayStack_2/TensorArrayGatherV3, decode_1/decoder/while/Exit_14)': 'op: "GatherTree" device_type: "CPU" constraint { name: "T" allowed_values { list { type: DT_INT32...
```

Hi, I hope I'm not bothering you. I recently implemented a simple autoencoder in Keras for text classification, to do domain adaptation, but it performs worse than the original...

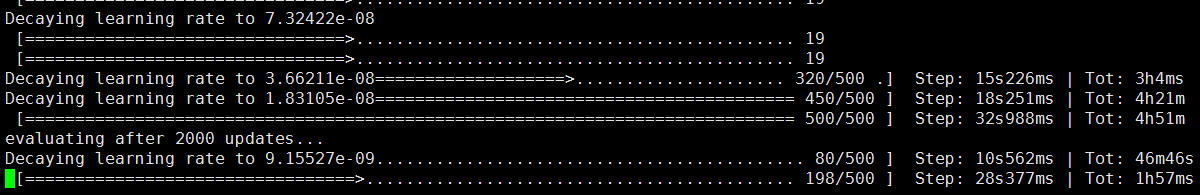

For example, my actual target file has 2500 samples, but the predicted candidate file has only 1700 lines. The training process seems normal. What's wrong?
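A quick way to confirm and quantify a mismatch like this is to count the lines in both files. The sketch below assumes plain UTF-8 text files with one sample per line; the function names and the file names in the usage comment are hypothetical.

```python
def count_lines(path):
    """Count the lines in a UTF-8 text file."""
    with open(path, "r", encoding="utf-8") as handle:
        return sum(1 for _ in handle)


def compare_line_counts(target_path, candidate_path):
    """Return (target_lines, candidate_lines, missing_lines)."""
    target = count_lines(target_path)
    candidate = count_lines(candidate_path)
    return target, candidate, target - candidate


# Usage (hypothetical file names):
# target, candidate, missing = compare_line_counts("target.txt", "candidate.txt")
# print(target, candidate, missing)
```

A positive third value is the number of target samples that have no corresponding prediction line.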