FredFirestone

Converting a 320 GB image via `qemu-img` took about 30 h, which works out to an average of roughly 3 MB/s. `/proc/spl/kstat/zfs/dbgmsg` contains ``` 1631126526 zio.c:1969:zio_deadman(): zio_wait waiting for hung I/O to pool 'tank_01'...
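To see when and how often the deadman fires, the dbgmsg lines can be tallied. This is only a minimal sketch: it assumes every message line starts with a Unix timestamp followed by `file:line:function():`, as in the excerpt above. The helper name `parse_deadman` and the regular expression are my own illustration, not part of ZFS.

```python
import re
from datetime import datetime, timezone

# Assumed line shape, taken from the excerpt above:
#   <epoch seconds> <file>:<line>:<function>(): <message>
LINE_RE = re.compile(r"^(\d+)\s+(\S+?):(\d+):(\w+)\(\):\s*(.*)$")

def parse_deadman(lines):
    """Yield (UTC datetime, pool name or None) for each zio_deadman message."""
    for line in lines:
        m = LINE_RE.match(line.strip())
        # Skip header lines and messages from other functions.
        if not m or m.group(4) != "zio_deadman":
            continue
        pool = re.search(r"pool '([^']+)'", m.group(5))
        when = datetime.fromtimestamp(int(m.group(1)), tz=timezone.utc)
        yield when, (pool.group(1) if pool else None)

# Sample line copied from the log excerpt (without the truncated tail).
sample = [
    "1631126526 zio.c:1969:zio_deadman(): zio_wait waiting for hung I/O to pool 'tank_01'",
]
events = list(parse_deadman(sample))
for when, pool in events:
    print(when.isoformat(), pool)
```

On a live system the same function could be fed the lines of `/proc/spl/kstat/zfs/dbgmsg` instead of `sample`.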

This may be related to #9130, since txg_sync can take a long time and effectively stalls all other I/O. When overwriting data I'm obviously also hit by too many free tasks (#3976),...

In another issue I found a convenient SMART report that seems relevant: ``` ZPOOL_SCRIPTS_AS_ROOT=1 zpool iostat -c smart capacity operations bandwidth pool alloc free read write read write health realloc...

Actually there are two different models involved (ST8000NM0105-1VS112 and ST8000VX0022-2EJ112), but disks 3 to 8 are the same model (ST8000VX0022-2EJ112). In the list above the command timeout counter is extraordinarily high just...

I chose SkyHawks because they are CMR, helium-free and, according to the specs, rated for 24x7 operation and 180 TB/year. Even if they aren't top-notch performance drives, they should do better...

Issue #2885 also seems related: larger amounts of data bring write performance down, which is also what happens in my case because of the small reads. I just found https://openzfs.org/w/images/8/8d/ZFS_dedup.pdf...

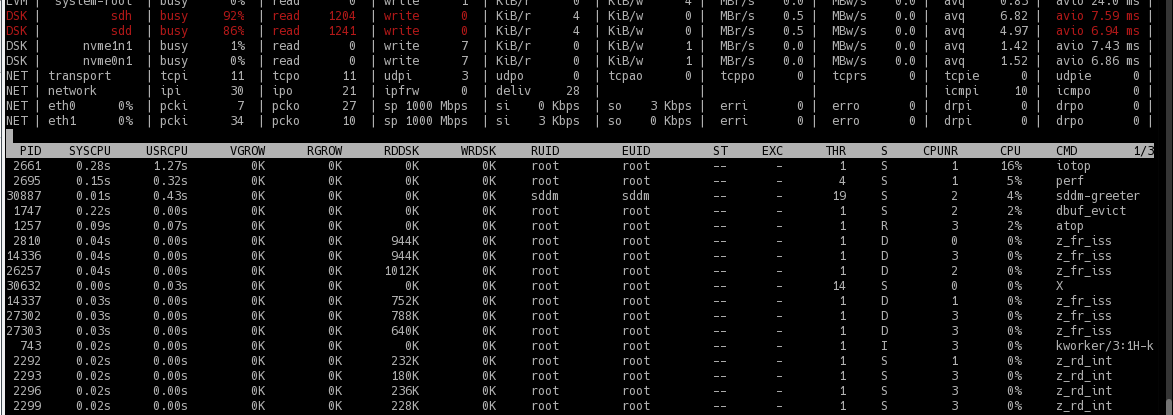

I just deleted several GB and again got almost continuous "4k" reads on two disks:

So my theory is:

* The DDT got, for some reason, clustered on these two...

While looking for more information about the DDT, I stumbled on special vdevs (for dedup), which I wasn't aware of. I also found https://www.truenas.com/community/goto/post?id=604334 on heavy 4k reads (and a hint to...

Even with L2ARC, copying a larger amount of data is still slow; I'd guess around 10-20 MB/s (considerably higher than the initial 2.4 MB/s). So, contrary to #7971, it seems...
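To put these rates in perspective, here is a rough back-of-the-envelope calculation for the 320 GB image from the first post. It is a sketch under two assumptions: decimal units (1 GB = 1000 MB) and a constant rate, which a real copy will not have. The function names are just for illustration.

```python
def hours_to_copy(size_gb, rate_mb_s):
    """Hours needed to move size_gb (decimal GB) at a constant rate_mb_s (MB/s)."""
    return size_gb * 1000 / rate_mb_s / 3600

def average_rate(size_gb, hours):
    """Average rate in MB/s for size_gb (decimal GB) moved in the given hours."""
    return size_gb * 1000 / (hours * 3600)

# Rates quoted in this thread: the initial 2.4 MB/s and the 10-20 MB/s guess.
for rate in (2.4, 10, 20):
    print(f"{rate:>4} MB/s -> {hours_to_copy(320, rate):.1f} h")

# Cross-check of the original observation: 320 GB in about 30 h.
print(f"320 GB in 30 h -> {average_rate(320, 30):.2f} MB/s")
```

At 10-20 MB/s the same image would take roughly 4.4 to 8.9 h instead of more than a day, which is better but still far below what the disks should deliver sequentially.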

Well, I consider dedup a much-neglected ZFS feature and understand that some may view its use as incorrect per se, which I would not agree with. I'm also not too...