Fazzie

> Any update @binmakeswell ? It would be very great to fasten training process.
>
> I found [train_dreambooth.py](https://github.com/hpcaitech/ColossalAI/blob/a9b27b9265c31175192643e3974187e5ea112c1d/examples/images/dreambooth/train_dreambooth.py) and [train_dreambooth_colossalai.py](https://github.com/hpcaitech/ColossalAI/blob/a9b27b9265c31175192643e3974187e5ea112c1d/examples/images/dreambooth/train_dreambooth_colossalai.py) that are based on diffusers. Thank you for your...

Flash attention is not a stable feature in ColossalAI yet. It is a memory-efficient attention algorithm, and the dim size must be a power of 2.
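If you want to check your config before turning flash attention on, something like the sketch below may help. It assumes "dim size" means the per-head dimension (hidden size divided by number of heads), which is my reading and not confirmed here; `head_dim` and `is_power_of_two` are hypothetical helper names, not part of ColossalAI.

```python
def is_power_of_two(n: int) -> bool:
    """Return True if n is a positive power of two (1, 2, 4, 8, ...)."""
    # A power of two has exactly one bit set, so n & (n - 1) clears it to 0.
    return n > 0 and (n & (n - 1)) == 0


def head_dim(hidden_size: int, num_heads: int) -> int:
    """Per-head dimension, assuming the hidden size is split evenly across heads."""
    if num_heads <= 0 or hidden_size % num_heads != 0:
        raise ValueError("hidden_size must be divisible by a positive num_heads")
    return hidden_size // num_heads


if __name__ == "__main__":
    # Hypothetical layer shapes, only for illustration.
    for hidden_size, num_heads in [(512, 8), (320, 8)]:
        d = head_dim(hidden_size, num_heads)
        print(hidden_size, num_heads, d, is_power_of_two(d))
```

With these example numbers, 512 / 8 gives a head dimension of 64, which passes the check, while 320 / 8 gives 40, which does not.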

We fixed `use_ema` in https://github.com/hpcaitech/ColossalAI/pull/1986/files

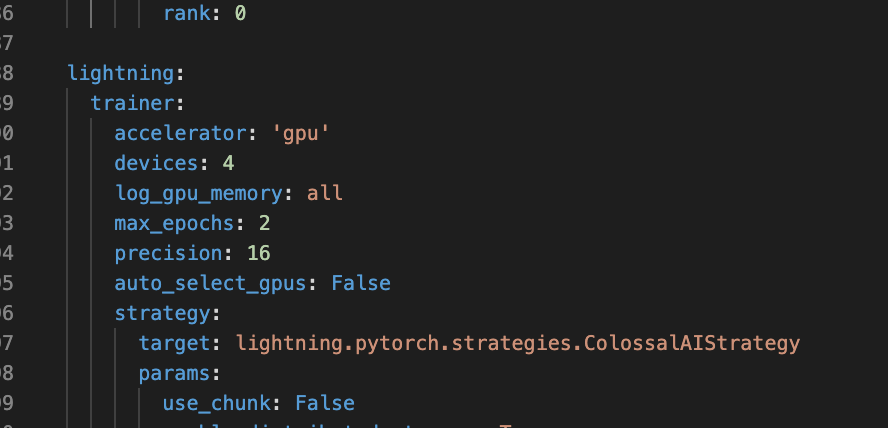

You can try `train_colossalai.yaml`.

`train_pokemon.yaml` is not complete yet.

> Is this a useless project?

It has been fixed in https://github.com/hpcaitech/ColossalAI/pull/2561

The noise problem in the ColossalAI version has been fixed in https://github.com/hpcaitech/ColossalAI/pull/2561

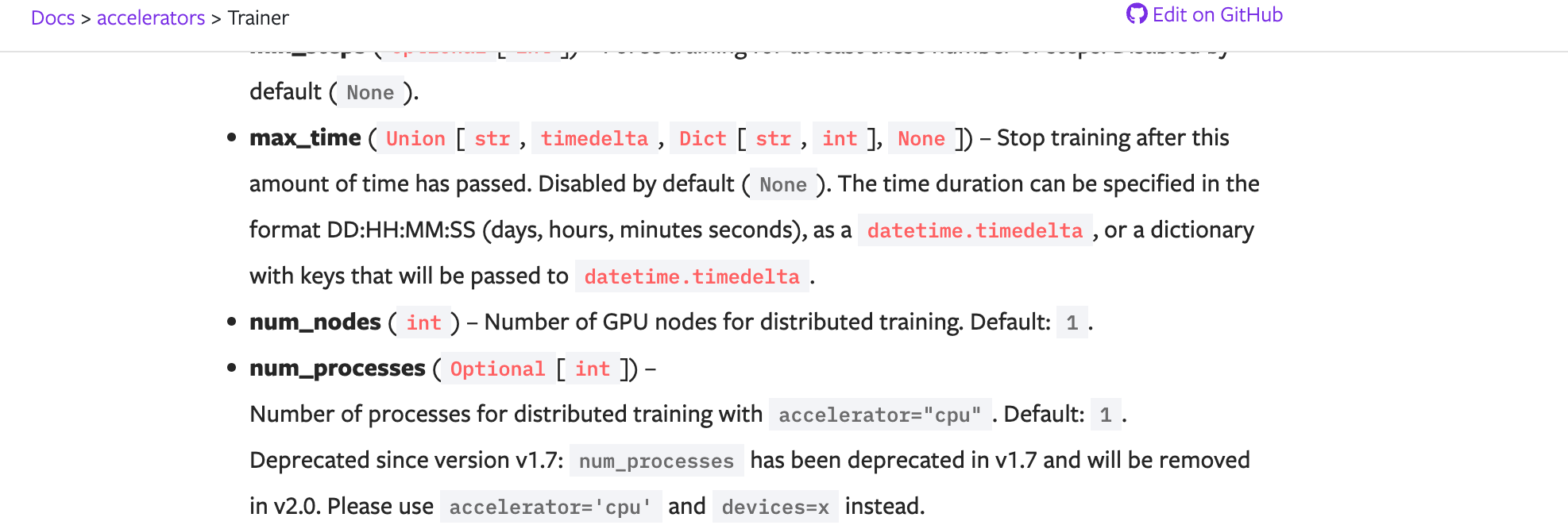

https://pytorch-lightning.readthedocs.io/en/latest/api/pytorch_lightning.trainer.trainer.Trainer.html#pytorch_lightning.trainer.trainer.Trainer