Michael

@vince62s I want to export a PyTorch model to an ONNX model like this (MT task using a Transformer, with no modifications to the original code): def main(opt): dummy_parser = configargparse.ArgumentParser(description='train.py') opts.model_opts(dummy_parser) dummy_opt =...

@vince62s You said to change the way attentions flow: they are currently a dictionary, but this does not work because a list or tuple is required (https://github.com/OpenNMT/OpenNMT-py/issues/638#issuecomment-434765232). But now you said but again given some code...
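To illustrate the dictionary versus list/tuple point above, here is a minimal sketch in plain Python, not the actual OpenNMT-py code. It flattens a dictionary of attentions into a tuple with a fixed key order and restores it afterwards. The helper names `attns_to_tuple` and `tuple_to_attns` and the keys `"std"` and `"copy"` are assumptions chosen for illustration only.

```python
from typing import Any, Dict, Sequence, Tuple


def attns_to_tuple(attns: Dict[str, Any], order: Sequence[str]) -> Tuple[Any, ...]:
    """Flatten a dict of attentions into a tuple, using an explicit key order.

    Hypothetical helper: an exporter that only accepts list/tuple outputs
    could be given this tuple instead of the dict.
    """
    return tuple(attns[key] for key in order)


def tuple_to_attns(values: Sequence[Any], order: Sequence[str]) -> Dict[str, Any]:
    """Rebuild the dict on the consumer side from the same key order."""
    if len(values) != len(order):
        raise ValueError("number of values does not match number of keys")
    return dict(zip(order, values))


# Illustrative key order and placeholder values (assumed, not from the repo).
ATTN_ORDER = ("std", "copy")
example_attns = {"copy": [0.3, 0.7], "std": [0.1, 0.9]}

flat = attns_to_tuple(example_attns, ATTN_ORDER)
restored = tuple_to_attns(flat, ATTN_ORDER)
```

The key order is passed explicitly so the position of each attention in the tuple does not depend on how the dictionary was built.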

@vince62s ONNX is native to PyTorch 1.0 as a model output format. Could the output be in ONNX format?

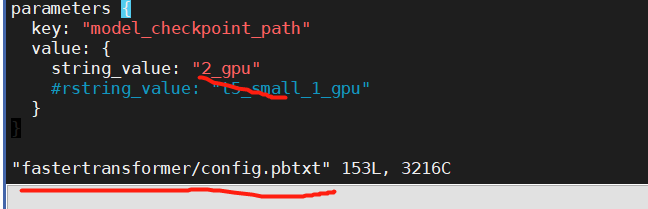

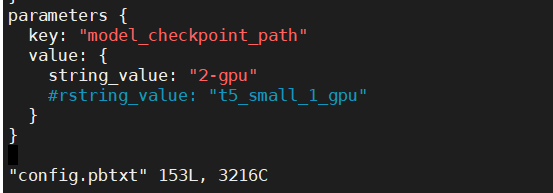

I followed the README, but I hit some errors, as follows. First, I start the server (the model path cannot be set like --model-repository=/workspace/build/fastertransformer_backend/all_models/t5/fastertransformer/ or --model-repository=/workspace/build/fastertransformer_backend/all_models/t5/fastertransformer/1 , because the program will...

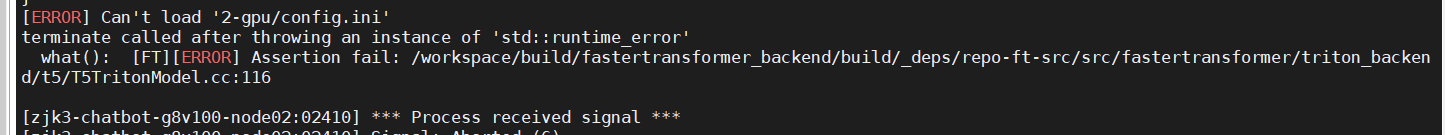

After I mv the model_checkpoint_path to 2-gpu, I run the command: mpirun -n 1 --allow-run-as-root /opt/tritonserver/bin/tritonserver --model-repository =/workspace/build/fastertransformer_backend/all_models/t5 and I got this error. It seems the file name is...

I set the model_checkpoint_path to 2-gpu, and I still get the error.

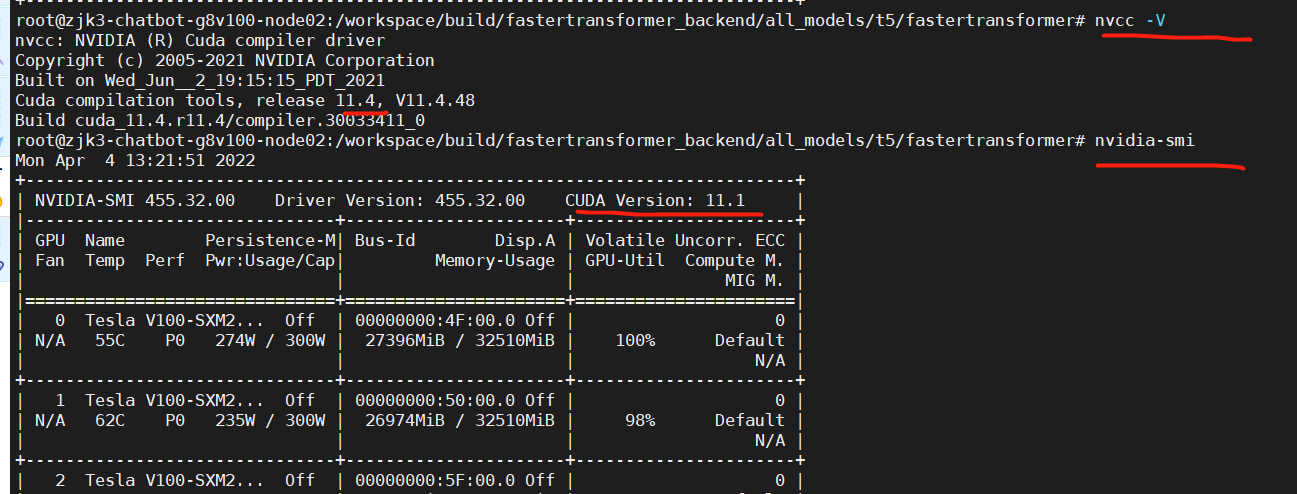

Thanks, it worked! One more question: must the CUDA version be 11.4 if I want to run T5 in Triton?

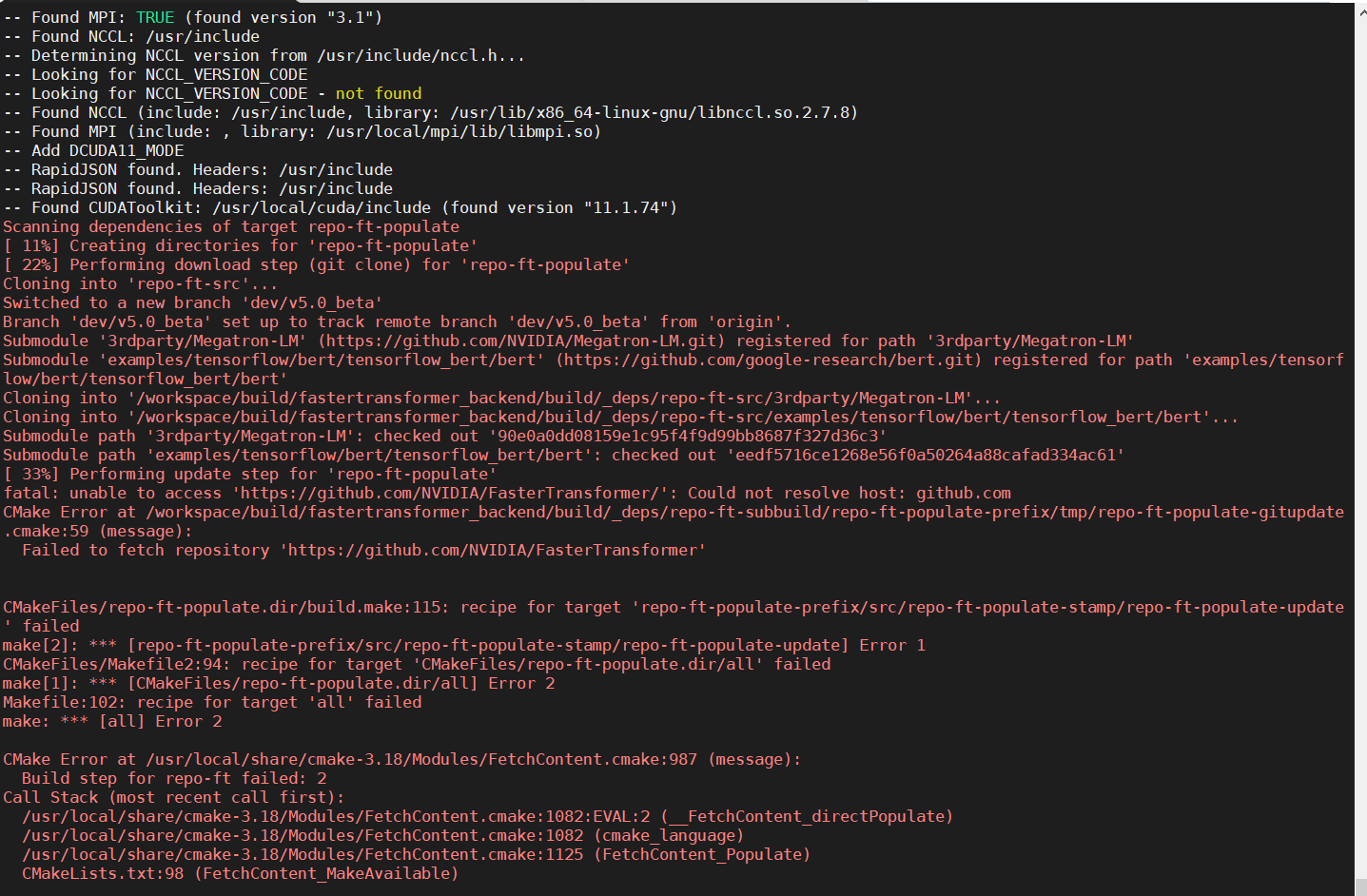

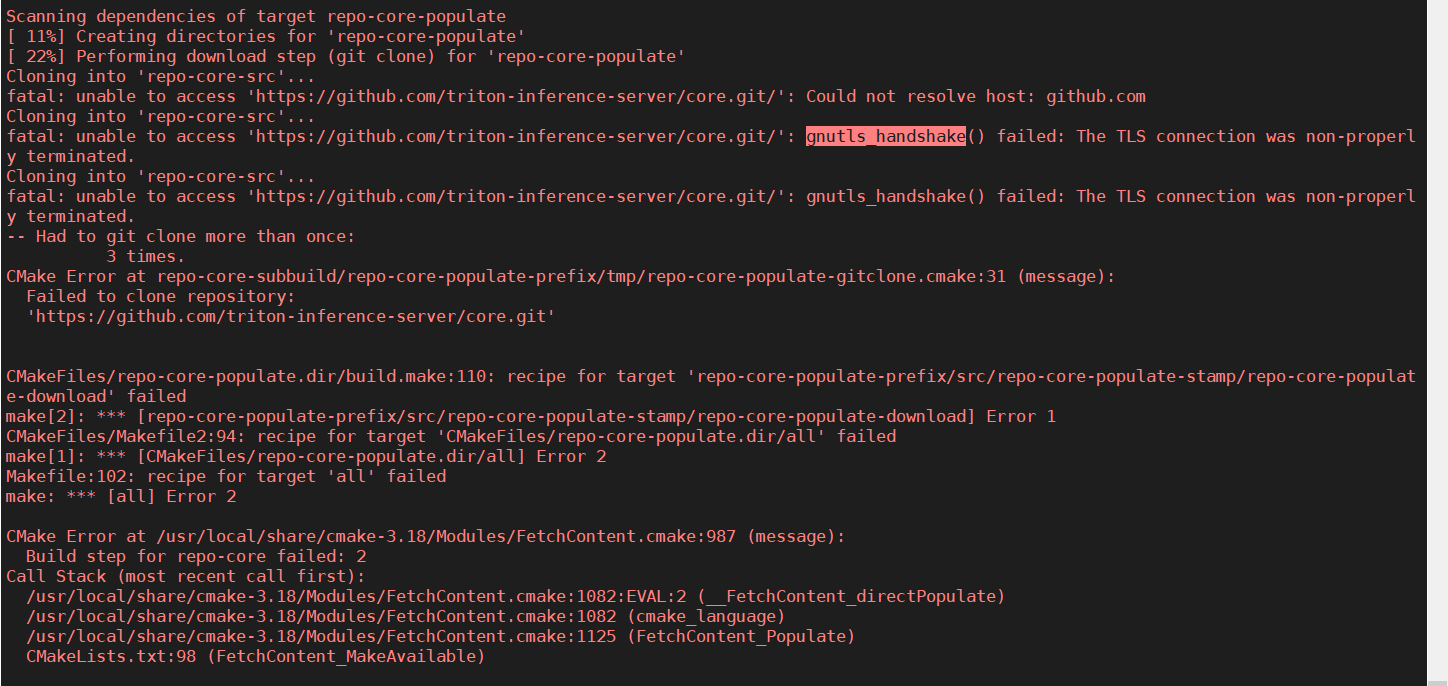

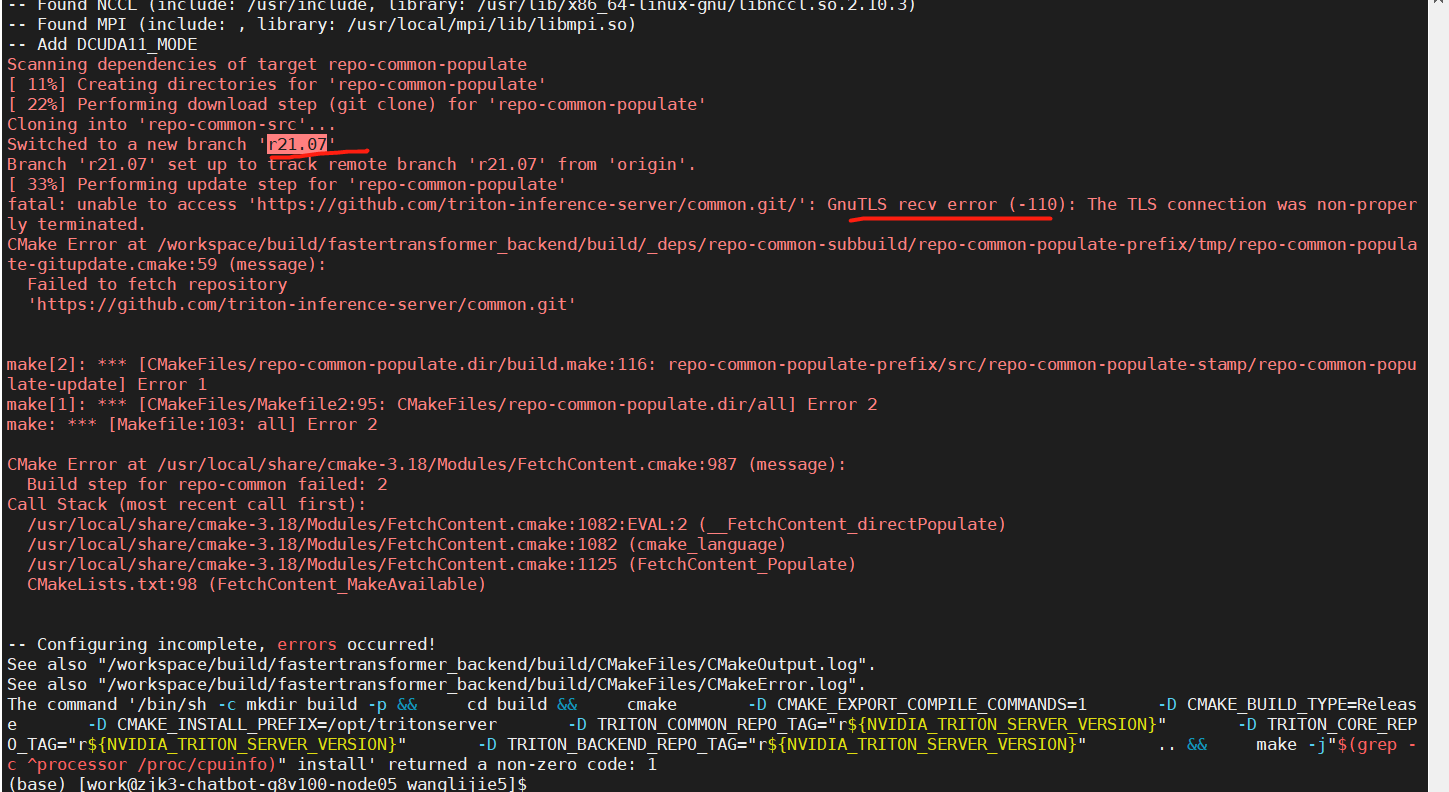

I set CONTAINER_VERSION=20.10, and it seems there are GitHub access errors in the CMake file. Then I changed https to http in fastertransformer_backend/CMakeLists.txt, and I get this error:...

I can build the Dockerfile successfully with the default CONTAINER_VERSION of 21.08, but then I hit the CUDA mismatch error. So I changed CONTAINER_VERSION to 20.10 as you said and build...

I tried 21.07, and it's the same error. I can find 20.10 at https://github.com/triton-inference-server/server/branches/all?page=5, so maybe it's a network error. Should I do some config about GitHub in...