Batch sizing for aws_s3 sink does not work


Problem

Vector pushes only small files to S3, and the buffer and batch settings seem to have no effect.

Expectation: if 'batch.max_events' and 'batch.timeout_secs' are set, I expect Vector to push files to S3 that contain up to 'max_events' events, or to wait until the timeout is reached.

What I see instead is that, no matter what I configure here, hundreds of files are pushed every minute, each 300-500 KB in size and containing 400-600 records. I tried increasing and decreasing both the batch and buffer properties, but nothing changed.

We consume the data from a Kafka topic that has 60 partitions. Does the batching maybe depend on the Kafka partitioning?

Please see the config below. If this is not a bug but a configuration mistake on my part, please let me know. Thanks for your help.

Configuration

data_dir = "/data/vector"

[sources.kafka_in_aiven]
type = "kafka"
topics = ["testTopic"]
bootstrap_servers = "some-bootstrap-servers"
group_id = "kafka-test-ingester"
auto_offset_reset = "latest"
tls.enabled = true
# ... security configs ...
decoding.codec = "json"

[transforms.timestamp_to_ingestion]
type = "remap"
inputs = ["kafka_in_aiven"]
source = '''
.timestamp = now()
'''

[sinks.s3]
type = "aws_s3"
inputs = [ "timestamp_to_ingestion" ]
encoding.codec = "json"
bucket = "some-bucket-path"
key_prefix = "data-path/year=%Y/month=%m/day=%d/hour=%H/"
auth.assume_role = "aws-arn"
filename_extension = "json.gz"
storage_class = "ONEZONE_IA"
batch.timeout_secs = 120
batch.max_events = 25000
buffer.max_events = 50000
framing.method = "newline_delimited"

Version

0.24.0, running on Kubernetes with the Docker image timberio/vector:0.24.0-distroless-libc


> Does the batching maybe depend on the Kafka partitioning?

Batching shouldn't depend on the Kafka partitioning. It should depend only on the batch settings you configured. Batches are also partitioned by the rendered key_prefix, but in your config that only changes once per hour.

I did notice you aren't setting max_bytes, which defaults to 10 MB for the S3 sink. You are seeing files that are 300-500 KB, but this limit applies to the uncompressed size, whereas what you are seeing is the gzip-compressed size, so you are likely hitting the size limit.

Try setting max_bytes to a higher value. If that doesn't help, we can keep investigating what might be going on.
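For reference, a batch is sent as soon as any one of its limits is reached, so with only max_events and timeout_secs set, the default max_bytes can still trigger the flush first. A minimal sketch of the batch-related lines in the [sinks.s3] table with all three limits set explicitly might look like this (replacing the existing batch.* lines). The 100 MB value is an arbitrary illustration, not a recommended setting.

```toml
[sinks.s3]
# ... other sink settings unchanged ...

# A batch (one S3 object) is flushed as soon as ANY of these limits is reached.
batch.max_events = 25000      # maximum number of events per object
batch.max_bytes = 100000000   # ~100 MB, measured before gzip compression (illustrative value)
batch.timeout_secs = 120      # send a partially filled batch after 2 minutes
```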

I'm also having a similar issue and can't seem to resolve it, despite setting all three parameters (timeout_secs, max_bytes, max_events) to very large values. Vector created 136 chunk files for a 20 MB test file I generated (I would have expected it to create one file). Here's my config:

aws_s3:
  type: aws_s3
  inputs:
    - application_logs
  batch:
    timeout_secs: 1800 # 30 Minutes
    max_bytes: 31457280 # 30 MB
    max_events: 100000000 # 100,000,000 Events
  bucket: '${VECTOR_DATA_BUCKET}'
  key_prefix: '${VECTOR_LOG_STORAGE_PREFIX}{{ file }}/'
  compression: gzip
  encoding:
    codec: text
  region: '${VECTOR_AWS_REGION}'
  healthcheck:
    enabled: true

Any indication as to whether this is an internal bug or a configuration error?

I am also having a similar issue. Even though max_bytes is set to 5 MB and the timeout is set to 3 minutes, files are written almost every 10 seconds and are smaller than 20 kB. Any reason for this behaviour?

Details: Vector version: 0.20.0

Config:

[sinks.s3_sink]
type = "aws_s3"
inputs = [ "<input_name>" ]
bucket = "<bucket_name>"
key_prefix = "<key_prefix>"
compression = "gzip"
region = "eu-central-1"

[sinks.s3_sink.batch]
timeout_secs = 180 # default 300 seconds
max_bytes = 5242880 # 5 MB

[sinks.s3_sink.buffer]
type = "memory" # default
max_events = 3000 # default 500 events with memory buffer

[sinks.s3_sink.auth]
assume_role = "<iam_role_arn>"

[sinks.s3_sink.encoding]
codec = "text"


I could definitely see a 10-15 KB gzip file being ~5 MB uncompressed; the batch size limit applies to uncompressed data. Could you uncompress a sample and report its size?

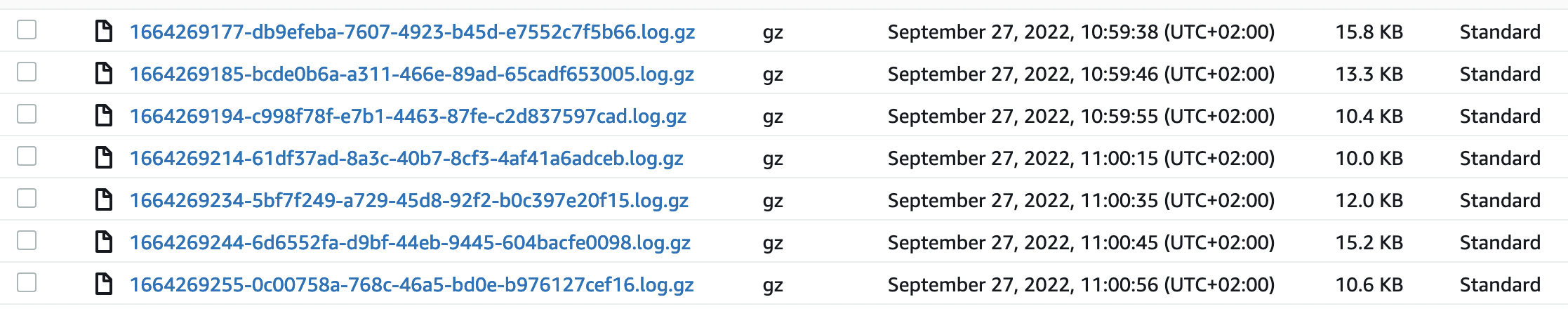

@spencergilbert: Compressed: 15.8 KB, Uncompressed: 963 KB

We're seeing similar numbers to @amanmahajan26. Does anyone know of a stable version we can downgrade to on which batch sizing worked properly?

> I am also having a similar issue. Even though the max_bytes is set to 5 MB and timeout is set to 3 mins, the files that are written almost every 10 seconds and are smaller than 20 kB in size. Any reason for this behaviour?

This still seems likely to me to be the same root cause as https://github.com/vectordotdev/vector/issues/10020. Does bumping the batch size by, say, 10x have any effect?

@jszwedko: I increased the batch size by 4x and the uncompressed file size roughly doubled.


Gotcha, yeah, that does make it sound a lot like https://github.com/vectordotdev/vector/issues/10020 then. I am hoping that is something we can tackle in Q4.

Thank you, folks. Increasing max_bytes by a factor of 10 had an effect, so I guess the byte size we tried before was too low. But I also agree that fixing https://github.com/vectordotdev/vector/issues/10020 would help.
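Applied to the s3_sink config above, a 10x increase of max_bytes might look roughly like the sketch below. The value simply multiplies the original 5 MB by 10 and is not a tuned recommendation; since the observed file size did not scale linearly with the setting (4x gave roughly 2x), some experimentation is likely needed.

```toml
[sinks.s3_sink.batch]
timeout_secs = 180     # send the batch after 3 minutes even if it is not full
max_bytes = 52428800   # 10 x 5 MB; the limit applies to uncompressed data, not the final .gz size
```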

Slightly off-topic, but can someone please explain what's the difference between batch.max_events and buffer.max_events and what effect will these have on my pipeline?


buffer.* controls a durability and backpressure mechanism in Vector. The following page hasn't been published yet, so it may be incomplete or contain typos, but more details can be found here: https://master.vector.dev/docs/about/under-the-hood/architecture/buffering-model/
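To make the distinction concrete, here is a sketch based on the s3_sink example above, with comments reflecting our understanding: buffer.* sizes the queue of events waiting in front of the sink, while batch.* controls how many events (or bytes) go into each request, which for aws_s3 means each object written. when_full is shown at its default value ("block") only for illustration.

```toml
# Buffer: queue in front of the sink that holds events waiting to be batched and sent.
[sinks.s3_sink.buffer]
type = "memory"
max_events = 3000      # capacity of the in-memory queue, in events
when_full = "block"    # apply backpressure to upstream components when the queue is full

# Batch: how events are grouped into a single S3 object.
[sinks.s3_sink.batch]
max_bytes = 5242880    # upper bound on uncompressed bytes per object
timeout_secs = 180     # maximum time a batch is held before it is sent
```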