Twitter Prioritizing Tweets with High Engagement and the Need for a 'Not Helpful' Option

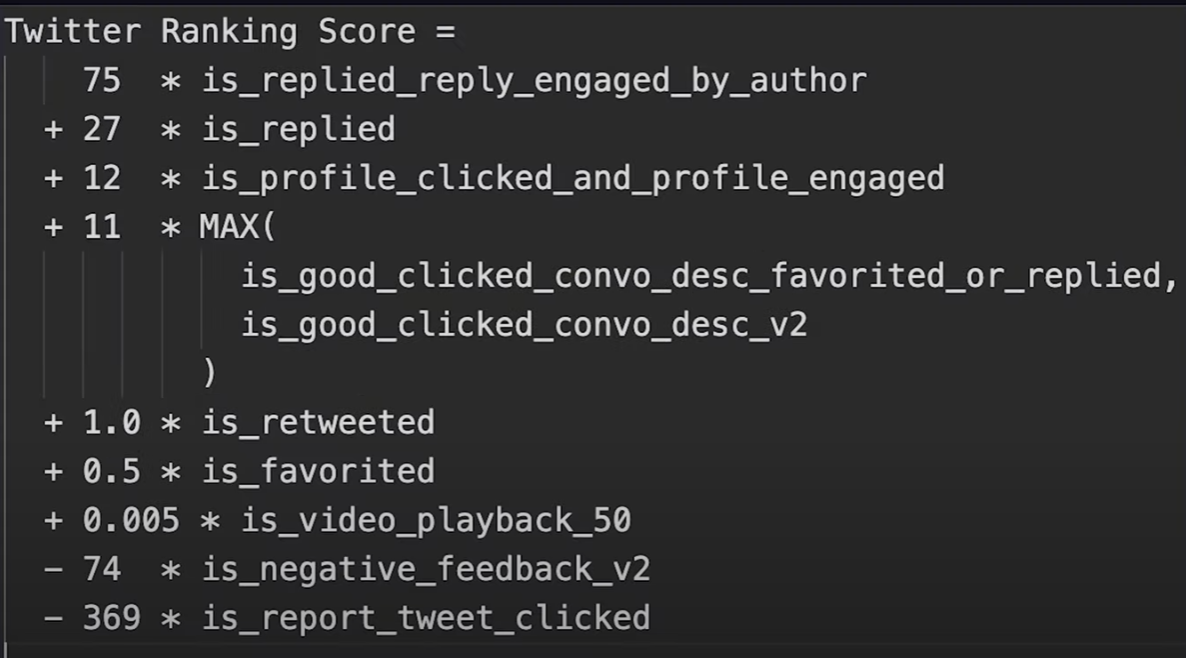

What's Happening?

Twitter currently prioritizes tweets with high engagement, such as likes, retweets, and comments, in users' timelines and search results. While this may seem like a good way to surface popular content, it also encourages the spread of low-quality, toxic content and amplifies the voices of a small but vocal minority.

The Problem

The problem with prioritizing engagement is that engagement does not necessarily reflect the quality of the content or the value it brings to users. As a result, controversial or divisive tweets often receive the most engagement, creating a toxic environment where negative interactions are incentivized. This can discourage users from engaging in meaningful conversations and ultimately lead to a decline in user satisfaction.

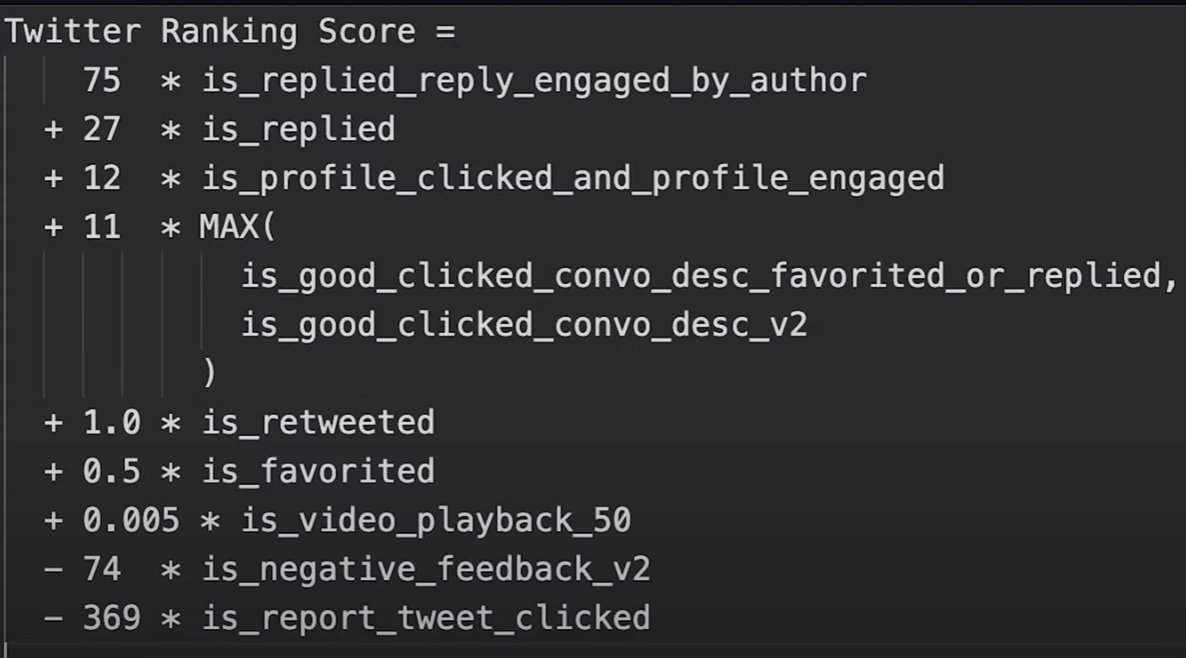

The Solution

To address this issue, I suggest implementing a 'Not Helpful' option, similar to those on other social media platforms. This would allow users to signal disapproval of low-quality or controversial content without engaging in negative interactions. The 'Not Helpful' feature could also give Twitter valuable feedback on the type of content users want to see, helping to improve the overall quality of the platform.

Alternatives Considered

I believe that implementing a 'Not Helpful' option could help to balance the engagement-based algorithm and promote healthier interactions on Twitter. I urge Twitter to consider this option and work towards creating a more positive and inclusive environment for all users.

Example for Context

(Tweet used for demonstration purposes only)

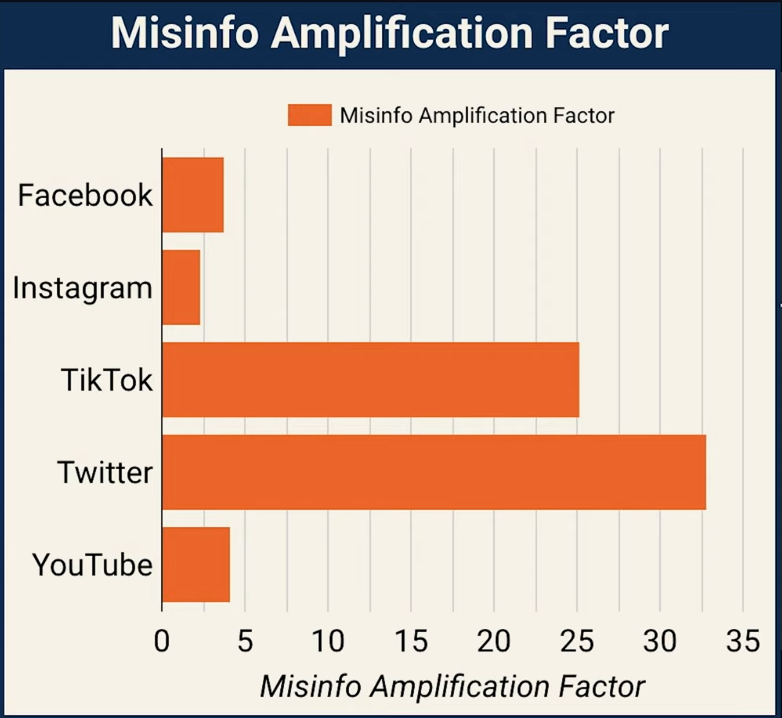

Twitter is the most susceptible to misinformation because not everyone knows that blocking or reporting will debuff a tweet; instead, they engage with it and unintentionally promote it.

Right here is where we could add the feature. Example:

`-269 * is_not_helpful_clicked`
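As a rough illustration, assuming the ranking score is a weighted sum of predicted engagement probabilities, the proposal amounts to adding one more negatively weighted term. Only the -269 weight comes from this thread; the other weights, the signal names, and the `engagement_score` function are hypothetical placeholders, not Twitter's actual code.

```python
# Hypothetical weights for illustration only. The -269 Not Helpful weight is the
# value suggested in this thread; the others are made-up placeholders.
WEIGHTS = {
    "like": 0.5,
    "retweet": 1.0,
    "reply": 13.5,
    "is_not_helpful_clicked": -269.0,
}


def engagement_score(predicted: dict[str, float]) -> float:
    """Weighted sum of predicted engagement probabilities.

    Signals missing from `predicted` are treated as probability 0.
    """
    return sum(weight * predicted.get(signal, 0.0) for signal, weight in WEIGHTS.items())


# A divisive tweet: lots of predicted replies, but a 5% chance of "Not Helpful".
divisive = {"like": 0.2, "retweet": 0.1, "reply": 0.3, "is_not_helpful_clicked": 0.05}
print(round(engagement_score(divisive), 2))  # -9.2
```

With these made-up numbers the tweet would score 4.25 on replies and likes alone, but the single new term pulls it down to -9.2, so a small share of "Not Helpful" clicks can outweigh a large volume of argumentative replies.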

I strongly believe in this initiative and that, by addressing this issue, Twitter has the potential to become the leading platform for up-to-date news. However, to make this a reality, we need Twitter's approval. I therefore propose that we raise awareness of the issue and promote our solution to garner support from the Twitter community and persuade Twitter to take action.

This is excellent. Seconded.

The problem you describe is the cause of virality. The real problem is how they compute the metrics. I'm trying to find the code... I'll use this instead.

My suggestion would be to reweight positive and negative sentiment.

- Suppose case A: 99 pos, 1 neg. The sample size is small, so 1% means nothing here.

- Case B: 1e10 pos, 1e8 neg. The sample size is huge, so 1% means more.

In case B the negative count is huge in absolute terms but still a minority, so it might not look like a clear issue, yet it is: 100M negatives are still only 1%. The solution is to amplify neg as it scales so that minority votes count more as the evidence piles up. If something is very offensive to a small minority population, it's offensive, but that minority will never have the votes without neighbor/community support. A simple fix for this naive example is additive smoothing (similar to the Bayesian average) combined with changing neg to neg^1.05 or a similar approach.
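A minimal sketch of that idea, assuming the negative count is raised to the 1.05 power and the resulting negative share is then smoothed toward a prior in the style of a Bayesian average. The function name and the prior values (`prior_share`, `prior_weight`) are hypothetical choices for illustration.

```python
def negative_share(pos: float, neg: float, exponent: float = 1.05,
                   prior_share: float = 0.02, prior_weight: float = 100.0) -> float:
    """Smoothed share of negative feedback with super-linear amplification.

    - `neg ** exponent` grows faster than `neg`, so at large volumes the same
      1% minority counts for proportionally more.
    - Additive smoothing adds `prior_weight` pseudo-votes at `prior_share`
      (Bayesian-average style), so tiny samples stay close to the prior.
    """
    amplified_neg = neg ** exponent
    denominator = pos + amplified_neg + prior_weight
    if denominator == 0:
        return prior_share  # no votes and no prior: fall back to the prior share
    return (amplified_neg + prior_share * prior_weight) / denominator


print(f"{negative_share(99, 1):.4f}")          # case A -> 0.0150
print(f"{negative_share(10**10, 10**8):.4f}")  # case B -> 0.0245
```

In case A the single negative vote is mostly absorbed by the prior, while in case B the amplified negatives weigh in at roughly 2.45% instead of the raw ~0.99%, which is the "count more as the evidence piles up" behavior described above. The exponent and prior would need tuning against real data.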

An alternative approach is to copy YouTube's current algorithm. It promotes videos with 20 views and no reputation or signaled interest from me because they "relate" to things I would watch. They intended this to squash virality for mental health's sake.

This is not an easy problem because it's a multi-armed bandit (MAB) problem, i.e. a balancing act. Hopefully someone finds this useful.
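To make the "balancing act" concrete, here is a generic epsilon-greedy multi-armed bandit sketch: it usually shows the item with the best estimated reward but occasionally explores another one, much like surfacing a 20-view video. This is a textbook technique chosen for illustration, not Twitter's or YouTube's actual algorithm; the arm names, rewards, and epsilon value are hypothetical.

```python
import random


def choose_arm(estimates: dict[str, float], epsilon: float, rng: random.Random) -> str:
    """Epsilon-greedy selection.

    With probability `epsilon`, explore a uniformly random arm (e.g. a new,
    low-view tweet); otherwise exploit the arm with the highest estimated reward.
    """
    if rng.random() < epsilon:
        return rng.choice(sorted(estimates))
    return max(estimates, key=estimates.__getitem__)


def update(estimates: dict[str, float], counts: dict[str, int], arm: str, reward: float) -> None:
    """Incrementally update the running mean reward for `arm`."""
    counts[arm] += 1
    estimates[arm] += (reward - estimates[arm]) / counts[arm]


# Hypothetical usage: one established tweet and one brand-new tweet.
rng = random.Random(42)
estimates = {"popular_tweet": 0.8, "new_tweet": 0.0}
counts = {"popular_tweet": 10, "new_tweet": 0}
arm = choose_arm(estimates, epsilon=0.1, rng=rng)
update(estimates, counts, arm, reward=1.0)
```

A larger `epsilon` spreads attention to unproven content (less virality, more discovery); a smaller one concentrates it on what is already known to perform, which is exactly the trade-off that makes this hard.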

I'm unsubscribed (not going to read a reply). Thanks for bringing this up.

This is out of my hands for now. Handling this issue of overly negative tweets will take someone better placed than me, along with careful consideration and testing of different approaches, as well as ongoing evaluation and refinement based on user feedback and data.

Right here is where we could add the feature. Example:

`-269 * is_not_helpful`

I thought it would be more difficult, but it looks much easier than I expected. All we need is for a Twitter developer to approve and implement this.

Twitter has/had a downvote button; it was showing on one of my accounts until that account was shadowbanned.

What you and they want is an echo chamber, which is great for BTS fans but horrible for politics. Twitter actively discourages showing people wrong: you do that to a snowflake and then they block/report you, decreasing your Social Score.

See the ranking algorithm in my More Speech project. I put it together in about an hour; it only looks at the content of the tweet, and it's objectively better than the Twitter system (see the More Speech reports).

The goal of this is not to adjust anyone's Social Score, but to keep that specific problematic tweet from spreading further.

#1760: In my suggestion, I work on balancing against echo chambers. I still think hiding certain things matters to users when it doesn't create an echo chamber. For example, I have no interest in sports or romance, and I don't like certain music genres either. I don't think hiding sports is the same as designing in an echo chamber that is objectively harmful.

If this works in a complementary way with my suggestion, the system would basically check whether marking something as not useful is related to the user's opposing ideological positions. In that case, the button would have no effect. However, if the button is used to express a preference with no ideological relation, it would take effect. I could say that chocolate ice cream is not useful but strawberry ice cream is, and the system would show me more about strawberry ice cream.
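The gating described in this comment could look roughly like the following sketch. It assumes some hypothetical classifier has already labeled each click as ideological or not (building that classifier is the hard, unsolved part), and reuses the -269 weight suggested earlier in the thread. `NotHelpfulSignal` and `not_helpful_penalty` are illustrative names only.

```python
from dataclasses import dataclass

NOT_HELPFUL_WEIGHT = -269.0  # weight suggested earlier in this thread


@dataclass
class NotHelpfulSignal:
    clicked: bool
    # Hypothetical flag: assumes an upstream classifier decided whether the
    # click reflects disagreement with the user's opposing ideological positions.
    is_ideological: bool


def not_helpful_penalty(signal: NotHelpfulSignal) -> float:
    """Score penalty contributed by one Not Helpful click.

    Ideological clicks lose their effect; preference clicks (sports, music
    genres, chocolate vs. strawberry ice cream) apply the full weight.
    """
    if not signal.clicked or signal.is_ideological:
        return 0.0
    return NOT_HELPFUL_WEIGHT


print(not_helpful_penalty(NotHelpfulSignal(clicked=True, is_ideological=False)))  # -269.0
print(not_helpful_penalty(NotHelpfulSignal(clicked=True, is_ideological=True)))   # 0.0
```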

Snow is triggered by Trump and Bubba is triggered by Biden. If they want to see a Trump- or Biden-free view of Twitter - like when parents spell out words so kids don't hear them - then they should be able to do that. They can live in their own perfect little fantasy world.

The problem is when the incompetent Twitter authoritarians use that filtering-out of Trump or Biden to affect Trump or Biden themselves. I've been blocked by dozens and probably hundreds of people - including lots of blue checks - for showing them wrong. I criticized Shellenberger for cozying up to Dilbert and got blocked. I called out a neighborhood activist for punching way down at the homeless and got blocked. I showed DrPanMD wrong and got blocked. Likewise with ZitoSalena. In fact, both of the last two are infamous for blocking critics. Twitter always sides with the blockers, not the blockees. It always sides with the crowd (ochlocracy), never the voice in the wilderness.

@darkdevildeath thanks for bringing this up and proposing an implementable solution.

I agree this is totally complementary and orthogonal: this feedback mostly acts as an additional content filter, and it clearly improves users' comfort (by reducing the risk of their being manipulated by third parties).

I'm not sure I understand what is preventing this feature from being implemented as soon as possible.