ValueError on TimeSeriesSVR when having input temporal length of more than 405

Describe the bug ValueError when having a long time series input

To Reproduce

import numpy as np

from tslearn.generators import random_walk_blobs

from tslearn.svm import TimeSeriesSVR

X, y = random_walk_blobs(n_ts_per_blob=10, sz=406, d=2, n_blobs=2)

y = y.astype(float) + np.random.randn(20) * .1

reg = TimeSeriesSVR(kernel="gak", gamma="auto")

reg.fit(X, y)

Expected behavior The model should fit without raising an error

Environment (please complete the following information):

- OS: macOS Mojave 10.14.6

- tslearn version 0.3.0

Additional context I'm not sure why this is happening, but the maximum temporal length that does not raise an error is 405.

The issue comes from tslearn.metrics.cdist_gak: the returned array unexpectedly contains NaN values.

import numpy as np

from tslearn.generators import random_walk_blobs

from tslearn.metrics import cdist_gak

X, y = random_walk_blobs(n_ts_per_blob=10, sz=406, d=2, n_blobs=2, random_state=42)

X_new = cdist_gak(X, sigma=1000)

np.isnan(X_new).any()

I can try to find why this is happening.

cdist_gak returns the product of three matrices:

return (diagonal_left.dot(matrix)).dot(diagonal_right)

where matrix contains the pairwise values of unnormalized_gak.

unnormalized_gak calls _gak_gram followed by njit_gak.

If the value for sigma is really high, the values in gram

gram = _gak_gram(s1, s2, sigma=sigma)

will be close to 1.

Then, the value for gak_val

gak_val = njit_gak(s1, s2, gram)

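will be really high (it grows exponentially, roughly like 3 ** n or faster, where n is the number of time points, i.e. n=sz, because each cell of the recursion sums three neighbouring cells). This value overflows the float64 range and becomes np.inf.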
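The growth can be checked without tslearn. This is a minimal sketch, assuming the recursion fills a table in which each cell is the sum of its three already-computed neighbours multiplied by the matching gram entry. `gak_corner` is a hypothetical stand-in, not the library function, and the gram matrix is set to all ones to mimic a very large sigma.

```python
import numpy as np


def gak_corner(gram):
    """Hypothetical stand-in for the unnormalized GAK recursion (assumed form)."""
    l1, l2 = gram.shape
    table = np.zeros((l1 + 1, l2 + 1))
    table[0, 0] = 1.0
    for i in range(l1):
        for j in range(l2):
            # Sum the three neighbouring cells, then weight by the local kernel value.
            neighbours = table[i, j + 1] + table[i + 1, j] + table[i, j]
            table[i + 1, j + 1] = neighbours * gram[i, j]
    return table[l1, l2]


# gram entries equal to 1 everywhere, as happens when sigma is very large
with np.errstate(over="ignore"):
    short = gak_corner(np.ones((300, 300)))
    long_ = gak_corner(np.ones((500, 500)))

print(np.isfinite(short), np.isinf(long_))
```

Under these assumptions the corner value is still finite for 300 time points but has overflowed to inf for 500, which is in line with a threshold of a few hundred time points as reported above.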

Then, when doing the dot product, 0 * np.inf will produce np.nan values.

Then sklearn.svm.SVR is not happy because the precomputed kernel contains NaN values.
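The NaN step can be reproduced with NumPy alone. This is a small sketch, assuming the normalization scales the matrix on both sides by the inverse square root of its diagonal (the shape of the three-matrix product quoted above); the 3x3 matrix of inf values is made up for illustration.

```python
import numpy as np

# Unnormalized kernel matrix whose entries have all overflowed to inf
matrix = np.full((3, 3), np.inf)

# Assumed normalization: 1 / sqrt(diagonal), which is 0 when the diagonal is inf
diagonal = np.diag(1.0 / np.sqrt(np.diag(matrix)))

# 0 * inf is undefined in IEEE 754 arithmetic, so the product is NaN
with np.errstate(invalid="ignore"):
    normalized = diagonal.dot(matrix).dot(diagonal)

print(np.isnan(normalized).all())
```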

sigma is computed using:

- self.gamma_ = gamma_soft_dtw(X)

- sigma=numpy.sqrt(self.gamma_ / 2.)

which can be high for long time series.

To me, there are two possibilities:

- either using a non-normalized version of GAK is not great because it leads to floating point overflow,

- or there is a bug in the code.

But I don't know anything about GAK, so I will let people who know more about it answer.

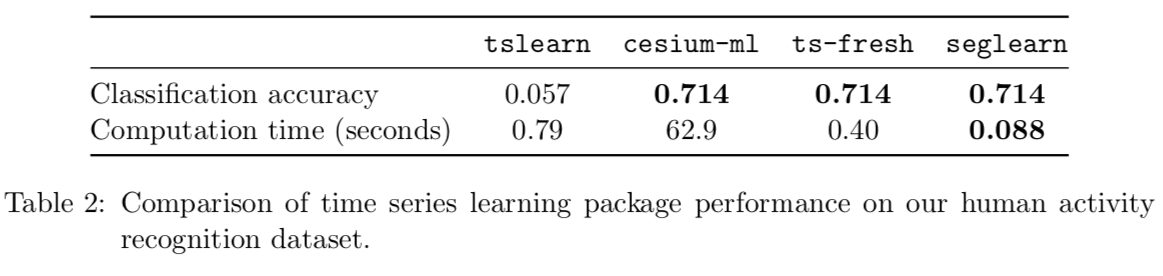

This might explain why the results reported in the seglearn paper were not great.

The same problem arises while using TimeSeriesSVC()

(Maybe obvious)

Yes, that makes sense: both algorithms share the same preprocessing step, which is computing the kernel matrix. The SVR and SVC classes from scikit-learn are then called on that matrix. The issue arises when computing the kernel matrix.

I am also experiencing this issue. Same exact symptoms (the unnormalized gak matrix is all inf) and I indeed have timeseries that have > 500 steps.

The automatic ('auto') computation of gamma can lead to this behavior. Could you try with a smaller value of gamma (or sigma if you are using the GAK functions directly)? It's an important hyper-parameter (like gamma for the RBF kernel), but the computation of the GAK kernel is a bit more complex and the current implementation can lead to overflow issues.

Yep, gamma=0.1 works for my timeseries. Thanks I'll try to keep it low!

I ran into a similar issue, and lowering gamma worked for me. However, an alternative I found was to use scipy.signal.resample to downsample. My timeseries had 800 values and downsampling to 400 (allowing gamma='auto') achieved higher classification performance, compared to setting a low gamma.
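For anyone who wants to try the downsampling route, here is a rough sketch. It assumes the data is in tslearn's (n_ts, sz, d) layout, so the time axis is axis=1; the random-walk array is a made-up stand-in for real data.

```python
import numpy as np
from scipy.signal import resample

# Stand-in dataset: 20 series, 800 time steps, 2 dimensions
rng = np.random.default_rng(0)
X = rng.standard_normal((20, 800, 2)).cumsum(axis=1)

# Resample along the time axis (axis=1) from 800 down to 400 steps
X_short = resample(X, 400, axis=1)

print(X_short.shape)
```

Note that scipy.signal.resample uses a Fourier method and treats each series as periodic, so series whose start and end values differ a lot may show edge artifacts.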

The most confusing thing for me about the errors generated when the 'gak' kernel matrix has bad values in it is that the error message reports that the issue is with the data. I spent a long time checking and double-checking that my X data did not in fact contain any NaNs. Perhaps a warning should be generated in the pipeline that points to a poorly formed kernel instead?
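Something along these lines might work as a check before the kernel is handed to scikit-learn. This is only a sketch: `check_precomputed_kernel` is a hypothetical helper, not an existing tslearn or scikit-learn function, and the message wording is just a suggestion.

```python
import numpy as np


def check_precomputed_kernel(K):
    """Hypothetical helper: fail early with a message that names the kernel."""
    K = np.asarray(K, dtype=float)
    bad = ~np.isfinite(K)
    if bad.any():
        raise ValueError(
            f"Precomputed kernel matrix contains {int(bad.sum())} NaN or "
            "infinite entries. The input data may be fine; this can come "
            "from overflow in the kernel. Try a smaller gamma."
        )
    return K


try:
    check_precomputed_kernel(np.full((2, 2), np.nan))
except ValueError as exc:
    message = str(exc)
    print(message)
```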