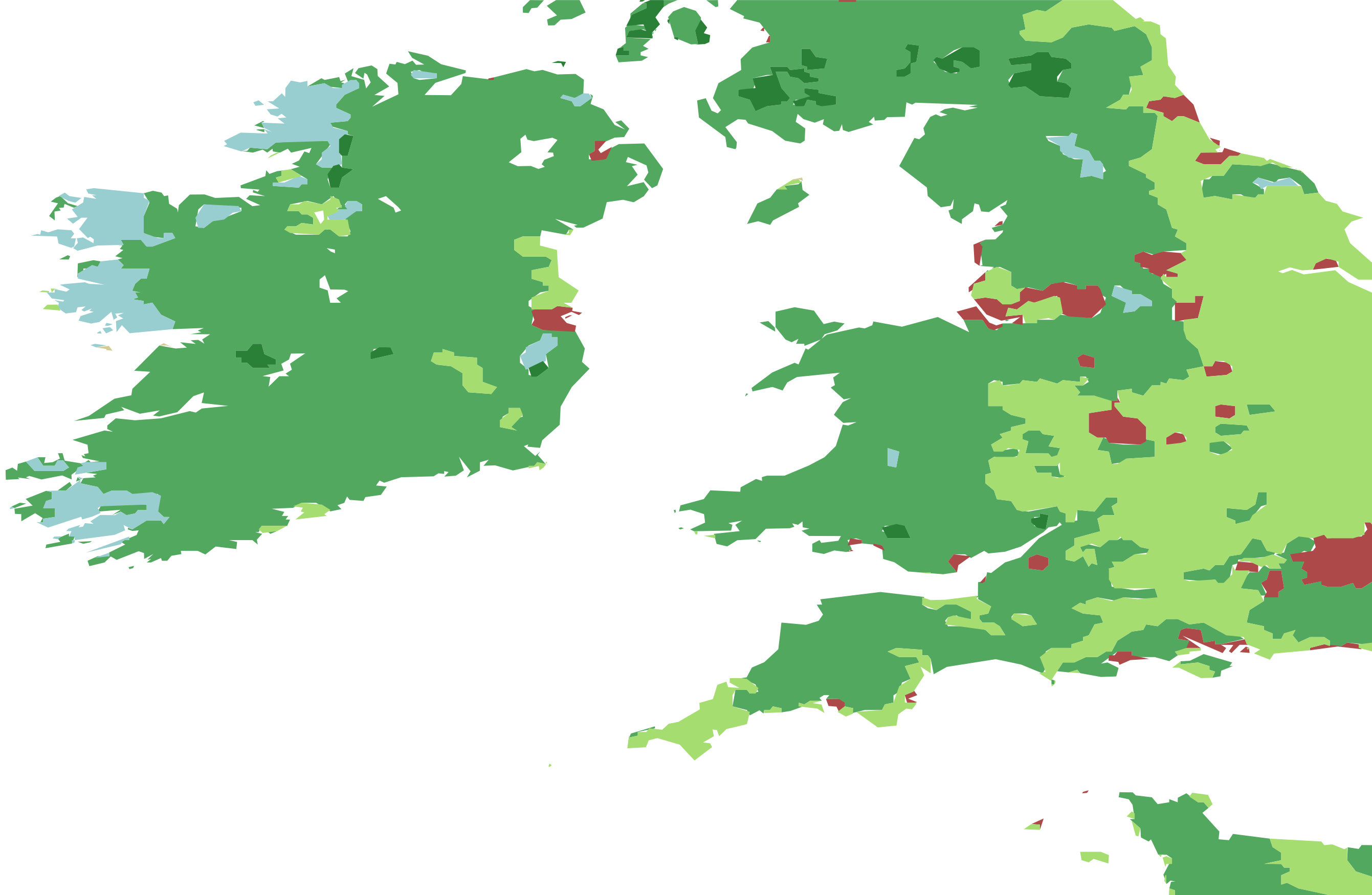

Missing landcover "green" areas at low- and mid-zooms

OpenStreetMap provides good landuse coverage in some countries, but not globally. Investigate bringing in another open dataset at zooms ~0-13 to provide global coverage in the same schema, namely Marble Cutter's landcover config.

We'd keep landuse like park from OSM, but landcover like forest and grass would come from Marble Cutter. The raster landcover will need to be vectorized. This landcover will end up in the landuse layer.

Similar to https://github.com/tilezen/vector-datasource/issues/1885, but not a breaking change: this one keeps everything in a single layer, whereas that issue proposes splitting the data apart (and probably better merging between the two datasets across the zoom 13/14 crossover).

See also:

- https://github.com/mojodna/marblecutter/issues/102

- https://github.com/mojodna/marblecutter/issues/103

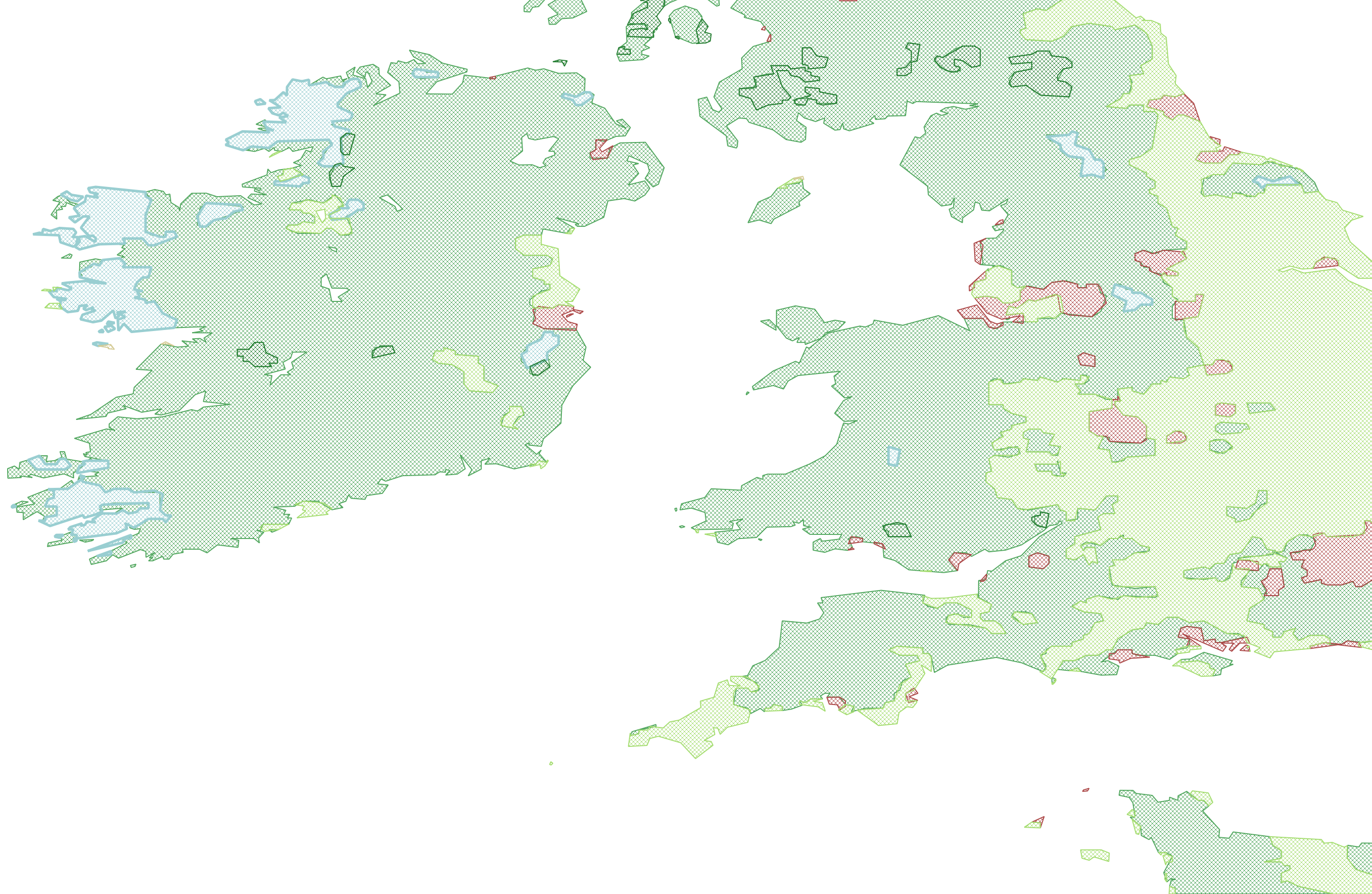

This is (very roughly) implemented as a series of passes:

- Download and stitch together raster PNG landcover tiles.

- Apply a filter which "dilates" or "buffers" the non-water pixel types (`wetland` is not considered water) out into the water. This, combined with the later clipping, should mean that landcover polygons don't overlap water or vice-versa.

- Apply a filter which chooses the modal (i.e. most frequent) pixel in a neighbourhood. This helps to consolidate pixels into blocks of solid colour, as the raw landcover can contain a lot of single-pixel blobs or areas which look "dithered" or half-toned.

- Apply GDAL's "sieve" filter, which removes blobs smaller than a certain threshold. This helps to get rid of any remaining dots after the modal filter.

- Apply GDAL's raster polygonize function. This gives us polygons for every landcover type, and we drop the water.

- Clip all the landcover polygons against the water layer, which came from NaturalEarth or OSM depending on the zoom level.
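The sieve pass is easy to confuse with the modal filter, so here is a minimal pure-Python sketch of the idea, for illustration only. It assumes the stitched raster is a list of rows of integer class codes and uses a simplified rule: a blob smaller than the threshold takes the most common value along its border. GDAL's own `SieveFilter` instead replaces each small region with the value of its largest neighbouring region; `sieve` and `threshold` here are hypothetical names, not code from the branch.

```python
from collections import Counter, deque


def sieve(grid, threshold):
    """Replace 4-connected blobs smaller than `threshold` pixels with the
    value most common along the blob's border (weighted by shared edges).

    Simplified stand-in for GDAL's SieveFilter; returns a new grid.
    """
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    seen = [[False] * cols for _ in range(rows)]
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0]:
                continue
            # Flood-fill the blob containing (r0, c0) in the original grid.
            value = grid[r0][c0]
            blob, border = [], Counter()
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                blob.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if not (0 <= nr < rows and 0 <= nc < cols):
                        continue
                    if grid[nr][nc] == value:
                        if not seen[nr][nc]:
                            seen[nr][nc] = True
                            queue.append((nr, nc))
                    else:
                        # Count each edge shared with a different class.
                        border[grid[nr][nc]] += 1
            # Small blobs are absorbed into their dominant neighbour.
            if len(blob) < threshold and border:
                replacement = border.most_common(1)[0][0]
                for r, c in blob:
                    out[r][c] = replacement
    return out
```

For example, `sieve(grid, 2)` turns a lone `2` pixel surrounded by `1`s into a `1`, while leaving larger blobs alone.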

As part of the usual Tilezen pipeline, the polygons are later simplified. This opens up some "cracks" between polygons, as can be seen if we look closely at polygon boundaries:

@meetar had some suggestions about how we could fix those, so I'll see if I can implement those.

I've since been able to wrangle this into working Docker images and do a partially successful global build. The changes to tileops are in the zerebubuth/1905-landcover branch, corresponding to the zerebubuth/1905-landcover branch here in vector-datasource.

This means we've been able to get a mostly-built version of the low zoom tiles with landcover polygons. These have temporarily been assigned `kind: landcover` and a `kind_detail` of one of:

- `barren`

- `cultivated`

- `desert`

- `developed`

- `forest`

- `glacier`

- `herbaceous`

- `shrubland`

- `wetland`

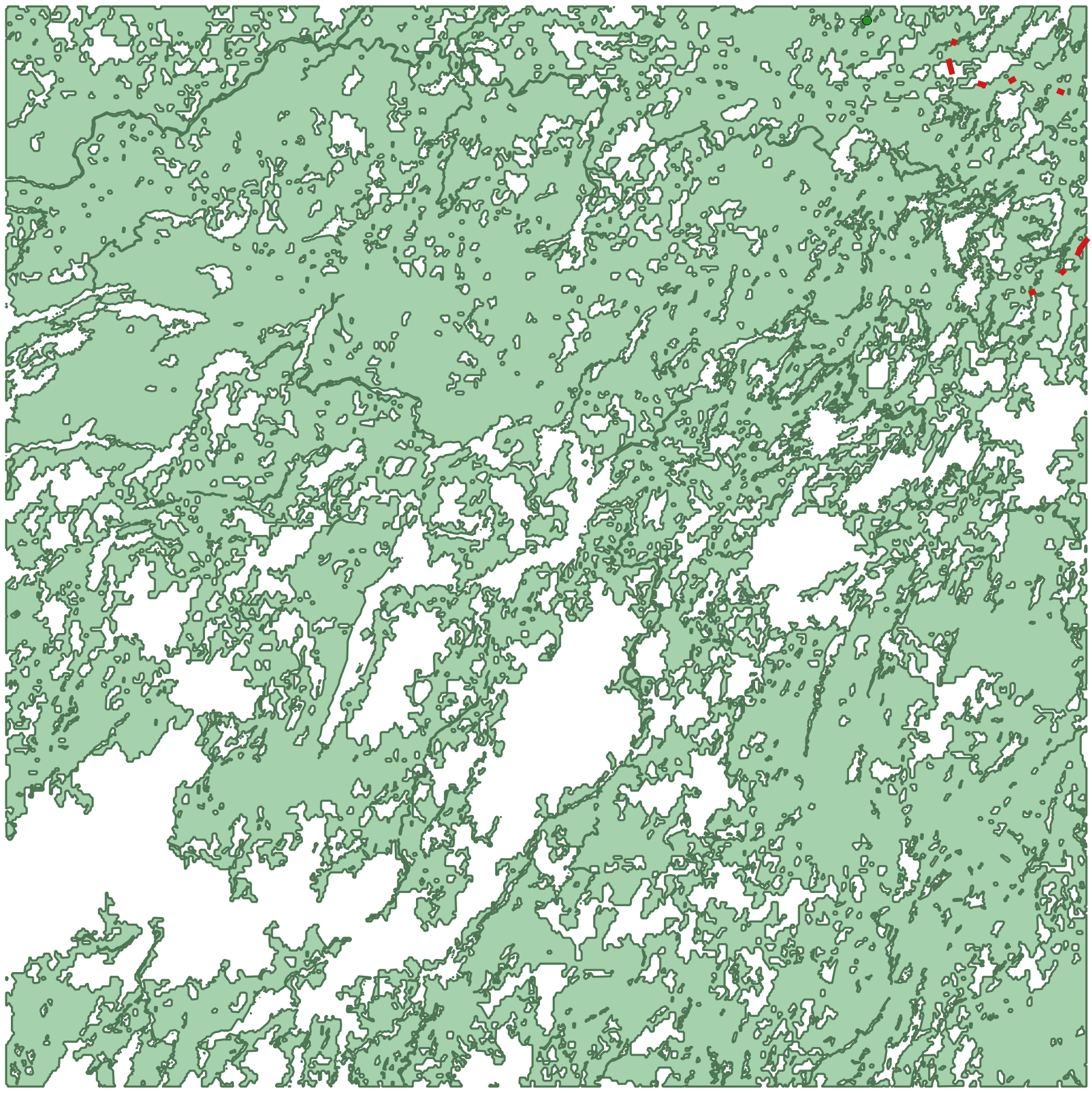

The results look pretty good in many places; however, there are still many issues with the code and results. For example, the landcover polygonization can produce extremely complex polygons:

(That's the metatile for job 8/74/87, which is in upstate Quebec. It looks very detailed, but remember it's for an 8x8 grid of tiles.)
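To sanity-check where a job coordinate such as 8/74/87 falls, the standard slippy-map (Web Mercator) tile-to-longitude/latitude conversion can be used. This is a minimal sketch: `tile_bounds` is a hypothetical helper, and it assumes the metatile's footprint is the same as the z8 tile with the job's coordinates.

```python
import math


def tile_bounds(z, x, y):
    """Return (west, south, east, north) in degrees for slippy-map tile z/x/y,
    using the standard Web Mercator tiling formula."""
    n = 2 ** z

    def lon(xt):
        return xt / n * 360.0 - 180.0

    def lat(yt):
        # Inverse Web Mercator: tile row -> latitude.
        return math.degrees(math.atan(math.sinh(math.pi * (1 - 2 * yt / n))))

    # Tile rows increase southwards, so y + 1 is the southern edge.
    return lon(x), lat(y + 1), lon(x + 1), lat(y)
```

For 8/74/87 this gives roughly 75.9°W to 74.5°W and 48.9°N to 49.8°N, which is indeed in Quebec.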

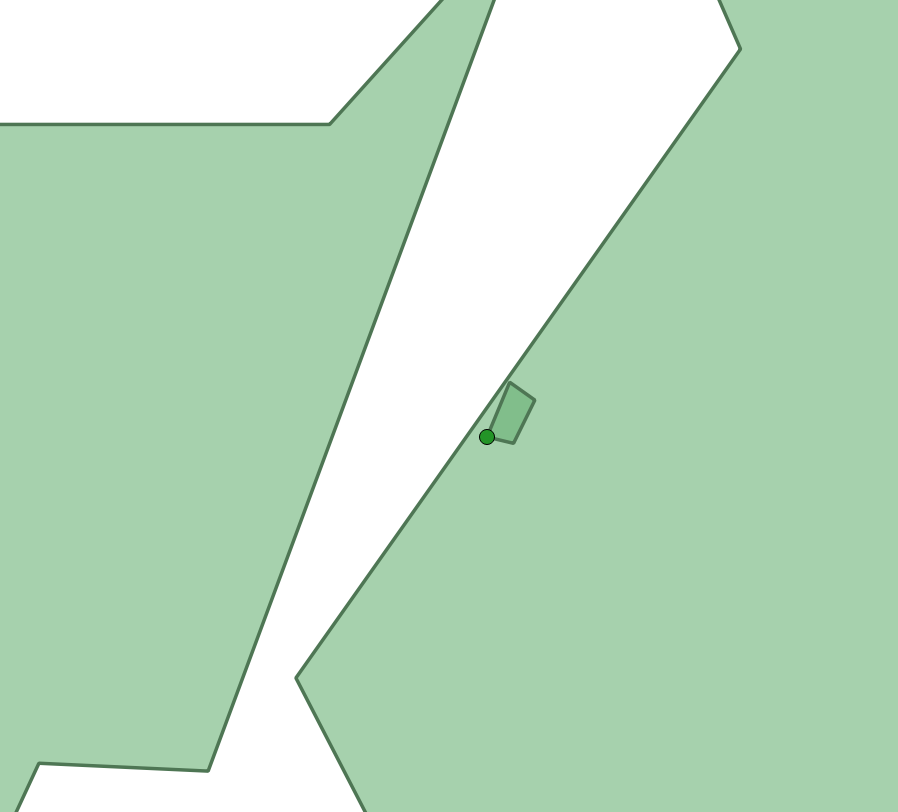

The complexity of the polygon means it's very good at highlighting bugs, e.g. incorrect assumptions about (multi)polygon validity. That produces output like this (a zoomed-in view of the same polygon above):

This shows an outer ring of one polygon in the multipolygon lying inside the outer ring of another, which is invalid. It was probably caused when a line segment that curved around it was simplified to a straight line. However, even with that fixed, we're still getting a number of other topology errors, so there are still bugs to be found.
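This particular kind of invalidity (one polygon's outer ring nested inside another's) can be detected with a point-in-polygon test. A minimal pure-Python sketch, assuming each polygon is a list of rings `[exterior, *holes]`, each ring a list of `(x, y)` tuples, and that rings never cross one another, so testing a single vertex suffices. The function names are hypothetical; in practice a GEOS validity check (e.g. Shapely's `is_valid`) would be the more thorough option.

```python
def point_in_ring(pt, ring):
    """Even-odd ray casting: is `pt` inside the closed `ring`?
    Points exactly on the boundary may go either way."""
    x, y = pt
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does this edge straddle the horizontal line through pt?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def nested_outer_rings(multipolygon):
    """Return (i, j) pairs where polygon i lies inside polygon j's area.

    An island sitting inside one of j's holes is valid and is not reported.
    """
    pairs = []
    for i, poly_i in enumerate(multipolygon):
        probe = poly_i[0][0]  # any vertex of i's exterior ring
        for j, poly_j in enumerate(multipolygon):
            if i == j:
                continue
            exterior, holes = poly_j[0], poly_j[1:]
            if point_in_ring(probe, exterior) and not any(
                point_in_ring(probe, hole) for hole in holes
            ):
                pairs.append((i, j))
    return pairs
```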

Extra TODOs that need to be addressed after v0 is working:

- Implement @meetar's idea to make sure there are no gaps between polygons. Please correct me if I'm getting this wrong, but the central idea is to treat the largest landcover type as the background, and simplify other landcover polygons before subtracting them from the background. This should mean any gaps are the "background colour", so it might need some modification to "donate" small, isolated slivers to neighbouring landcover types.

- Instead of downloading all the rasters during tile post-processing, do it up-front and create a vector dataset for each zoom level. These could then be QA'd separately to ensure that no chunks of polygon had gone missing or been broken by `buffer(0)`. The result could be bundled in the static assets and use the same import pipeline as other shapefiles. Additional benefits: not needing to call an external API reduces the chance of 502s and consequent retries, and we wouldn't need to figure out how to disable the post-process stage during unit/integration testing.

- The current implementation of the "coastline dilation" and modal filtering is in pure Python and very slow. It seems likely it would be considerably faster if the filters were re-implemented as `numpy` operations. Along the same lines, the current implementation collects tiles in a `PIL.Image` and then translates that to a GDAL `Dataset`, but (TIL) the `Dataset` API includes a `WriteRaster` function which could be used to build the full image directly without `PIL`. I'm not sure this would be much faster, but it would cut down on memory allocations and probably make the code less complex.
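A minimal sketch of what a `numpy` version of the two filters might look like, assuming the stitched landcover is a 2D integer array of class codes with a single hypothetical `WATER` code (so `wetland` stays a land class). Both filters work on a 3x3 window built from shifted copies of the padded array, so there is no per-pixel Python loop. `neighbourhood`, `modal`, `dilate_into_water` and `modal_filter` are illustrative names, not functions from the branch.

```python
import numpy as np

WATER = 0  # hypothetical class code for water; real codes depend on the raster


def neighbourhood(a, radius=1):
    """Stack of shifted copies of `a`, one per offset in a (2r+1)x(2r+1)
    window. Edges are padded by repeating the border pixels."""
    padded = np.pad(a, radius, mode="edge")
    h, w = a.shape
    size = 2 * radius + 1
    return np.stack([
        padded[dy:dy + h, dx:dx + w]
        for dy in range(size)
        for dx in range(size)
    ])


def modal(stack, classes, ignore=None):
    """Per-pixel most frequent value along axis 0 of `stack`, plus its count.
    Values equal to `ignore` are not counted; ties go to the earlier class."""
    classes = [c for c in classes if c != ignore]
    counts = np.stack([(stack == c).sum(axis=0) for c in classes])
    return np.asarray(classes)[counts.argmax(axis=0)], counts.max(axis=0)


def dilate_into_water(a, classes):
    """Water pixels touching land take the most common neighbouring land class."""
    best, count = modal(neighbourhood(a), classes, ignore=WATER)
    return np.where((a == WATER) & (count > 0), best, a)


def modal_filter(a, classes):
    """Replace every pixel with the most frequent class in its 3x3 window."""
    best, _ = modal(neighbourhood(a), classes)
    return best
```

Counting each class separately costs one pass per class, which should be cheap with only the handful of landcover classes listed above. A single dilation pass grows land by one pixel, so a wider buffer would repeat it.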

> treat the largest landcover type as the background, and simplify other landcover polygons before subtracting them

This is very close. My initial idea was that, assuming there's landcover data for the entire planet, you'd start with the entire earth layer as the background and subtract each landcover type from that. But it seems you already have a process that's working there.

My other assumption was that starting by subtracting the largest landcover type would make for the most expedient procedure, though that's just intuition on my part. Now that I think about it more, starting with the least detailed layer might be better: if you subtract a complex layer first, you create a more complex background shape, which increases the complexity of every subsequent subtraction operation.

PostGIS 3.0 has been released (blog post).

- 4181, ST_AsMVTGeom: Avoid type changes due to validation (Raúl Marín)

- 4183, ST_AsMVTGeom: Drop invalid geometries after simplification (Raúl Marín)

- 4348, ST_AsMVTGeom (GEOS): Enforce validation at all times (Raúl Marín)

These are related to the also-new GEOS 3.8.0, which is supposed to address many invalid-polygon issues:

CARTO also has a blog post.

> One core enhancement that many users will appreciate actually comes from GEOS 3.8, the underlying library that PostGIS uses. In essence, we can now expect fewer topological errors and fewer instances of the infamous “self-intersecting” error, an improvement that lots of GIS users will love to hear.