[Experiment] Squeeze and Excitation

Trying out Squeeze and Excitation.

Looks really good.

Code: https://github.com/tensorflow/minigo/pull/673 (Brian, it would be nice if you could take a look)

Inspirations:

- https://arxiv.org/abs/1709.01507

- https://github.com/LeelaChessZero/lczero-training/blob/master/tf/tfprocess.py#L533
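For readers new to the idea, here is a minimal NumPy sketch of one SE block as described in the arXiv paper above. It squeezes the board to one number per channel with a global average, passes that through a small bottleneck MLP (reduction ratio `r`), and uses the sigmoid output to rescale each channel. This is not the code from the PR. The NHWC layout, the function name `squeeze_excite`, and the random weights are assumptions for illustration. In an SE-ResNet the block is applied to the residual branch before it is added back to the shortcut.

```python
import numpy as np


def squeeze_excite(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation on a batch of NHWC feature maps.

    x:  (N, H, W, C) activations, e.g. the residual branch of a block
    w1: (C, C // r), b1: (C // r,)  bottleneck layer
    w2: (C // r, C), b2: (C,)       expansion layer
    """
    # Squeeze: global average pool over the board -> one value per channel.
    s = x.mean(axis=(1, 2))                      # (N, C)
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a gate in (0, 1) per channel.
    z = np.maximum(s @ w1 + b1, 0.0)             # (N, C // r)
    gate = 1.0 / (1.0 + np.exp(-(z @ w2 + b2)))  # (N, C)
    # Scale: reweight each channel of x, broadcasting the gate over H and W.
    return x * gate[:, None, None, :]


rng = np.random.default_rng(0)
C, r = 8, 4  # toy sizes; real networks use far more channels
x = rng.standard_normal((2, 19, 19, C))
w1, b1 = rng.standard_normal((C, C // r)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C // r, C)), np.zeros(C)
y = squeeze_excite(x, w1, b1, w2, b2)
print(y.shape)  # (2, 19, 19, 8)
```

Because every gate lies between 0 and 1, the block can only attenuate channels relative to each other; it never changes the spatial pattern within a channel.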

Results

Trained 6 networks, 2 each of baseline, Squeeze and Excitation (SE), and SE + bias.

- TensorBoard shows lower error in all metrics

- Played 40 games for each of the last 5 checkpoints against a fixed external engine (LZ60)

The TensorFlow code was very slow at inference. Someone mentioned that average pooling is slower than `reduce_mean`; I investigated, but the slowdown appeared and disappeared and I'm not sure why.
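For reference, global average pooling and a spatial mean compute the same thing when the pooling window covers the whole board, so a speed difference between them should come from how the ops are implemented or placed, not from the math. The NumPy sketch below checks that equivalence; the helper `avg_pool_valid`, the NHWC layout, and the 19x19 board are assumptions for illustration. In TensorFlow, the spatial mean is what `tf.reduce_mean` over the H and W axes computes.

```python
import numpy as np


def avg_pool_valid(x, k):
    """Naive k x k average pooling, stride k, 'VALID' padding, NHWC layout."""
    n, h, w, c = x.shape
    out = np.empty((n, h // k, w // k, c))
    for i in range(h // k):
        for j in range(w // k):
            window = x[:, i * k:(i + 1) * k, j * k:(j + 1) * k, :]
            out[:, i, j, :] = window.mean(axis=(1, 2))
    return out


x = np.random.default_rng(1).standard_normal((2, 19, 19, 8))
# A 19x19 window over a 19x19 board collapses the spatial axes to 1x1 ...
pooled = avg_pool_valid(x, 19)
# ... which matches a plain mean over H and W.
reduced = x.mean(axis=(1, 2), keepdims=True)
print(pooled.shape, np.allclose(pooled, reduced))  # (2, 1, 1, 8) True
```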

The paper's official repository: https://github.com/hujie-frank/SENet

Some links I used to profile performance:

add_run_metadata from: https://www.tensorflow.org/guide/graph_viz

Chrome tracing from: https://towardsdatascience.com/howto-profile-tensorflow-1a49fb18073d

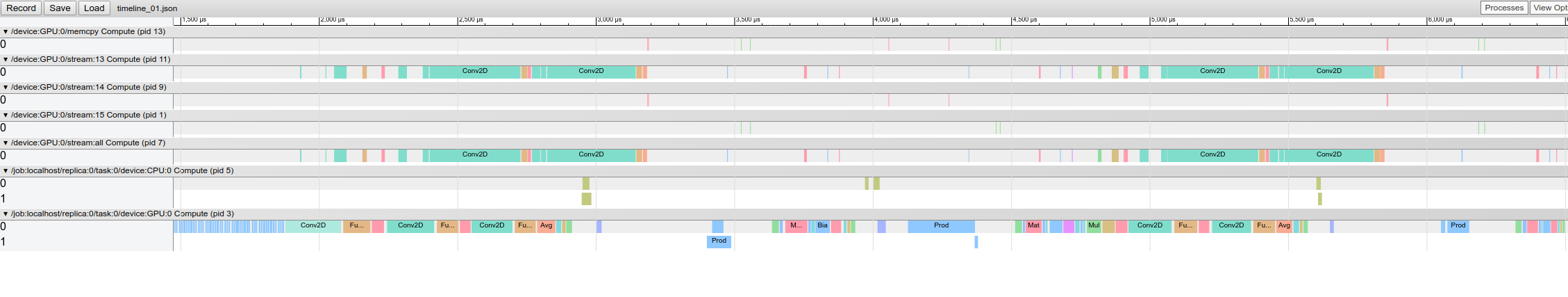

Chrome trace of SE:

timeline_01.txt (a .json file renamed for git)
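A small helper for digging through a trace like this one without opening Chrome: it totals the duration of each event name. It assumes the file follows the Chrome Trace Event format, where complete events have `"ph": "X"` and a `dur` in microseconds. The helper name `summarize_trace` and the toy events are made up for illustration.

```python
from collections import defaultdict


def summarize_trace(trace, top=10):
    """Total time per event name (microseconds) from a Chrome trace dict."""
    totals = defaultdict(float)
    for event in trace.get("traceEvents", []):
        # Complete events ("ph": "X") carry a duration; metadata events do not.
        if event.get("ph") == "X":
            totals[event.get("name", "?")] += event.get("dur", 0)
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:top]


# Tiny hand-made trace for illustration; on a real run you would
# json.load() the timeline file instead.
example = {
    "traceEvents": [
        {"ph": "M", "name": "process_name", "pid": 0, "args": {"name": "GPU"}},
        {"ph": "X", "name": "Conv2D", "ts": 0, "dur": 120, "pid": 0, "tid": 0},
        {"ph": "X", "name": "Mean", "ts": 120, "dur": 30, "pid": 0, "tid": 0},
        {"ph": "X", "name": "Conv2D", "ts": 150, "dur": 110, "pid": 0, "tid": 0},
    ]
}
for name, micros in summarize_trace(example):
    print(f"{name:10s} {micros:8.0f} us")
```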

@sethtroisi From a quick survey of the paper, the Squeeze-Excite (SE) approach looks very similar to what @lightvector has been doing with global properties. The main difference seems to be that SE only considers average pooling (though the authors suggest other aggregations), while the latter suggests that max pooling might also be useful.

See https://github.com/lightvector/GoNN#update-oct-2018 for further reading on his research into the topic. He also discusses a number of other topics you might find inspiring for similar enhancements.
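To make the average-vs-max point concrete, here is a hedged NumPy sketch of a squeeze step that concatenates global average and global max pooling before the excitation layers, so the first layer sees `2C` inputs instead of `C`. It only illustrates the idea discussed above and is not lightvector's actual architecture; the function names and shapes are assumptions.

```python
import numpy as np


def global_pool_mean_max(x):
    """Squeeze step that keeps both the average and the max of each channel.

    x: (N, H, W, C) -> (N, 2C), average features first, then max features.
    """
    return np.concatenate([x.mean(axis=(1, 2)), x.max(axis=(1, 2))], axis=-1)


def excite_mean_max(x, w1, b1, w2, b2):
    """SE-style gating fed by mean+max pooling; w1 needs 2C rows, w2 has C columns."""
    z = np.maximum(global_pool_mean_max(x) @ w1 + b1, 0.0)
    gate = 1.0 / (1.0 + np.exp(-(z @ w2 + b2)))
    return x * gate[:, None, None, :]


# One strong activation on an otherwise empty 19x19 board: the average
# washes it out (361 / 361 points = 1.0) while the max keeps it (361.0).
C = 4
x = np.zeros((1, 19, 19, C))
x[0, 3, 3, 0] = 361.0
print(global_pool_mean_max(x)[0, [0, C]])
```

The example shows why max pooling can carry information that averaging hides: a single local feature, such as one group in atari, barely moves the board-wide mean.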

What do you want to do with this issue, now that we're doing it? :)

I'm planning to include details from v17 in this issue, then we'll close it out.

Cross-eval shows v17 as much stronger, which I'm going to attribute 80% to this change!!!

great

What's the computational cost (if any) of SE versus non-SE, holding number of blocks constant?

+2% on TPU for training, +1% for inference.

On my personal machine I had to pin some operations to the GPU, or it was 2x slower.
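A rough way to see why the overhead is small is to count multiply-adds. The two 3x3 convolutions in a residual block run at every board point, while the SE fully connected layers run once per board. The sketch below ignores biases, batch norm, pooling, and activations, and the 256-channel, `r = 8` example values are hypothetical rather than the actual v17 configuration.

```python
def se_overhead(channels, reduction, board=19, with_bias=False):
    """Ratio of SE multiply-adds to conv multiply-adds for one residual block.

    Rough count only: ignores biases, batch norm, pooling, activations and
    the broadcast multiply that applies the gate.
    """
    hidden = channels // reduction
    # Two 3x3 convolutions with C inputs and C outputs, run at every board point.
    conv_macs = 2 * 3 * 3 * channels * channels * board * board
    # Two small FC layers, run once per board. The SE + bias variant's second
    # layer is assumed to emit gamma and beta, i.e. 2C outputs.
    outputs = 2 * channels if with_bias else channels
    se_macs = channels * hidden + hidden * outputs
    return se_macs / conv_macs


# Hypothetical example sizes, not the actual v17 configuration.
print(f"{se_overhead(256, 8):.2e}")                  # 3.85e-05
print(f"{se_overhead(256, 8, with_bias=True):.2e}")  # 5.77e-05
```

By this count the SE arithmetic is roughly 0.004% of the convolutions, so the measured +1-2% presumably comes from costs this model ignores, such as extra passes over memory for pooling and the broadcast multiply.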

They posted another paper; I don't know whether it's relevant to Go: Gather-Excite: Exploiting Feature Context in Convolutional Neural Networks

Jie Hu, Li Shen, Samuel Albanie, Gang Sun, Andrea Vedaldi https://arxiv.org/abs/1810.12348

@sethtroisi, you might want to update your LeelaChessZero link to: https://github.com/LeelaChessZero/lczero-training/blob/master/tf/tfprocess.py#L645

What if a bias is used instead of weighting (gamma)? Can we confirm that the bias alone wouldn't already give the same improvement as having both?
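One way to set up that ablation, as a minimal sketch: keep the same squeeze and first layer, and vary only what the second layer's output does. It assumes "SE + bias" means the second layer emits a gate `gamma` and an additive `beta`, combined as `sigmoid(gamma) * x + beta`, which is how the linked lczero code appears to do it. The function `se_variant` and its `mode` names are hypothetical.

```python
import numpy as np


def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))


def se_variant(x, w1, b1, w2, b2, mode="scale"):
    """SE-style channel modulation for a hypothetical gamma/beta ablation.

    mode="scale":      y = sigmoid(gamma) * x         (plain SE)
    mode="bias":       y = x + beta                   (bias only, no gating)
    mode="scale_bias": y = sigmoid(gamma) * x + beta  (SE + bias)
    w2 and b2 must give C outputs, or 2C (gamma, then beta) for "scale_bias".
    """
    c = x.shape[-1]
    # Shared squeeze (global average) and bottleneck layer.
    z = np.maximum(x.mean(axis=(1, 2)) @ w1 + b1, 0.0)
    # Second layer output, reshaped to broadcast over the board: (N, 1, 1, C or 2C).
    h = (z @ w2 + b2)[:, None, None, :]
    if mode == "scale":
        return x * sigmoid(h)
    if mode == "bias":
        return x + h
    if mode == "scale_bias":
        gamma, beta = h[..., :c], h[..., c:]
        return x * sigmoid(gamma) + beta
    raise ValueError(f"unknown mode: {mode}")


rng = np.random.default_rng(0)
C, r = 8, 4
x = rng.standard_normal((2, 19, 19, C))
w1, b1 = np.zeros((C, C // r)), np.zeros(C // r)
# With zero weights the second layer's output is just b2,
# which makes each mode easy to check by hand.
print(np.allclose(se_variant(x, w1, b1, np.zeros((C // r, C)), np.ones(C), "bias"), x + 1.0))  # True
```

The bias-only mode shifts each channel by a board-wide, input-dependent offset instead of rescaling it, which is exactly the difference the question is about; whether that alone recovers the gain would need training runs like the ones above.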