Buffer overflow in Database.run()

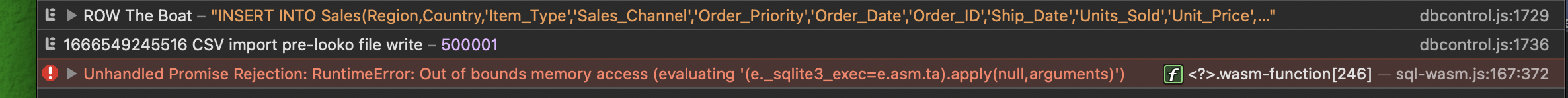

If a large enough string (in my case, 5k INSERT statements) is passed to `Database.run()`, it causes a buffer overflow and some kind of memory corruption. Afterwards, every sql.js function fails with a "memory access out of bounds" error.

Either the input length should be unlimited, or the limit should be clearly documented and handled gracefully instead of corrupting memory.

My workaround is to split the input into individual statements and run them one at a time, which is a bit slower because of the added per-call overhead.
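A minimal sketch of that workaround, assuming the statements are already available as an array of separate strings (splitting one big SQL string on `;` would break on semicolons inside string literals). The `BEGIN TRANSACTION`/`COMMIT` wrapper is an addition not mentioned above: in SQLite, running all the statements inside one transaction avoids a separate commit per statement, which may recover some of the lost speed. `db` stands for a sql.js `Database`; the helper name `runOneAtATime` is hypothetical.

```javascript
// Hypothetical helper: run SQL statements one at a time instead of one huge string.
// Assumes `db` is a sql.js Database (anything with a run(sql) method works here)
// and that `statements` is an array of complete, separate statements.
function runOneAtATime(db, statements) {
  db.run("BEGIN TRANSACTION"); // one transaction for the whole import
  try {
    for (const sql of statements) {
      db.run(sql); // each call only ever sees a single short statement
    }
    db.run("COMMIT");
  } catch (err) {
    try {
      db.run("ROLLBACK");
    } catch (_) {
      // SQLite may already have rolled the transaction back on some errors
    }
    throw err;
  }
}
```

If any statement fails, the partial import is rolled back and the original error is rethrown to the caller.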

Batching the insert statements would certainly help; I'm not sure it's realistic to expect unlimited input. If you need a streaming database, ksqlDB or something similar might suit your use case better.

I ran into a similar problem with a single INSERT statement of 5k+ lines.

Yup, there appears to be a limit somewhere.

I was using `db.run(sqlstr);`, and an `sqlstr` of up to 3693 rows worked OK, but anything above that failed.

I was using sql-wasm on Tauri and Neutralino, and both environments gave the same error. If I wrote `sqlstr` out to a text file first, it handled a far larger string (I tested up to 500K rows and it worked, albeit a bit slowly), so machine memory is not the problem.

I'll probably have to try the batching trick.

This is definitely a bug in sql.js that should be fixed, but as a side note: if you are inserting a lot of data into a database, you should use prepared statements, not very long SQL strings.
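A minimal prepared-statement sketch, assuming a sql.js `Database` named `db` and a hypothetical table `items(name, qty)`. It uses sql.js's `Database.prepare`, `Statement.run` (bind, step and reset in one call) and `Statement.free`; the transaction wrapper and the `insertRows` helper name are illustrative additions, not something proposed in the thread.

```javascript
// Hypothetical helper: insert many rows through one prepared statement.
// Assumes `db` is a sql.js Database and a table `items(name, qty)` exists.
function insertRows(db, rows) {
  const stmt = db.prepare("INSERT INTO items (name, qty) VALUES (?, ?)");
  db.run("BEGIN TRANSACTION");
  try {
    for (const row of rows) {
      // Values are bound to the placeholders, not concatenated into SQL text
      stmt.run([row.name, row.qty]);
    }
    db.run("COMMIT");
  } catch (err) {
    try {
      db.run("ROLLBACK");
    } catch (_) {
      // the transaction may already have been rolled back by SQLite
    }
    throw err;
  } finally {
    stmt.free(); // release the statement's memory on the WASM side
  }
}
```

Because each row's values are bound rather than pasted into the query, the SQL text stays the same short string no matter how many rows are inserted, and no quoting or escaping of the values is needed.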

Yup, I agree about the prepared statements. I just tried some batching on a 500K-row CSV import and it works OK; I chunked it at 3600 rows. My databases will be quite small, but it's nice to know that sql.js has a wee bit of horsepower.
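The batching approach described above could look roughly like this. It assumes each element of `statements` is one complete row-level statement ending in `;`, and relies on sql.js's `Database.run` accepting several `;`-separated statements when no bind parameters are passed. The default of 3600 is simply the batch size reported to work in this thread, not a documented limit; `chunk` and `runInBatches` are hypothetical helper names.

```javascript
// Split an array into consecutive slices of at most `size` items.
function chunk(items, size) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const out = [];
  for (let i = 0; i < items.length; i += size) {
    out.push(items.slice(i, i + size));
  }
  return out;
}

// Hypothetical helper: send per-row statements to db.run() in moderate batches
// instead of one huge string. Each statement is assumed to end with ";".
// 3600 is the batch size reported to work in this thread, not a known limit.
function runInBatches(db, statements, batchSize = 3600) {
  for (const batch of chunk(statements, batchSize)) {
    db.run(batch.join("\n")); // one moderately sized SQL string per call
  }
}
```

Since the actual limit was not identified in the thread, a batch size comfortably below the observed failure point (around 3693 rows in one report) leaves some margin.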