Make Bigtable partition size configurable

Bigtable is hardcoded to use partitions roughly 4 billion wide, based on the timestamp. While this value can't really be changed for an existing cluster, it should be configurable for new clusters.

As part of this, we should document how to choose a good partition size based on the expected usage.

Currently, the Bigtable partition period is set to `0x100_000_000L`, which equates to roughly 50 days of values contained in a single row.
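To make the arithmetic concrete, here is a minimal sketch of how the period maps to wall-clock time and how a timestamp could be mapped to the start of its partition. It assumes timestamps are epoch milliseconds, which is what makes 2^32 come out to about 50 days. The class and the `base` helper are hypothetical names for illustration, not Heroic's actual code.

```java
import java.time.Duration;

public class PartitionPeriod {
    // The hardcoded period quoted above: 2^32 = 4,294,967,296.
    public static final long PERIOD = 0x100_000_000L;

    // Assumption: the period is expressed in milliseconds.
    public static Duration asDuration(long periodMillis) {
        return Duration.ofMillis(periodMillis);
    }

    // Hypothetical helper: start of the partition that contains the timestamp.
    // floorMod keeps the result correct for negative timestamps as well.
    public static long base(long timestampMillis, long periodMillis) {
        return timestampMillis - Math.floorMod(timestampMillis, periodMillis);
    }

    public static void main(String[] args) {
        Duration d = asDuration(PERIOD);
        // 4,294,967,296 ms is a little under 50 days.
        System.out.printf("%d ms = %d days %d hours%n",
                PERIOD, d.toDays(), d.toHoursPart());

        long now = System.currentTimeMillis();
        System.out.printf("timestamp %d falls in the partition starting at %d%n",
                now, base(now, PERIOD));
    }
}
```

Making the period configurable would mean replacing the `PERIOD` constant with a per-cluster setting that is fixed when the cluster is created.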

This value made more sense when Spotify had datacenters and servers that rarely changed, since it is more efficient to fetch a single row.

When Heroic started supporting resource identifiers for monitoring GKE containers, which have a higher churn rate, this partition size began causing us to scan over a very large range. Most of the rows being returned are empty, since 75% of GKE containers are less than 7 days old and 57% are less than 1 day old. Lowering the partition size would make this more efficient.

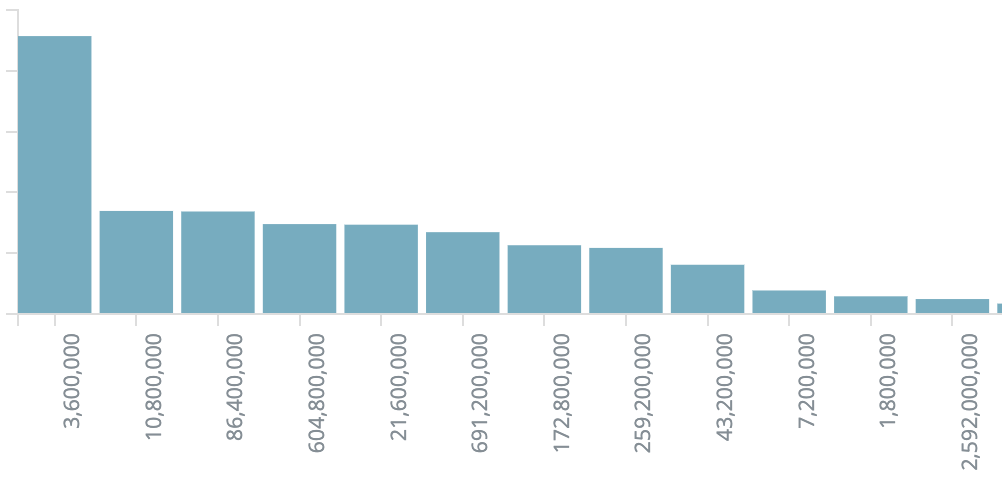

The time ranges users search for most often are, in order:

- 1 hour

- 3 hours

- 24 hours

- 7 days
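One rough way to reason about a partition size is to compare it against these query ranges. The sketch below counts how many partitions (rows per series) a query overlaps and what share of the covered partition span the query actually needs. It is a simplified model, not a measurement: the candidate periods of 1 day and 7 days are assumptions chosen for illustration, timestamps are assumed to be epoch milliseconds, and `rowsTouched` is a hypothetical helper.

```java
import java.time.Duration;
import java.util.List;

public class PartitionSizing {
    // Number of partitions overlapping the half-open range [startMillis, endMillis).
    public static long rowsTouched(long startMillis, long endMillis, long periodMillis) {
        long first = Math.floorDiv(startMillis, periodMillis);
        long last = Math.floorDiv(endMillis - 1, periodMillis);
        return last - first + 1;
    }

    public static void main(String[] args) {
        // The most common query ranges listed above.
        List<Duration> ranges = List.of(
                Duration.ofHours(1), Duration.ofHours(3),
                Duration.ofHours(24), Duration.ofDays(7));

        // Candidate periods (assumed for illustration) plus the current hardcoded value.
        List<Long> periods = List.of(
                Duration.ofDays(1).toMillis(),
                Duration.ofDays(7).toMillis(),
                0x100_000_000L);

        long now = System.currentTimeMillis();
        for (long period : periods) {
            for (Duration range : ranges) {
                long rows = rowsTouched(now - range.toMillis(), now, period);
                // Share of the touched partitions' time span that the query needs.
                double used = 100.0 * range.toMillis() / (rows * (double) period);
                System.out.printf("period=%-12d range=%-8s rows=%d used=%.2f%%%n",
                        period, range, rows, used);
            }
        }
    }
}
```

A smaller period raises the number of rows a long query touches per series but keeps each row closer to the lifetime of a short-lived container, so the right value depends on which of the two dominates for a given cluster.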