Log evidence values of EP fit are zero

If I run a simple EP fit:

https://github.com/Jammy2211/autofit_workspace_test/blob/main/ep_examples/toy/model/ep.py

The Log Evidence values printed throughout the fit are all reported as 0.0:

2022-06-02 16:49:11,208 - dataset_9 - INFO - Log Evidence = 0.0

2022-06-02 16:49:11,208 - dataset_9 - INFO - KL Divergence = 194184846802789.72

2022-06-02 16:49:12,018 - PriorFactor2 - INFO - Log Evidence = 0.0

2022-06-02 16:49:12,019 - PriorFactor2 - INFO - KL Divergence = 194184846802789.72

2022-06-02 16:49:12,221 - PriorFactor3 - INFO - Log Evidence = 0.0

2022-06-02 16:49:12,221 - PriorFactor3 - INFO - KL Divergence = 194184846802777.9

2022-06-02 16:49:13,288 - PriorFactor30 - INFO - Log Evidence = 0.0

2022-06-02 16:49:13,289 - PriorFactor30 - INFO - KL Divergence = 194184846802775.25

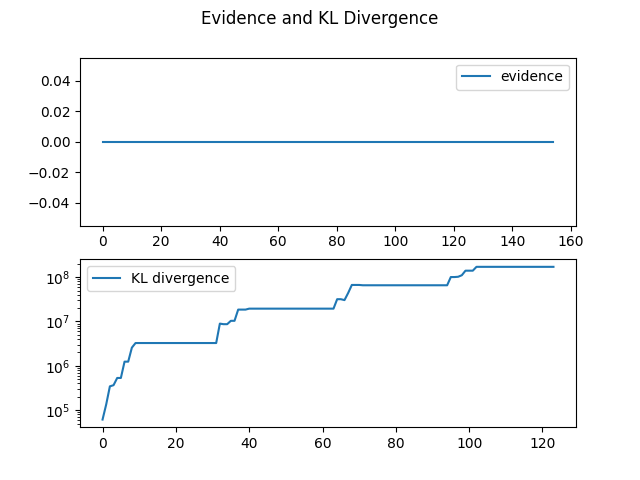

The graph.png is also reflecting this behaviour:

Shouldn't the KL Divergence also be decreasing? It stays at roughly 1.94e14 throughout the fit.
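For reference, the KL divergence is zero only when the two distributions being compared coincide, and it grows as they move apart. A minimal sketch of the closed form for two univariate Gaussians, assuming Gaussian messages. This is standalone Python written for illustration, not PyAutoFit's implementation, and `gaussian_kl` is a hypothetical name:

```python
import math


def gaussian_kl(mu_p, sigma_p, mu_q, sigma_q):
    """KL(p || q) for p = N(mu_p, sigma_p^2) and q = N(mu_q, sigma_q^2).

    Hypothetical helper for illustration only.
    """
    return (
        math.log(sigma_q / sigma_p)
        + (sigma_p**2 + (mu_p - mu_q) ** 2) / (2 * sigma_q**2)
        - 0.5
    )


# Identical distributions: the divergence is zero.
print(gaussian_kl(0.0, 1.0, 0.0, 1.0))
# Separating the means by 3 standard deviations increases it.
print(gaussian_kl(0.0, 1.0, 3.0, 1.0))
```

If each EP iteration moves the approximation closer to its target, a quantity like this would be expected to shrink towards zero rather than stay near 1.9e14.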

@matthewghgriffiths

I've added a test on a feature branch illustrating that `m.AbstractMessage.log_normalisation` consistently returns 0.0.

Actually, I suspect that's because only one message is passed to the function, and that message is used as `self`. It seems odd behaviour that, when there is only one message, its likelihood is 0?
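The single-message result may be expected mathematically: if `log_normalisation` computes the log of the integral of the product of the messages, then for one normalised message the integral is 1 and its log is 0. A minimal sketch, assuming 1D Gaussian messages given as hypothetical `(mean, sigma)` pairs. `gaussian_product_log_norm` is a hypothetical helper, not PyAutoFit's implementation:

```python
import math


def gaussian_product_log_norm(*messages):
    """Log of the integral over x of prod_i N(x; mean_i, sigma_i^2).

    Each message is a hypothetical (mean, sigma) pair of a normalised 1D Gaussian.
    """
    precisions = [1.0 / sigma**2 for _, sigma in messages]
    total_precision = sum(precisions)
    # Precision-weighted sum of the means (the natural parameter of the product).
    eta = sum(p * mu for p, (mu, _) in zip(precisions, messages))
    n = len(messages)
    return (
        -0.5 * (n - 1) * math.log(2 * math.pi)
        + 0.5 * sum(math.log(p) for p in precisions)
        - 0.5 * math.log(total_precision)
        - 0.5 * sum(p * mu**2 for p, (mu, _) in zip(precisions, messages))
        + 0.5 * eta**2 / total_precision
    )


# One normalised message integrates to 1, so the log normalisation is ~0.
print(gaussian_product_log_norm((1.5, 2.0)))

# Two messages: matches the known result log N(mu_1; mu_2, sigma_1^2 + sigma_2^2).
a, b = (0.0, 1.0), (1.0, 2.0)
var = a[1] ** 2 + b[1] ** 2
print(gaussian_product_log_norm(a, b))
print(-0.5 * math.log(2 * math.pi * var) - (a[0] - b[0]) ** 2 / (2 * var))
```

Under this assumption, a non-zero value only appears once two or more messages are multiplied together, which would be consistent with the test returning 0.0 when a single message is passed in.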