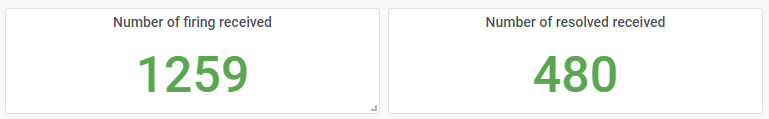

The numbers of firing and resolved alerts are very different

What did you do? I uploaded an Alertmanager dashboard to Grafana.

What did you expect to see? I wanted to see the number of firing alerts received and the number of resolved alerts received.

- Number of firing received

  `sum(alertmanager_alerts_received_total{status="firing"})`

- Number of resolved received

  `sum(alertmanager_alerts_received_total{status="resolved"})`
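Both totals can also be read in a single request. The following is a minimal sketch, assuming Prometheus's HTTP API is reachable at `PROMETHEUS_URL` (a hypothetical in-cluster address, adjust it for your setup). It runs `sum by (status) (alertmanager_alerts_received_total)` as an instant query against `/api/v1/query`, which returns the same two numbers as the queries above, one per `status` label value. `fetch_totals` and `parse_vector` are illustrative helper names, not part of any library.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical address: assumes a Prometheus service in the "monitoring" namespace.
PROMETHEUS_URL = "http://prometheus.monitoring.svc:9090"

# One query that yields both totals, split by the `status` label.
QUERY = "sum by (status) (alertmanager_alerts_received_total)"


def parse_vector(response: dict) -> dict:
    """Turn an instant-query vector response into {status: value}."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response}")
    totals = {}
    for sample in response["data"]["result"]:
        status = sample["metric"].get("status", "")
        # Each sample value is a [unix_timestamp, "value as string"] pair.
        totals[status] = float(sample["value"][1])
    return totals


def fetch_totals(base_url: str = PROMETHEUS_URL, query: str = QUERY) -> dict:
    """Run an instant query through the Prometheus HTTP API and parse it."""
    url = f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": query})
    with urllib.request.urlopen(url, timeout=10) as resp:
        return parse_vector(json.load(resp))
```

Calling `fetch_totals()` from a machine that can reach Prometheus returns a dict such as `{"firing": ..., "resolved": ...}`.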

What did you see instead? Under which circumstances?

The numbers of firing and resolved alerts are very different. What is the reason?


Environment

- System information: Kubernetes v2.1.0
- Alertmanager version: 0.19.0
- Prometheus version: 2.30.1


- Alertmanager configuration file:

```yaml
config.yml: |-
  global:
  templates:
  - '/etc/alertmanager-templates/*.tmpl'
  route:
    group_by:
    - namespace
    group_wait: 10s
    receiver: slack_demo
    repeat_interval: 10s
    routes:
    - match:
        severity: fatal
      receiver: slack_demo
    - match:
        severity: critical
      receiver: slack_demo
    - match:
        severity: warning
      receiver: slack_demo
    - match:
        severity: info
      receiver: slack_demo
  receivers:
  - name: slack_demo
    slack_configs:
    - api_url: SLACK-WEEBHOOK-URL
      send_resolved: true
      channel: '#alertmanager'
      title: '[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] Monitoring Event Notification'
      text: >-
        {{ range .Alerts -}}
          *Alert:* {{ .Annotations.title }}{{ if .Labels.severity }} - `{{ .Labels.severity }}`{{ end }}
          *Description:* {{ .Annotations.description }}
          *Graph:* <{{ .GeneratorURL }}|:chart_with_upwards_trend:>
          *Details:*
          {{ range .Labels.SortedPairs }} • *{{ .Name }}:* `{{ .Value }}`
          {{ end }}
        {{ end }}
```

- Prometheus configuration file:

```yaml
prometheus.rules: |-
  groups:
  - name: container memory alert
    rules:
    - alert: container memory usage rate is very high( > 80%)
      expr: sum(container_memory_working_set_bytes{pod!="", name=""})/ sum (kube_node_status_allocatable_memory_bytes) * 100 > 80
      for: 1m
      labels:
        severity: fatal
      annotations:
        summary: High Memory Usage on
        identifier: ""
        description: " Memory Usage: "
  - name: container CPU alert
    rules:
    - alert: container CPU usage rate is very high( > 60%)
      expr: sum (rate (container_cpu_usage_seconds_total{pod!=""}[1m])) / sum (machine_cpu_cores) * 100 > 60
      for: 1m
      labels:
        severity: fatal
      annotations:
        summary: High Cpu Usage
  # ...

prometheus.yml: |-
  global:
    scrape_interval: 5s
    evaluation_interval: 5s
  rule_files:
    - /etc/prometheus/prometheus.rules
  alerting:
    alertmanagers:
    - scheme: http
      static_configs:
      - targets:
        - "alertmanager.monitoring.svc:9093"
```

Well, Alertmanager is designed to repeat its notifications from time to time. The setting

`repeat_interval: 10s`

means that you'll be notified every 10 seconds about every alert that has not been resolved yet.

So if your alert stayed in the firing state for a minute before it was resolved, by then you should have received 6 firing notifications and just a single resolved one.
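The arithmetic in the answer can be written out as a small model. This Python sketch is a simplification that follows the answer's reasoning only: it assumes the first notification goes out as soon as an alert fires, another one every `repeat_interval` seconds while it keeps firing, and exactly one resolved notification when it clears (`send_resolved: true`). It ignores `group_wait` and `group_interval`, which also affect notification timing in a real Alertmanager. `count_notifications` is a hypothetical helper, not an Alertmanager API.

```python
from typing import Iterable, Tuple


def count_notifications(firing_durations: Iterable[int], repeat_interval: int) -> Tuple[int, int]:
    """Simplified model: (firing, resolved) notification counts for a set of alerts.

    firing_durations: how long each alert stayed firing, in seconds.
    repeat_interval: seconds between repeated firing notifications (must be > 0).
    """
    firing = 0
    resolved = 0
    for duration in firing_durations:
        # Notifications at t = 0, repeat_interval, 2*repeat_interval, ... while t < duration.
        firing += len(range(0, duration, repeat_interval))
        # One resolved notification when the alert clears.
        resolved += 1
    return firing, resolved
```

For the one-minute example above, `count_notifications([60], 10)` returns `(6, 1)`: six firing notifications (at 0, 10, 20, 30, 40 and 50 seconds) against a single resolved one, and the gap grows with every alert that stays firing longer.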