Performance issues

Hey! Thanks for working on Piccolo - it's been great using it so far. I did run into a performance issue though. This is what my tables roughly look like:

class Table1(Table):

some_column = Varchar(length=6, primary_key=True)

...

class Table2(Table):

some_column = ForeignKey(references=Table1)

other_column = Integer()

...

Whenever I execute a raw query like this, the performance is worse than on Tortoise, for example:

# The same query takes about 0.06 sec. on Piccolo and 0.003 sec. on Tortoise.

await Table2.raw('SELECT table2.* FROM table2 INNER JOIN table1 ON table2.some_column = table1.some_column WHERE other_column = 123')

I do expect the tables to have tens of millions of entries so I am slightly concerned. Shoud I even worry about it? Thanks.

@ynnirc The performance should be about the same. They both use the same database adapter under the hood (asyncpg), and the raw queries are quite a thin layer on top.

Having said that, there could be a performance bottleneck we're unaware of.

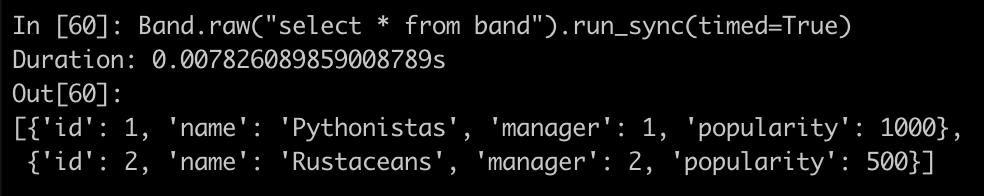

I ran the Piccolo playground targeting Postgres (instructions here). Running this on my MacBook:

>>> Band.raw("select * from band").run_sync(timed=True)

I averaged around 0.008 seconds per query.

The test database didn't have much data in it though, so I need to check it with more data.

As the project has grown, the focus has been on feature development and bug fixing, so I haven't run a profiler on it for a while. I'll see what I can do.

Band.raw("select * from band").run_sync(timed=True)I averaged around 0.008 seconds per query.

I am getting about 0.05 seconds per query on my Windows 10 machine with the same playground database and query. Could this perhaps be a platform-specific issue?

@ynnirc It's a possibility. I might be able to test it inside a Windows 10 VM.

@dantownsend I think having a Github action for benchmarking in different platforms would be a good idea.

@AliSayyah It's a good idea. It seems like Windows VMs are available. They use up twice the number of credits as Linux VMs, but shouldn't be an issue for simple performance testing.

@dantownsend Do you want me to give it a go?

@AliSayyah If you don't mind that would be great.

I've been looking at options for profiling. In the past I used cprofile, but came across these other options too:

- https://github.com/gaogaotiantian/viztracer

- https://github.com/joerick/pyinstrument

They all basically do the same job though.

@dantownsend Thank you. I'll check them out.

@ynnirc We've done a bunch of research into this.

We haven't identified whether there's a problem on Windows yet.

However, we've run a profiler on Piccolo. We've made a few optimisations, which have been released to PyPI. I think the changes are most noticeable if you're pulling lots of rows from the database.

The thing which will make the biggest difference to performance is making sure a connection pool is running.

Creating the connections is really expensive, so being able to reuse them has a big impact on performance. For example, I was able to pull 1000 rows in < 2 ms when a connection pool was running, vs 8 ms without it.