alba purge-osd : decommissioned

Hello,

I'm pretty sure the following device is not in use and missing from alba plugin GUI.

In [31]: [ c for c in claimed_osds if c['ips'][0]==u'192.168.20.33' and c['id']==0 ]

Out[31]:

[{u'alba_id': u'9d7bdd52-b704-465b-9253-7156e1bf088d',

u'albamgr_cfg': None,

u'checksum_errors': 0,

u'claimed_since': 1543828700.586121,

u'decommissioned': True,

u'endpoints': None,

u'errors': [[1563349242.58375,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349239.129,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349234.427287,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349185.722885,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349179.758825,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349176.255778,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349130.081002,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349113.89867,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349113.796267,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")'],

[1563349110.6479,

u'Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")']],

u'id': 0,

u'ips': [u'192.168.20.33'],

u'kind': u'AsdV1',

u'long_id': u'wKpwkiXci7jHuDfAfFwnLCploMijii1e',

u'node_id': u'MtRUTNalzCedpLjg',

u'port': 8601,

u'prefix': None,

u'preset': None,

u'read': [1563102254.102759,

1563102253.740176,

1563102253.592432,

1563102230.184313,

1563102225.22527,

1563102225.157166,

1563102200.238294,

1563102200.157486,

1563102175.467823,

1563102169.76271],

u'seen': [1543828696.673785],

u'total': 1199613935616,

u'use_rdma': False,

u'use_tls': False,

u'used': 516273112914,

u'write': [1563102284.511966,

1563102284.003097,

1563102283.920963,

1563102283.790112,

1563102283.721009,

1563102283.24091,

1563102283.184723,

1563102283.171877,

1563102283.153193,

1563102282.971391]}]

So I purged it

[root@node1 ~]# alba purge-osd --long-id wKpwkiXci7jHuDfAfFwnLCploMijii1e --config arakoon://config/ovs/arakoon/sas-back-abm/config?ini=%2Fopt%OpenvStorage%2Fconfig%2Farakoon_cacc.ini

2019-07-17 15:27:06 513210 +0800 - node1 - 22276/0000 - alba/cli - 0 - info - Albamgr_client.make_client :sas-back-abm

2019-07-17 15:27:06 515326 +0800 - node1 - 22276/0000 - alba/cli - 1 - info - Albamgr_client.make_client :sas-back-abm

2019-07-17 15:27:06 516047 +0800 - node1 - 22276/0000 - alba/cli - 2 - info - Connecting to ADDR_INET(192.168.0.34,26408)

2019-07-17 15:27:06 516176 +0800 - node1 - 22276/0000 - alba/cli - 3 - info - Connecting to ADDR_INET(192.168.0.34,26408)

2019-07-17 15:27:06 516228 +0800 - node1 - 22276/0000 - alba/cli - 4 - info - connect_with 192.168.0.34 26408 None Net_fd.TCP (fd:8) succeeded

2019-07-17 15:27:06 516339 +0800 - node1 - 22276/0000 - alba/cli - 5 - info - connect_with 192.168.0.34 26408 None Net_fd.TCP (fd:3) succeeded

But this device is still in list-osds.

Best regards, Yongsheng

There was this error all the time before I removed and reclaimed a hdd.

Jul 17 11:36:28 node1 alba: 2019-07-17 11:36:28 238863 +0800 - node1 - 34177/0000 - alba/maintenance - 4018 - info - connect_with failed: 192.168.20.33 8601 None Net_fd.TCP (fd:44): (Unix.Unix_error "Connection refused" connect "")

Now it's gone. I 'm not sure which operation took effect. I guess it's ok to see that device is still in list as there's decommissioned field. Thanks!

These logs still keep on showing up:

Jul 18 10:18:49 node1 alba[3606]: 2019-07-18 10:18:49 684183 +0800 - node1 - 3606/0000 - alba/proxy - 87431 - info - wKpwkiXci7jHuDfAfFwnLCploMijii1e "Asd_client.BadLongId(\"wKpwkiXci7jHuDfAfFwnLCploMijii1e\", \"J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8\")" was unforeseen

Jul 18 10:18:49 node1 alba[3606]: 2019-07-18 10:18:49 684206 +0800 - node1 - 3606/0000 - alba/proxy - 87432 - info - wKpwkiXci7jHuDfAfFwnLCploMijii1e "Asd_client.BadLongId(\"wKpwkiXci7jHuDfAfFwnLCploMijii1e\", \"J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8\")": should_invalidate:true should_retry:false

Jul 18 10:18:49 node1 alba[3606]: 2019-07-18 10:18:49 684624 +0800 - node1 - 3606/0000 - alba/proxy - 87433 - info - Could not yet requalify osd 0, trying again in 60.000000 seconds: Asd_client.BadLongId("wKpwkiXci7jHuDfAfFwnLCploMijii1e", "J98Ei4HdWR6L7oaJqq9TfjuqLrrZL7p8")

Please let me abuse this ticket a bit . There are the following errors all the time. I'm wondering if it's related to the FAILURE state of a router node on GUI.

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 458386 +0800 - node1 - 3706/0x00007fc49f7fe700 - volumedriverfs/MetaDataServerRocksTable - 000000000099a6b0 - error - multiget: quoth rocksdb: IO error: /mnt/ssd1/sas-pool_db_mds_2/db/351926.sst: No such file or directory

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 458440 +0800 - node1 - 3706/0x00007fc49f7fe700 - volumedriverfs/MDSMetaDataBackend - 000000000099a6b1 - error - MDSMetaDataBackend: d234c655-14f4-4d19-8120-f3093ffb23f0: failed to create MDSMetaDataBackend: IO error: /mnt/ssd1/sas-pool_db_mds_2/db/351926.sst: No such file or directory multiget

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 458472 +0800 - node1 - 3706/0x00007fc49f7fe700 - volumedriverfs/PeriodicActionPoolTaskImpl - 000000000099a6b2 - error - run: mds-poll-namespace-d234c655-14f4-4d19-8120-f3093ffb23f0: caught exception: IO error: /mnt/ssd1/sas-pool_db_mds_2/db/351926.sst: No such file or directory multiget - ignored

Jul 17 17:25:29 node1 alba: 2019-07-17 17:25:29 512078 +0800 - node1 - 34177/0000 - alba/maintenance - 53129 - info - reading from fd 250: 3497237B in 110 steps (took:0.025182)

Jul 17 17:25:29 node1 arakoon: 2019-07-17 17:25:29 523320 +0800 - node1 - 3482/0000 - arakoon - 1034177 - info - 192.168.0.33:client_service:session=0 connection=192.168.0.33:client_service_342642 socket_address=ADDR_INET 192.168.0.35,50358

Jul 17 17:25:29 node1 arakoon: 2019-07-17 17:25:29 523609 +0800 - node1 - 3482/0000 - arakoon - 1034178 - info - exiting session (0) connection=192.168.0.33:client_service_342642: "End_of_file"

Jul 17 17:25:29 node1 arakoon: 2019-07-17 17:25:29 523629 +0800 - node1 - 3482/0000 - arakoon - 1034179 - info - 192.168.0.33:client_service_342642: closing

Jul 17 17:25:29 node1 alba: 2019-07-17 17:25:29 671678 +0800 - node1 - 3606/0000 - alba/proxy - 49165 - error - Unexpected exception in proxy while handling request: Client_helper.MasterLookupResult.Error(0)

Jul 17 17:25:29 node1 alba: 2019-07-17 17:25:29 671794 +0800 - node1 - 3606/0000 - alba/proxy - 49166 - error - Request ReadObjectFs ("f59748b4-a90c-4040-870b-5500415651d5","snapshots.xml",_,_,_) errored and took 2.002753

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 671893 +0800 - node1 - 3706/0x00007fc49effd700 - volumedriverfs/AlbaConnection - 000000000099a6b3 - error - convert_exceptions_: read object: caught Alba proxy exception: Client_helper.MasterLookupResult.Error(0)

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 671997 +0800 - node1 - 3706/0x00007fc49effd700 - volumedriverfs/BackendConnectionInterfaceLogger - 000000000099a6b4 - error - ~Logger: Exiting read for f59748b4-a90c-4040-870b-5500415651d5 snapshots.xml with exception

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 672014 +0800 - node1 - 3706/0x00007fc49effd700 - volumedriverfs/BackendConnectionInterfaceLogger - 000000000099a6b5 - error - ~Logger: Exiting read for f59748b4-a90c-4040-870b-5500415651d5

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 672075 +0800 - node1 - 3706/0x00007fc49effd700 - volumedriverfs/BackendInterface - 000000000099a6b6 - error - do_wrap_: f59748b4-a90c-4040-870b-5500415651d5: problem with connection: Client_helper.MasterLookupResult.Error(0)

Jul 17 17:25:29 node1 volumedriver_fs.sh: 2019-07-17 17:25:29 672093 +0800 - node1 - 3706/0x00007fc49effd700 - volumedriverfs/BackendInterface - 000000000099a6b7 - warning - do_wrap_: f59748b4-a90c-4040-870b-5500415651d5: retrying with new connection (retry: 1, sleep before retry: 1000 milliseconds)

Jul 17 17:25:29 node1 alba: 2019-07-17 17:25:29 672149 +0800 - node1 - 3606/0000 - alba/proxy - 49167 - info - server: (fd:79) closed

Jul 17 17:25:29 node1 alba: 2019-07-17 17:25:29 730687 +0800 - node1 - 34177/0000 - alba/maintenance - 53130 - info - reading from fd 242: 612206B in 21 steps (took:0.015180)

Jul 17 17:25:29 node1 alba: 2019-07-17 17:25:29 977607 +0800 - node1 - 22278/0000 - alba/asd - 99234 - info - Request Apply took 1.272000

Jul 17 17:25:30 node1 alba: 2019-07-17 17:25:30 011844 +0800 - node1 - 22278/0000 - alba/asd - 99235 - info - Request MultiGet2 took 0.524695

Jul 17 17:25:30 node1 alba: 2019-07-17 17:25:30 091683 +0800 - node1 - 3606/0000 - alba/proxy - 49168 - error - Unexpected exception in proxy while handling request: Client_helper.MasterLookupResult.Error(0)

Jul 17 17:25:30 node1 alba: 2019-07-17 17:25:30 091765 +0800 - node1 - 3606/0000 - alba/proxy - 49169 - error - Request ReadObjectFs ("ea9722c0-b295-42cf-992f-3071dafb1ec4","snapshots.xml",_,_,_) errored and took 2.002930

Jul 17 17:25:30 node1 volumedriver_fs.sh: 2019-07-17 17:25:30 091850 +0800 - node1 - 3706/0x00007fc49ffff700 - volumedriverfs/AlbaConnection - 000000000099a6b8 - error - convert_exceptions_: read object: caught Alba proxy exception: Client_helper.MasterLookupResult.Error(0)

Jul 17 17:25:30 node1 volumedriver_fs.sh: 2019-07-17 17:25:30 091978 +0800 - node1 - 3706/0x00007fc49ffff700 - volumedriverfs/BackendConnectionInterfaceLogger - 000000000099a6b9 - error - ~Logger: Exiting read for ea9722c0-b295-42cf-992f-3071dafb1ec4 snapshots.xml with exception

Jul 17 17:25:30 node1 volumedriver_fs.sh: 2019-07-17 17:25:30 092003 +0800 - node1 - 3706/0x00007fc49ffff700 - volumedriverfs/BackendConnectionInterfaceLogger - 000000000099a6ba - error - ~Logger: Exiting read for ea9722c0-b295-42cf-992f-3071dafb1ec4

Jul 17 17:25:30 node1 volumedriver_fs.sh: 2019-07-17 17:25:30 092059 +0800 - node1 - 3706/0x00007fc49ffff700 - volumedriverfs/BackendInterface - 000000000099a6bb - error - do_wrap_: ea9722c0-b295-42cf-992f-3071dafb1ec4: problem with connection: Client_helper.MasterLookupResult.Error(0)

Jul 17 17:25:30 node1 volumedriver_fs.sh: 2019-07-17 17:25:30 092085 +0800 - node1 - 3706/0x00007fc49ffff700 - volumedriverfs/BackendInterface - 000000000099a6bc - warning - do_wrap_: ea9722c0-b295-42cf-992f-3071dafb1ec4: retrying with new connection (retry: 1, sleep before retry: 1000 milliseconds

Is there any clue how to locate the reason why the storage router shows failure state although it is still working well? Please find the attached complete sys log for 2 hours in this post. Thanks!

Best regards, Yongsheng

I find this task ovs.storagerouter.ping never shows up on node1 since last reboot a week ago. The other two nodes (master) still have this task logged every minute.

I also find this error once a minute in [email protected]

closing AMQP connection <0.1095.106> (192.168.0.33:53762 -> 192.168.0.33:5672):

connection_closed_abruptly

=WARNING REPORT==== 22-Jul-2019::16:25:05 ===

closing AMQP connection <0.1170.106> (192.168.0.35:42262 -> 192.168.0.33:5672):

connection_closed_abruptly

=WARNING REPORT==== 22-Jul-2019::16:25:07 ===

closing AMQP connection <0.1260.106> (192.168.0.34:53716 -> 192.168.0.33:5672):

connection_closed_abruptly

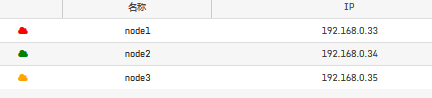

All 3 nodes including itself can't make connection to 192.168.0.33.

This issue may have caused FAILURE state of storage router node1.

Checked celery:

celery inspect ping -b amqp://ovs:[email protected]// --timeout=5

-> celery@node1: OK

pong

-> celery@node3: OK

pong

-> celery@node2: OK

pong

This ticket is quite messy and losing consistency with its title, so I'm opening a new ticket for the issue of storage router's failure state. Then I will delete those posts in this ticket.