Filter DataFrame with rules for e.g. crawler bots

Problem

Especially in anonymous mode, crawler bots such as Algolia and Google create many sessions and users that are irrelevant to analysis but skew the numbers. Other irrelevant sources, such as CI environments and internal IP addresses, skew them as well. Filtering out this traffic is currently a manual job for every analysis.

Proposed solution

A helper function in ModelHub that lets users specify rules for filtering out data, e.g. based on certain user_agent patterns and IP addresses.
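
To make the idea concrete, here is a rough sketch of what such a helper could look like. It is written against a plain pandas DataFrame purely for illustration. The function name `filter_traffic`, its parameters, and the column names `user_agent` and `ip_address` are all assumptions, not existing ModelHub API, and the sample rows are made up.

```python
import ipaddress

import pandas as pd


def filter_traffic(df, user_agent_patterns=(), ip_networks=(),
                   user_agent_col="user_agent", ip_col="ip_address"):
    """Return a copy of df without rows that match any exclusion rule.

    Hypothetical helper: names and column layout are assumptions.
    """
    # Start with "exclude nothing" and OR in each rule's matches.
    exclude = pd.Series(False, index=df.index)

    if user_agent_patterns:
        # Combine all patterns into one case-insensitive regex.
        combined = "|".join(f"(?:{p})" for p in user_agent_patterns)
        exclude |= df[user_agent_col].str.contains(
            combined, case=False, regex=True, na=False
        )

    if ip_networks:
        networks = [ipaddress.ip_network(n) for n in ip_networks]

        def is_excluded(ip):
            try:
                addr = ipaddress.ip_address(ip)
            except ValueError:
                # Missing or malformed addresses are kept, not filtered.
                return False
            return any(addr in net for net in networks)

        exclude |= df[ip_col].map(is_excluded).astype(bool)

    return df[~exclude].copy()


# Illustrative usage with made-up rows.
df = pd.DataFrame({
    "user_agent": [
        "Mozilla/5.0 (X11; Linux x86_64)",
        "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
        "Algolia Crawler",
        None,
    ],
    "ip_address": ["8.8.8.8", "66.249.66.1", "1.2.3.4", "10.0.0.5"],
})
clean = filter_traffic(
    df,
    user_agent_patterns=["googlebot", "algolia"],
    ip_networks=["10.0.0.0/8"],  # e.g. an internal range
)
```

Expressing rules as plain lists of regex patterns and CIDR ranges keeps them easy to serialize, so the same definitions could in principle be reused elsewhere.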

Alternatives

Alternatively, these rules could be applied as an enrichment step on the backend to create a 'clean' table that every analysis can use. The rule definitions could potentially be kept compatible with an implementation on the ModelHub side as well.

Context

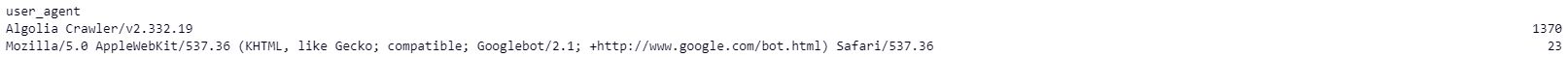

User agents in our own traffic for Algolia and Google Bot: