[WIP] nan-training-debug

Got to this function by tracing NaNs from the model outputs back up the stack. Before the call

`coord_stack = camera_utils.radial_and_tangential_undistort(coord_stack, distortion_params)`

`coord_stack` contains no NaNs; after it, it does. To debug inside this function I had to switch off TorchScript scripting so I could insert a breakpoint. That made the issue go away, and none of the downstream breakpoints were hit either. The reference implementation linked in the code comment does not seem to script this function. Not sure what about scripting causes it to break.
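The before/after comparison above can be automated by checking a call's inputs and outputs at the call site, which leaves the scripted function itself untouched. Below is a minimal, hypothetical sketch in plain Python operating on floats; `check_finite` and `scale` are illustrative names, not nerfstudio or PyTorch APIs. On tensors, the equivalent check would be `torch.isfinite(t).all()`.

```python
import functools
import math


def _floats(obj):
    """Yield every int/float found in obj, descending into lists and tuples."""
    if isinstance(obj, (list, tuple)):
        for item in obj:
            yield from _floats(item)
    elif isinstance(obj, (int, float)):
        yield float(obj)


def check_finite(name):
    """Hypothetical decorator: raise if finite inputs produce a NaN/inf output."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            # Only flag the call if it *introduced* the non-finite values.
            inputs_finite = all(
                math.isfinite(v) for v in _floats((args, list(kwargs.values())))
            )
            out = fn(*args, **kwargs)
            if inputs_finite and not all(math.isfinite(v) for v in _floats(out)):
                raise FloatingPointError(
                    f"{name}: finite inputs produced a non-finite output"
                )
            return out
        return wrapper
    return decorator


# Example: wrap the suspect function instead of setting a breakpoint inside it.
@check_finite("scale")
def scale(v, factor):
    return v * factor
```

The same wrapper can be applied to an existing callable, e.g. `check_finite("undistort")(fn)`. For NaNs that only show up in the backward pass, PyTorch's `torch.autograd.set_detect_anomaly(True)` reports the forward operation responsible.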

To reproduce the old error, uncomment the `torch.jit.script` line and you should hit the breakpoint.

If we are happy with this solution, I can remove the breakpoint and the comment.

Great find! It does fix the NaN issue on the redwood2 scene. The only downside is a non-negligible performance hit (training is ~10% slower for me). Let's investigate this a bit more before merging; it would be nice if we didn't need to remove the JIT. Maybe @kerrj has an idea, since he originally added the jitting.

A quick idea would be to change `eps` in that function to something larger (maybe 1e-4). It's possible that lines 373 and 374 are exploding when the denominator is too small. If that doesn't fix it, pinpointing exactly which line produces the NaNs would help, but that might be tricky inside a jitted function.
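The suspected failure mode can be sketched as below. This is a hypothetical scalar re-implementation in plain Python, not the actual `camera_utils.radial_and_tangential_undistort`: it assumes an OpenCV-style model with radial coefficients `k1, k2` and tangential coefficients `p1, p2`, inverted with Newton's method, and the names `distort`/`undistort` are made up for illustration. Each Newton step divides by the Jacobian determinant, and `eps` is the threshold below which the step is skipped.

```python
def distort(x, y, k1, k2, p1, p2):
    """Apply an assumed OpenCV-style radial (k1, k2) + tangential (p1, p2) model."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + 2.0 * p2 * x * y + p1 * (r2 + 2.0 * y * y)
    return xd, yd


def undistort(xd, yd, k1, k2, p1, p2, eps=1e-3, max_iterations=10):
    """Invert `distort` with Newton's method (hypothetical sketch), skipping
    any step whose Jacobian determinant has magnitude <= eps."""
    x, y = xd, yd  # start from the distorted coordinates
    for _ in range(max_iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2
        # d(radial)/dx = x * g and d(radial)/dy = y * g
        g = 2.0 * k1 + 4.0 * k2 * r2
        # Residuals: distort(x, y) - (xd, yd)
        fx = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x) - xd
        fy = y * radial + 2.0 * p2 * x * y + p1 * (r2 + 2.0 * y * y) - yd
        # Jacobian of the residuals with respect to (x, y)
        fx_x = radial + x * x * g + 2.0 * p1 * y + 6.0 * p2 * x
        fx_y = x * y * g + 2.0 * p1 * x + 2.0 * p2 * y
        fy_x = x * y * g + 2.0 * p2 * y + 2.0 * p1 * x
        fy_y = radial + y * y * g + 2.0 * p2 * x + 6.0 * p1 * y
        det = fx_x * fy_y - fx_y * fy_x
        # The step divides by det: a tiny det gives a huge step (inf/NaN on
        # tensors; on Python floats an exact 0 would raise ZeroDivisionError).
        if abs(det) > eps:
            x += (fx_y * fy - fy_y * fx) / det
            y += (fy_x * fx - fx_x * fy) / det
    return x, y
```

With `eps=1e-9` almost any determinant passes the check, so a nearly singular Jacobian can produce an enormous step; with `eps=1e-3` such points simply keep their previous estimate. The trade-off is a slightly less converged undistortion at those points.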

Tested to 30k iterations with eps=1e-3 and it seems to work

Yeah, this seems like a better solution. It works for me as well; I've reverted the JIT removal and updated the `eps` value.

Would be good to do a quality comparison to make sure it doesn't affect results

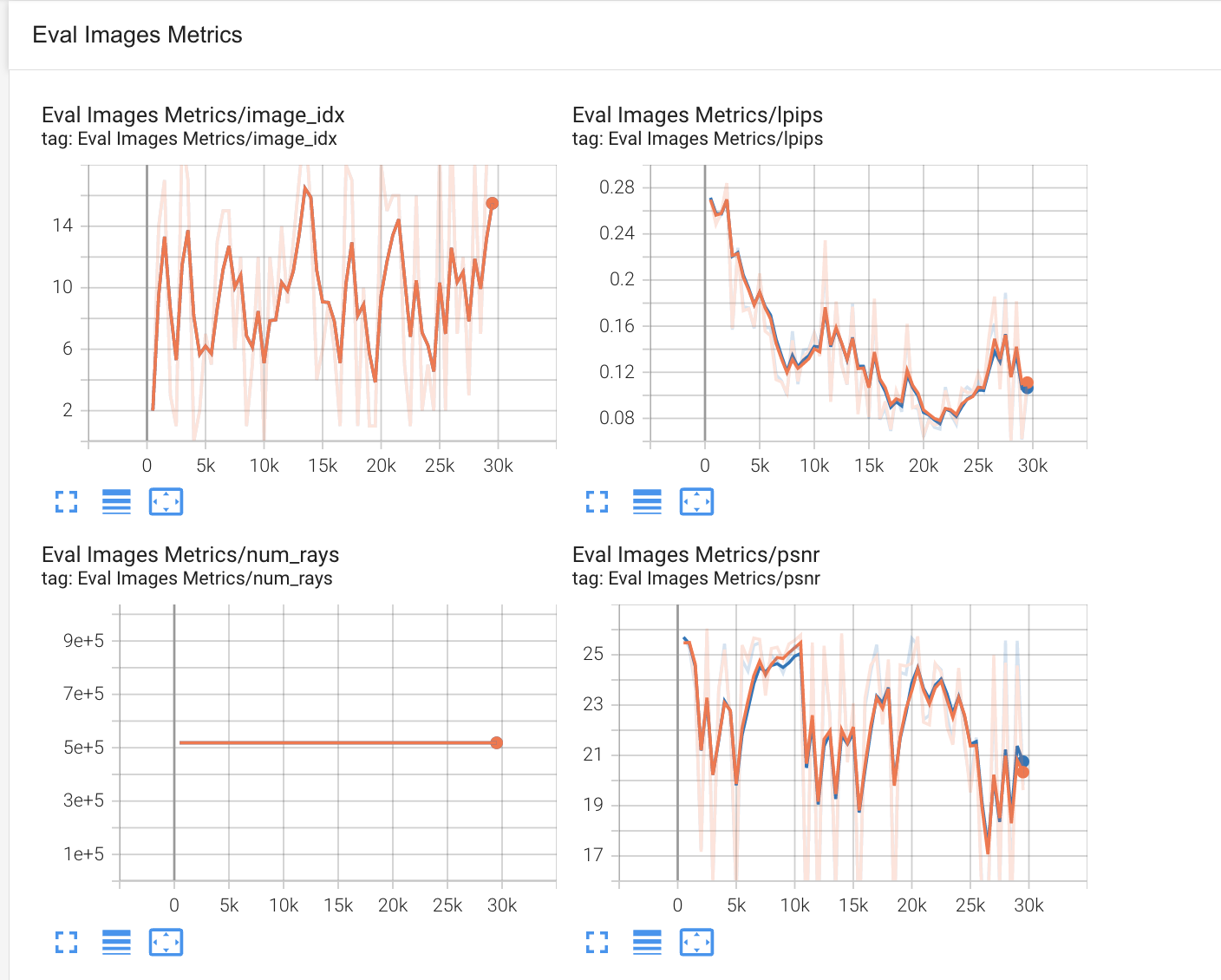

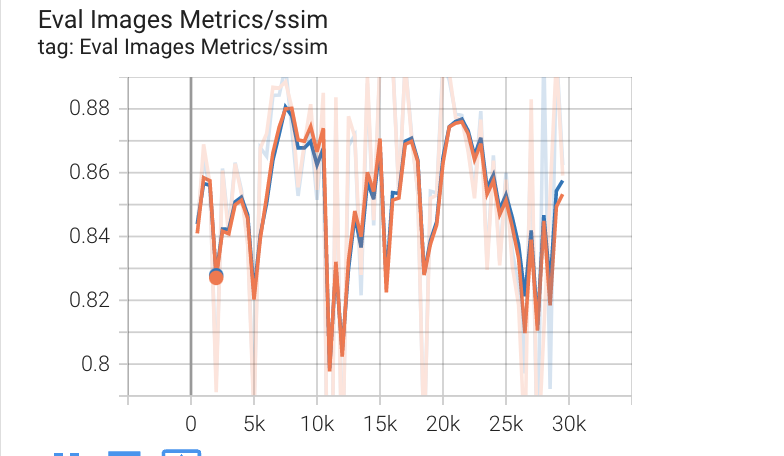

Above is what I get when testing on the poster dataset: orange is eps 1e-3 and blue is eps 1e-9. Seems fine to me.

Tested on a fisheye dataset. Seems to work fine.