Crop intersecting bounding boxes to improve precision

🚀 The feature

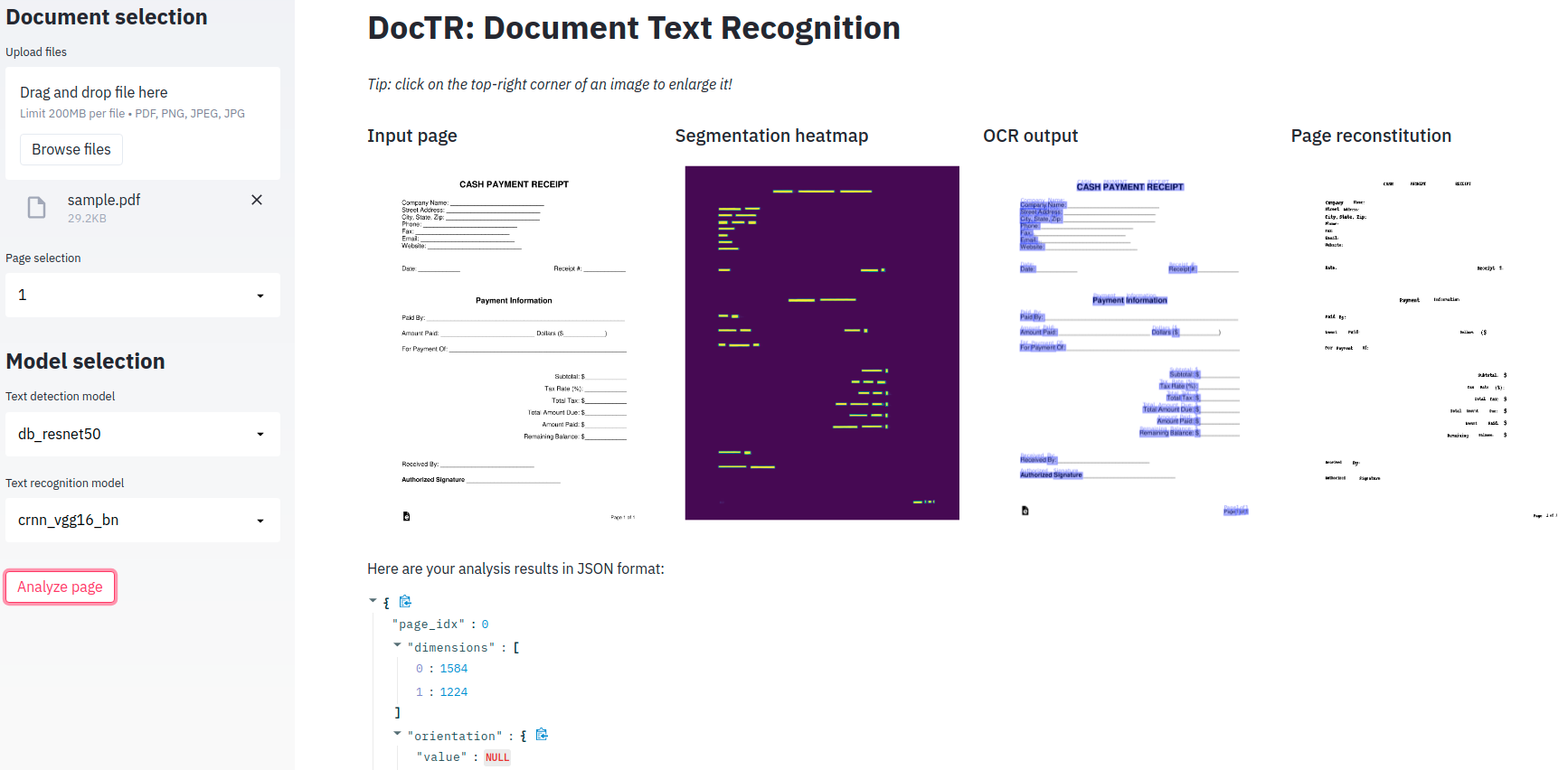

The "detector" inside the ocr_predictor often generates bounding boxes that intersect with each other horizontally, see examples :

When this happens the ocr results contains some characters that are doubled or some extra wrong characters that appear, for the examples above here is the text extracted :

Tip: click ont thet top-rightc tcorner rofanimaget toe enlargei it!

Document: selection

Here are youra analysis results inJ JSONf format:

I believe that adding a post-processing step after the detector that checks whether the next box horizontally intersects with the current box and crops it in that case would help correct the issue.

Motivation, pitch

I'm working on a project where we have to read the french social security numbers in a variety of documents. I found in my benchmarks that even if doctr is able to extract a lot more text from most documents, it is worse at reading the social security than tesseract because of this particular error. I believe that adding this post-processing step would largely improve results.

Alternatives

No response

Additional context

Code for reproducing the issue :

%matplotlib inline

import os

# Let's pick the desired backend

os.environ['USE_TORCH'] = '1'

from doctr.io import DocumentFile, Document

from doctr.models import ocr_predictor

predictor = ocr_predictor(pretrained=True, assume_straight_pages=True, preserve_aspect_ratio=False)

doc = DocumentFile.from_images(r"C:\Users\jcapellgracia\Downloads\demo_update.png")

result = predictor(doc)

print(result.pages[0].render())

result.show(doc)

Image used :

Hi @zas97 :wave: ,

thank you for bringing this to the table. This is already a known issue (#330). If you want to address the issue, please feel free to open a pull request. ping @charlesmindee at this point

Hi @zas97, This is indeed a good idea! This only reserve is that it could considerably slow down the end-to-end model, because we would have to compute intersections between nearest neighbors of each box or at least between successive boxes, and after that editing the overlapping boxes. Do you have an idea to implement it in a light way ? Anyway we could put it as an option in the predictor, to be used only in case of dense documents.

Good idea @zas97 :+1:

Let's explore this for v0.6.0. To move forward with this, there are a few things to discuss:

-

assuming we have all the intersection information: what do we want to do? let's take the example of two boxes on a given text "Hello world", we have a few cases if there is an intersection:

- boxes ("Hello", "oworld")

- boxes ("Hellow", "oworld")

- boxes ("Hellow", "world")

The problem now is that we don't have accurate spatial cues for each predicted characters. So the safest case is when we have both a text intersection & a spatial intersection, but what do we do then? Random directional (left or right) cropping? Without semantic, again since we don't know if there is more white space left or right of the letter "o" in the first example, we can't do much confidently :/

-

evaluating intersections: do we want a symmetrical 1-D figure (like IoU) to define this intersection? Or something more fine-grained?

What do you think?

Hello, I'm not sure if I can help you with the implementation but in my option the easier and best solution is to crop the box that has the higher "horizontal_length / nb_chars". The reason for that is because the box that has included a char from another box has also included the space which means that it will probably hava a bigger length/nb_chars ratio.

It won't correct the boxes ("Hellow", "oworld") case but it will at least solve correctly the rest

Hello, I'm not sure if I can help you with the implementation but in my option the easier and best solution is to crop the box that has the higher "horizontal_length / nb_chars". The reason for that is because the box that has included a char from another box has also included the space which means that it will probably hava a bigger length/nb_chars ratio.

Just to make sure we are talking about the same process, could you illustrate almost programmatically your suggestion please? :pray:

Also, the denominator "nb_chars", you're talking about the predicted number of characters right?

Yes nb_chars = the predicted number of characters

Lets say that we have the words "hello world" but the ocr recognize "hello oworld". You have two bounding boxes with same y and assuming that the width of the characters is always 1 the bounding boxes will be the following :

"hello": x1 = 0, x2 = 5 "oworld": x1 = 4, x2 = 11 (x1=4 because the bounding box starts between "hell" and "o" and includes the space between hello world)

For the first bb horizontal_length / nb_chars (5 - 0) / len("hello") = 1 For the first bb horizontal_length / nb_chars = (11 - 4) / len("oworld") = 1.16

That means that we should crop the second bounding box since it has the higher "horizontal_length / nb_chars".

This approach will work assuming that we are not in a case like ("Hellow", "oworld") and assuming that the x1 of the first word and the x2 of the second word are precise

Nice, however perhaps making the horizontal & character length of a word a priority, means that it will be a bit random in the case of :

- "thew world" (first word is shorter, I agree there will be more space, but the localization is a prediction so it's not perfect if the "world" crop has some room in it, while "thew" is cropped before the end of the "w")

From a visual perspective, the only trustable cue I can see is the space between the overlapping character ("w" in my example) is not the same right vs left. Arguably, if the prediction has an error, it means that the vertical histogram distribution density of "thew" is heterogeneous while it isn't for the "world". Down to the earth, that would be the case if:

- first bbox is very tight over "the w" and part of the "w" is cropped (density isn't uniform)

- second bbox is quite loose including potential spaces left and right of the world " world " (density is uniform in the center)

Perhaps we could combine both criteria? Now if we go down that road, we need a proxy for this vertical histogram:

- either actually computing the histogram

What do you think?

Yeah you're right that my proposition will be random in too many cases, using an histogram will probably be better.

@zas97 @frgfm I have to throw a very naive approach into this topic 😅 If I am right we talk about overlapping segmentation masks .. currently there are really low threshold values (0.1-0.3) which should lead to 'bigger' boxes .. so the range to overlap is really high what if we increase this values .. normally i think it will lead to less overlap in fact that the size of the masks will decrease wdyt ? Anything like pred_mask = (pred_mask > 0.7) (self.bin_tresh>=0.7)

@felixdittrich92 circling back to this, what "low threshold values" were you referring to? Do you mean the binarization threshold or the expansion value for the base text detection segmentation?

@frgfm the threshold value for prob_map where we decide 0 or 1 :sweat_smile: this should minimize distortion at the edges and thus reduce the overlap while converting to bbox (i have had a similar problem on semantic segmentation and this has solved my problem)

Binarization threshold then, we could increase the value but the manual tuning will never end either way :sweat_smile: This type of threshold should be a hyperparam tuned for each detection model :/

That will be mostly fixed with the next release. For custom detection result manipulation we added an way to interact with the results in the middle of the pipeline before cropping and passing the crops to the recognition model Docs: https://mindee.github.io/doctr/latest/using_doctr/using_models.html#advanced-options