[BUG] deepspeed_stage_3 was used in pytorch_lightning。when initialize, it cost huge cpu memory which increase with the grow of gpu num。

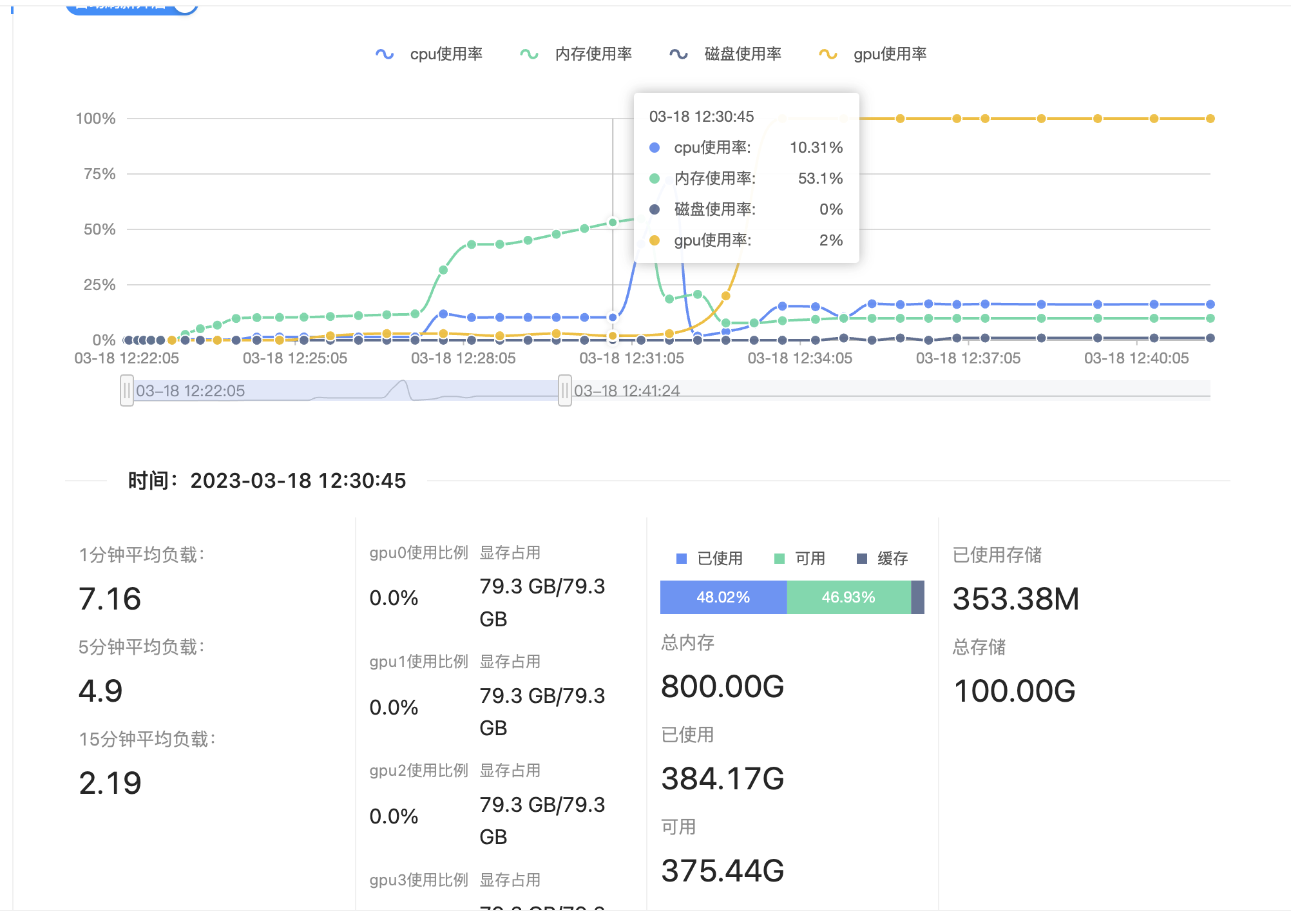

Describe the bug deepspeed_stage_3 was used in pytorch_lightning。when initialize, it cost huge cpu memory 。

the amount of cpu memory used = gpu_number * 2 * model_size

To Reproduce Steps to reproduce the behavior:

- Go to '...'

- Click on '....'

- Scroll down to '....'

- See error

Expected behavior A clear and concise description of what you expected to happen.

ds_report output

Please run ds_report to give us details about your setup.

Screenshots If applicable, add screenshots to help explain your problem.

System info (please complete the following information):

- OS: [e.g. Ubuntu 18.04]

- GPU count and types [e.g. two machines with x8 A100s each]

- Interconnects (if applicable) [e.g., two machines connected with 100 Gbps IB]

- Python version

- Any other relevant info about your setup

Launcher context

Are you launching your experiment with the deepspeed launcher, MPI, or something else?

Docker context Are you using a specific docker image that you can share?

Additional context Add any other context about the problem here.

the pytorch-lightning code was used : `trainer = Trainer( max_epochs=1, devices=args.num_devices, precision=16, strategy="deepspeed_stage_3", accelerator='gpu', num_nodes=args.num_nodes, limit_val_batches=0, # 添加plugins plugins=plugins, # 添加log和profile logger=lighting_logger, profiler=profiler, # 添加callback callbacks=callbacks, # 关闭官方进度条 enable_progress_bar=False )

logger.info("start train #########################")

trainer.fit(model, data_module)`

the amount of cpu memory used = gpu_number * 2 * model_size

Hi @linyubupa, could you describe more details about reproducing this issue? Especially how you measured cpu memory used and model_size

Hi @linyubupa, could you describe more details about reproducing this issue? Especially how you measured cpu memory used and model_size

""" #this is the code that i used: """ ` def main_worker(gpu, args): # prepare for ant environment huggingface.set_env(gpu) display_envs()

# batch related

train_batch_size = 2

gradient_accumulation_steps = 1

gradient_checkpointing = False

# train related

learning_rate = 1e-5

eval_batch_size = 2

eval_steps = 500

save_steps = 20000

num_train_epochs = 1

# log related

logging_steps = 10

disable_tqdm = False

log_level = 'passive' #'debug'

random.seed(42)

output_dir = "/mntnlp/yumu/bloom176/v1/"

# model_name_or_path = '/mntnlp/yumu/bloomz176/models--bigscience--bloomz-mt/snapshots/4f161c36a766d2328a63a26fe4970024a532e945'

model_name_or_path='/mntnlp/yumu/bloomz/models--bigscience--bloomz-7b1-mt/snapshots/13e9b1a39fe86c8024fe15667d063aa8a3e32460'

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, torch_dtype=torch.float16)

model = AutoModelForCausalLM.from_pretrained(model_name_or_path, torch_dtype=torch.float16)

tokenizer.pad_token = tokenizer.eos_token

model.resize_token_embeddings(len(tokenizer))

tokenizer.pad_token_id = tokenizer.eos_token_id

model.config.end_token_id = tokenizer.eos_token_id

model.config.pad_token_id = model.config.eos_token_id

model.config.use_cache = False

# Set up the datasets

user_config = UserConfig()

# table_name="apmktalgo_dev.targeting_graph_data_text_ly_1_glm_predict_100"

train_table = "XXXXXXX"

test_table = "XXXXXX"

model_name_or_path = '/mntnlp/yumu/bloomz/models--bigscience--bloomz-7b1-mt/snapshots/13e9b1a39fe86c8024fe15667d063aa8a3e32460'

max_seq_length = 100

aistudio_reader_num_processes = 2

fields = ['idx', 'text']

train_dataset = ODPSataset(train_table, fields, user_config, max_seq_length, model_name_or_path, aistudio_reader_num_processes)

dev_dataset = ODPSataset(test_table, fields, user_config, max_seq_length, model_name_or_path, aistudio_reader_num_processes)

# Set up the metric

rouge = evaluate.load("rouge")

def compute_metrics(eval_preds):

labels_ids = eval_preds.label_ids

pred_ids = eval_preds.predictions

pred_str = tokenizer.batch_decode(pred_ids, skip_special_tokens=True)

label_str = tokenizer.batch_decode(labels_ids, skip_special_tokens=True)

result = rouge.compute(predictions=pred_str, references=label_str)

return result

# Create a preprocessing function to extract out the proper logits from the model output

def preprocess_logits_for_metrics(logits, labels):

if isinstance(logits, tuple):

logits = logits[0]

return logits.argmax(dim=-1)

# Prepare the trainer and start training

training_args = TrainingArguments(

output_dir=output_dir,

evaluation_strategy="steps",

eval_accumulation_steps=1,

learning_rate=learning_rate,

per_device_train_batch_size=train_batch_size,

per_device_eval_batch_size=eval_batch_size,

gradient_checkpointing=gradient_checkpointing,

half_precision_backend='auto',

fp16=True,

adam_beta1=0.9,

adam_beta2=0.95,

gradient_accumulation_steps=gradient_accumulation_steps,

num_train_epochs=num_train_epochs,

warmup_steps=2,

eval_steps=eval_steps,

save_steps=save_steps,

load_best_model_at_end=True,

logging_steps=logging_steps,

log_level=log_level,

disable_tqdm=disable_tqdm,

save_total_limit=2,

prediction_loss_only=True,

deepspeed="./new_ds_config.json",

remove_unused_columns=False,

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=train_dataset,

eval_dataset=dev_dataset,

compute_metrics=compute_metrics,

data_collator=default_data_collator,

preprocess_logits_for_metrics=preprocess_logits_for_metrics,

)

trainer.add_callback(ClearCacheCallback(1000))

trainer.train()

#detail_evaluate(trainer)

#trainer.evaluate()

trainer.save_model(output_dir)

def main():

# import deepspeed

gpu_count = torch.cuda.device_count()

# main_worker(gpu_count,sys.argv)

mp.spawn(main_worker, args=(sys.argv,), nprocs=gpu_count, join=True)

`

i think the most cause of this result is

mp.spawn(main_worker, args=(sys.argv,), nprocs=gpu_count, join=True)

and this is the config of ds

{ "train_batch_size": "auto", "fp16": { "enabled": true, "min_loss_scale": 1, "opt_level": "auto" }, "zero_optimization": { "stage": 3, "allgather_partitions": true, "allgather_bucket_size": 5e8, "contiguous_gradients": true }, "optimizer": { "type": "AdamW", "params": { "lr": 1e-05, "betas": [ 0.9, 0.95 ], "eps": 1e-08 } }, "scheduler": { "type": "WarmupLR", "params": { "warmup_min_lr": 0, "warmup_max_lr": 1e-05, "warmup_num_steps": "auto" } } }

Hi @linyubupa, could you describe more details about reproducing this issue? Especially how you measured cpu memory used and model_size

I measured cpu memory by using aistudio tools which is proved correct

Hi @linyubupa, I am not able to run your code. Do you have a smaller test (but complete setup) for reproducing?

Your suspicion on mp.spawn makes sense. See this https://github.com/pytorch/pytorch/issues/38645.

Could you also try measureing cpu usage with deepspeed.runtime.utils.see_memory_usage?

Hi @linyubupa. Closed for now. Feel free to reopen if there is any update.