[BUG] Training with CPU offload raises an OOM error on WSL2

CPU and GPU memory should be more than enough for this job: I am running gpt-neo-125M on an NVIDIA RTX 3090 (24 GB) with 64 GB of CPU memory.

system

Windows 10 with an NVIDIA RTX 3090 (24 GB) and 64 GB of CPU memory.

docker image

REPOSITORY            TAG                   IMAGE ID       CREATED        SIZE
deepspeed/deepspeed   v072_torch112_cu117   b1d3268ea315   6 months ago   14.7GB

docker run --gpus all --name deepspeed -p 10022:22 --ipc=host --shm-size=16g --ulimit memlock=-1 --privileged -v E:\:/mnt/e -it b1d3268ea315 /bin/bash

free

        total   used    free   shared   buff/cache   available
Mem:    50Gi    792Mi   46Gi   1.0Mi    3.1Gi        48Gi
Swap:   13Gi    0B      13Gi

ds_report

--------------------------------------------------

DeepSpeed C++/CUDA extension op report

--------------------------------------------------

NOTE: Ops not installed will be just-in-time (JIT) compiled at

runtime if needed. Op compatibility means that your system

meet the required dependencies to JIT install the op.

--------------------------------------------------

JIT compiled ops requires ninja

ninja .................. [OKAY]

--------------------------------------------------

op name ................ installed .. compatible

--------------------------------------------------

async_io ............... [NO] ....... [OKAY]

cpu_adagrad ............ [NO] ....... [OKAY]

cpu_adam ............... [NO] ....... [OKAY]

fused_adam ............. [NO] ....... [OKAY]

fused_lamb ............. [NO] ....... [OKAY]

quantizer .............. [NO] ....... [OKAY]

random_ltd ............. [NO] ....... [OKAY]

[WARNING] please install triton==1.0.0 if you want to use sparse attention

sparse_attn ............ [NO] ....... [NO]

spatial_inference ...... [NO] ....... [OKAY]

transformer ............ [NO] ....... [OKAY]

stochastic_transformer . [NO] ....... [OKAY]

transformer_inference .. [NO] ....... [OKAY]

utils .................. [NO] ....... [OKAY]

--------------------------------------------------

DeepSpeed general environment info:

torch install path ............... ['/opt/conda/lib/python3.8/site-packages/torch']

torch version .................... 1.12.0a0+8a1a93a

deepspeed install path ........... ['/opt/conda/lib/python3.8/site-packages/deepspeed']

deepspeed info ................... 0.8.1, unknown, unknown

torch cuda version ............... 11.7

torch hip version ................ None

nvcc version ..................... 11.7

deepspeed wheel compiled w. ...... torch 1.12, cuda 11.7

python -m torch.utils.collect_env

Collecting environment information...

PyTorch version: 1.12.0a0+8a1a93a

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.23.1

Libc version: glibc-2.31

Python version: 3.8.13 | packaged by conda-forge | (default, Mar 25 2022, 06:04:10) [GCC 10.3.0] (64-bit runtime)

Python platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.10

Is CUDA available: True

CUDA runtime version: 11.7.64

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3090

Nvidia driver version: 516.94

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] pytorch-quantization==2.1.2

[pip3] torch==1.12.0a0+8a1a93a

[pip3] torch-tensorrt==1.1.0a0

[pip3] torchtext==0.13.0a0

[pip3] torchtyping==0.1.4

[pip3] torchvision==0.13.0a0

[conda] mkl 2019.5 281 conda-forge

[conda] mkl-include 2019.5 281 conda-forge

[conda] numpy 1.22.3 py38h99721a1_2 conda-forge

[conda] pytorch-quantization 2.1.2 pypi_0 pypi

[conda] torch 1.12.0a0+8a1a93a pypi_0 pypi

[conda] torch-tensorrt 1.1.0a0 pypi_0 pypi

[conda] torchtext 0.13.0a0 pypi_0 pypi

[conda] torchtyping 0.1.4 pypi_0 pypi

[conda] torchvision 0.13.0a0 pypi_0 pypi

Track log

/opt/conda/lib/python3.8/site-packages/apex/pyprof/__init__.py:5: FutureWarning: pyprof will be removed by the end of June, 2022

warnings.warn("pyprof will be removed by the end of June, 2022", FutureWarning)

[2023-03-09 02:23:09,449] [WARNING] [runner.py:186:fetch_hostfile] Unable to find hostfile, will proceed with training with local resources only.

[2023-03-09 02:23:09,494] [INFO] [runner.py:548:main] cmd = /opt/conda/bin/python3.8 -u -m deepspeed.launcher.launch --world_info=eyJsb2NhbGhvc3QiOiBbMF19 --master_addr=127.0.0.1 --master_port=29500 --enable_each_rank_log=None train_gptj_summarize.py

/opt/conda/lib/python3.8/site-packages/apex/pyprof/__init__.py:5: FutureWarning: pyprof will be removed by the end of June, 2022

warnings.warn("pyprof will be removed by the end of June, 2022", FutureWarning)

[2023-03-09 02:23:10,864] [INFO] [launch.py:135:main] 0 NCCL_VERSION=2.12.10+cuda11.6

[2023-03-09 02:23:10,864] [INFO] [launch.py:142:main] WORLD INFO DICT: {'localhost': [0]}

[2023-03-09 02:23:10,864] [INFO] [launch.py:148:main] nnodes=1, num_local_procs=1, node_rank=0

[2023-03-09 02:23:10,864] [INFO] [launch.py:161:main] global_rank_mapping=defaultdict(<class 'list'>, {'localhost': [0]})

[2023-03-09 02:23:10,864] [INFO] [launch.py:162:main] dist_world_size=1

[2023-03-09 02:23:10,864] [INFO] [launch.py:164:main] Setting CUDA_VISIBLE_DEVICES=0

/opt/conda/lib/python3.8/site-packages/apex/pyprof/__init__.py:5: FutureWarning: pyprof will be removed by the end of June, 2022

warnings.warn("pyprof will be removed by the end of June, 2022", FutureWarning)

/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py:1402: UserWarning: positional arguments and argument "destination" are deprecated. nn.Module.state_dict will not accept them in the future. Refer to https://pytorch.org/docs/master/generated/torch.nn.Module.html#torch.nn.Module.state_dict for details.

warnings.warn(

Found cached dataset parquet (/root/.cache/huggingface/datasets/CarperAI___parquet/CarperAI--openai_summarize_tldr-536d9955f5e6f921/0.0.0/2a3b91fbd88a2c90d1dbbb32b460cf621d31bd5b05b934492fdef7d8d6f236ec)

Found cached dataset parquet (/root/.cache/huggingface/datasets/CarperAI___parquet/CarperAI--openai_summarize_tldr-536d9955f5e6f921/0.0.0/2a3b91fbd88a2c90d1dbbb32b460cf621d31bd5b05b934492fdef7d8d6f236ec)

[2023-03-09 02:23:33,945] [INFO] [comm.py:657:init_distributed] Initializing TorchBackend in DeepSpeed with backend nccl

Using the `WANDB_DISABLED` environment variable is deprecated and will be removed in v5. Use the --report_to flag to control the integrations used for logging result (for instance --report_to none).

Using True half precision backend

[2023-03-09 02:23:33,996] [INFO] [logging.py:75:log_dist] [Rank 0] DeepSpeed info: version=0.8.1, git-hash=unknown, git-branch=unknown

[2023-03-09 02:23:35,259] [INFO] [logging.py:75:log_dist] [Rank 0] DeepSpeed Flops Profiler Enabled: False

Using /root/.cache/torch_extensions/py38_cu117 as PyTorch extensions root...

Detected CUDA files, patching ldflags

Emitting ninja build file /root/.cache/torch_extensions/py38_cu117/cpu_adam/build.ninja...

Building extension module cpu_adam...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

[1/3] /usr/local/cuda/bin/nvcc -DTORCH_EXTENSION_NAME=cpu_adam -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1013\" -I/opt/conda/lib/python3.8/site-packages/deepspeed/ops/csrc/includes -I/usr/local/cuda/include -isystem /opt/conda/lib/python3.8/site-packages/torch/include -isystem /opt/conda/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -isystem /opt/conda/lib/python3.8/site-packages/torch/include/TH -isystem /opt/conda/lib/python3.8/site-packages/torch/include/THC -isystem /usr/local/cuda/include -isystem /opt/conda/include/python3.8 -D_GLIBCXX_USE_CXX11_ABI=1 -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr -gencode=arch=compute_86,code=compute_86 -gencode=arch=compute_86,code=sm_86 --compiler-options '-fPIC' -O3 --use_fast_math -std=c++14 -U__CUDA_NO_HALF_OPERATORS__ -U__CUDA_NO_HALF_CONVERSIONS__ -U__CUDA_NO_HALF2_OPERATORS__ -gencode=arch=compute_86,code=sm_86 -gencode=arch=compute_86,code=compute_86 -c /opt/conda/lib/python3.8/site-packages/deepspeed/ops/csrc/common/custom_cuda_kernel.cu -o custom_cuda_kernel.cuda.o

[2/3] c++ -MMD -MF cpu_adam.o.d -DTORCH_EXTENSION_NAME=cpu_adam -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1013\" -I/opt/conda/lib/python3.8/site-packages/deepspeed/ops/csrc/includes -I/usr/local/cuda/include -isystem /opt/conda/lib/python3.8/site-packages/torch/include -isystem /opt/conda/lib/python3.8/site-packages/torch/include/torch/csrc/api/include -isystem /opt/conda/lib/python3.8/site-packages/torch/include/TH -isystem /opt/conda/lib/python3.8/site-packages/torch/include/THC -isystem /usr/local/cuda/include -isystem /opt/conda/include/python3.8 -D_GLIBCXX_USE_CXX11_ABI=1 -fPIC -std=c++14 -O3 -std=c++14 -g -Wno-reorder -L/usr/local/cuda/lib64 -lcudart -lcublas -g -march=native -fopenmp -D__AVX256__ -D__ENABLE_CUDA__ -c /opt/conda/lib/python3.8/site-packages/deepspeed/ops/csrc/adam/cpu_adam.cpp -o cpu_adam.o

[3/3] c++ cpu_adam.o custom_cuda_kernel.cuda.o -shared -lcurand -L/opt/conda/lib/python3.8/site-packages/torch/lib -lc10 -lc10_cuda -ltorch_cpu -ltorch_cuda -ltorch -ltorch_python -L/usr/local/cuda/lib64 -lcudart -o cpu_adam.so

Loading extension module cpu_adam...

Time to load cpu_adam op: 16.64772057533264 seconds

Adam Optimizer #0 is created with AVX2 arithmetic capability.

Config: alpha=0.000010, betas=(0.900000, 0.950000), weight_decay=0.000000, adam_w=1

[2023-03-09 02:23:54,821] [INFO] [logging.py:75:log_dist] [Rank 0] Using DeepSpeed Optimizer param name adamw as basic optimizer

[2023-03-09 02:23:54,825] [INFO] [logging.py:75:log_dist] [Rank 0] DeepSpeed Basic Optimizer = DeepSpeedCPUAdam

[2023-03-09 02:23:54,825] [INFO] [utils.py:53:is_zero_supported_optimizer] Checking ZeRO support for optimizer=DeepSpeedCPUAdam type=<class 'deepspeed.ops.adam.cpu_adam.DeepSpeedCPUAdam'>

[2023-03-09 02:23:54,825] [INFO] [logging.py:75:log_dist] [Rank 0] Creating torch.float16 ZeRO stage 2 optimizer

[2023-03-09 02:23:54,825] [INFO] [stage_1_and_2.py:144:__init__] Reduce bucket size 500,000,000

[2023-03-09 02:23:54,825] [INFO] [stage_1_and_2.py:145:__init__] Allgather bucket size 500000000

[2023-03-09 02:23:54,826] [INFO] [stage_1_and_2.py:146:__init__] CPU Offload: True

[2023-03-09 02:23:54,826] [INFO] [stage_1_and_2.py:147:__init__] Round robin gradient partitioning: False

Using /root/.cache/torch_extensions/py38_cu117 as PyTorch extensions root...

Emitting ninja build file /root/.cache/torch_extensions/py38_cu117/utils/build.ninja...

Building extension module utils...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

ninja: no work to do.

Loading extension module utils...

Time to load utils op: 0.08072209358215332 seconds

Rank: 0 partition count [1] and sizes[(125198592, False)]

╭─────────────────────────────── Traceback (most recent call last) ────────────────────────────────╮

│ /mnt/e/trlx/examples/summarize_rlhf/sft/train_gptj_summarize.py:112 in <module> │

│ │

│ 109 │ │ data_collator=default_data_collator, │

│ 110 │ │ preprocess_logits_for_metrics=preprocess_logits_for_metrics, │

│ 111 │ ) │

│ ❱ 112 │ trainer.train() │

│ 113 │ trainer.save_model(output_dir) │

│ 114 │

│ │

│ /opt/conda/lib/python3.8/site-packages/transformers/trainer.py:1543 in train │

│ │

│ 1540 │ │ inner_training_loop = find_executable_batch_size( │

│ 1541 │ │ │ self._inner_training_loop, self._train_batch_size, args.auto_find_batch_size │

│ 1542 │ │ ) │

│ ❱ 1543 │ │ return inner_training_loop( │

│ 1544 │ │ │ args=args, │

│ 1545 │ │ │ resume_from_checkpoint=resume_from_checkpoint, │

│ 1546 │ │ │ trial=trial, │

│ │

│ /opt/conda/lib/python3.8/site-packages/transformers/trainer.py:1612 in _inner_training_loop │

│ │

│ 1609 │ │ │ or self.fsdp is not None │

│ 1610 │ │ ) │

│ 1611 │ │ if args.deepspeed: │

│ ❱ 1612 │ │ │ deepspeed_engine, optimizer, lr_scheduler = deepspeed_init( │

│ 1613 │ │ │ │ self, num_training_steps=max_steps, resume_from_checkpoint=resume_from_c │

│ 1614 │ │ │ ) │

│ 1615 │ │ │ self.model = deepspeed_engine.module │

│ │

│ /opt/conda/lib/python3.8/site-packages/transformers/deepspeed.py:344 in deepspeed_init │

│ │

│ 341 │ │ lr_scheduler=lr_scheduler, │

│ 342 │ ) │

│ 343 │ │

│ ❱ 344 │ deepspeed_engine, optimizer, _, lr_scheduler = deepspeed.initialize(**kwargs) │

│ 345 │ │

│ 346 │ if resume_from_checkpoint is not None: │

│ 347 │

│ │

│ /opt/conda/lib/python3.8/site-packages/deepspeed/__init__.py:125 in initialize │

│ │

│ 122 │ assert model is not None, "deepspeed.initialize requires a model" │

│ 123 │ │

│ 124 │ if not isinstance(model, PipelineModule): │

│ ❱ 125 │ │ engine = DeepSpeedEngine(args=args, │

│ 126 │ │ │ │ │ │ │ │ model=model, │

│ 127 │ │ │ │ │ │ │ │ optimizer=optimizer, │

│ 128 │ │ │ │ │ │ │ │ model_parameters=model_parameters, │

│ │

│ /opt/conda/lib/python3.8/site-packages/deepspeed/runtime/engine.py:336 in __init__ │

│ │

│ 333 │ │ │ model_parameters = self.module.parameters() │

│ 334 │ │ │

│ 335 │ │ if has_optimizer: │

│ ❱ 336 │ │ │ self._configure_optimizer(optimizer, model_parameters) │

│ 337 │ │ │ self._configure_lr_scheduler(lr_scheduler) │

│ 338 │ │ │ self._report_progress(0) │

│ 339 │ │ elif self.zero_optimization(): │

│ │

│ /opt/conda/lib/python3.8/site-packages/deepspeed/runtime/engine.py:1292 in _configure_optimizer │

│ │

│ 1289 │ │ optimizer_wrapper = self._do_optimizer_sanity_check(basic_optimizer) │

│ 1290 │ │ │

│ 1291 │ │ if optimizer_wrapper == ZERO_OPTIMIZATION: │

│ ❱ 1292 │ │ │ self.optimizer = self._configure_zero_optimizer(basic_optimizer) │

│ 1293 │ │ elif optimizer_wrapper == AMP: │

│ 1294 │ │ │ amp_params = self.amp_params() │

│ 1295 │ │ │ log_dist(f"Initializing AMP with these params: {amp_params}", ranks=[0]) │

│ │

│ /opt/conda/lib/python3.8/site-packages/deepspeed/runtime/engine.py:1542 in │

│ _configure_zero_optimizer │

│ │

│ 1539 │ │ │ │ │ │ "Pipeline parallelism does not support overlapped communication, │

│ 1540 │ │ │ │ │ ) │

│ 1541 │ │ │ │ │ overlap_comm = False │

│ ❱ 1542 │ │ │ optimizer = DeepSpeedZeroOptimizer( │

│ 1543 │ │ │ │ optimizer, │

│ 1544 │ │ │ │ self.param_names, │

│ 1545 │ │ │ │ timers=timers, │

│ │

│ /opt/conda/lib/python3.8/site-packages/deepspeed/runtime/zero/stage_1_and_2.py:451 in __init__ │

│ │

│ 448 │ │ │ self.norm_for_param_grads = {} │

│ 449 │ │ │ self.local_overflow = False │

│ 450 │ │ │ self.grad_position = {} │

│ ❱ 451 │ │ │ self.temp_grad_buffer_for_cpu_offload = get_accelerator().pin_memory( │

│ 452 │ │ │ │ torch.zeros(largest_param_numel, │

│ 453 │ │ │ │ │ │ │ device=self.device, │

│ 454 │ │ │ │ │ │ │ dtype=self.dtype)) │

│ │

│ /opt/conda/lib/python3.8/site-packages/deepspeed/accelerator/cuda_accelerator.py:214 in │

│ pin_memory │

│ │

│ 211 │ │ return torch.cuda.LongTensor │

│ 212 │ │

│ 213 │ def pin_memory(self, tensor): │

│ ❱ 214 │ │ return tensor.pin_memory() │

│ 215 │ │

│ 216 │ def on_accelerator(self, tensor): │

│ 217 │ │ device_str = str(tensor.device) │

╰──────────────────────────────────────────────────────────────────────────────────────────────────╯

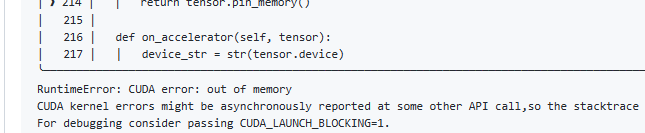

RuntimeError: CUDA error: out of memory

CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

[2023-03-09 02:23:56,918] [INFO] [launch.py:318:sigkill_handler] Killing subprocess 735

[2023-03-09 02:23:56,918] [ERROR] [launch.py:324:sigkill_handler] ['/opt/conda/bin/python3.8', '-u', 'train_gptj_summarize.py', '--local_rank=0'] exits with return code = 1

ds config

{
  "train_batch_size": 1,
  "fp16": {
    "enabled": true,
    "min_loss_scale": 1,
    "opt_level": "O2"
  },
  "zero_optimization": {
    "stage": 2,
    "offload_param": {
      "device": "cpu",
      "pin_memory": true
    },
    "offload_optimizer": {
      "device": "cpu",
      "pin_memory": true
    },
    "allgather_partitions": true,
    "allgather_bucket_size": 5e8,
    "contiguous_gradients": true
  },
  "optimizer": {
    "type": "AdamW",
    "params": {
      "lr": 1e-05,
      "betas": [
        0.9,
        0.95
      ],
      "eps": 1e-08,
      "torch_adam": false
    }
  },
  "scheduler": {
    "type": "WarmupLR",
    "params": {
      "warmup_min_lr": 0,
      "warmup_max_lr": 1e-05,
      "warmup_num_steps": "auto"
    }
  }
}

Is CPU offload not supported on WSL2? I can run the same code successfully on another machine with a V100 GPU.

Originally posted by @lpty in https://github.com/microsoft/DeepSpeed/issues/2676#issuecomment-1461177861

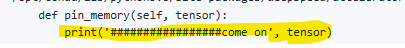

After I edited this code, it works. Does anybody know why?

/opt/conda/lib/python3.8/site-packages/deepspeed/accelerator/cuda_accelerator.py

def pin_memory(self, tensor):
    print('#################come on', tensor)
    #return tensor.pin_memory()
    return tensor

log

Loading extension module utils...

Time to load utils op: 0.07759284973144531 seconds

Rank: 0 partition count [1] and sizes[(1315575808, False)]

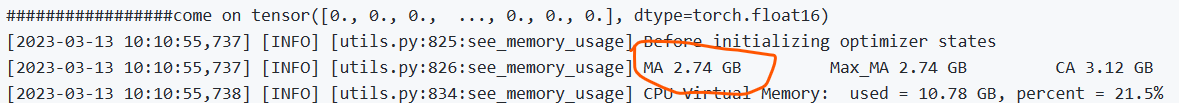

#################come on tensor([0., 0., 0., ..., 0., 0., 0.], dtype=torch.float16)

[2023-03-13 10:10:55,737] [INFO] [utils.py:825:see_memory_usage] Before initializing optimizer states

[2023-03-13 10:10:55,737] [INFO] [utils.py:826:see_memory_usage] MA 2.74 GB Max_MA 2.74 GB CA 3.12 GB Max_CA 3 GB

[2023-03-13 10:10:55,738] [INFO] [utils.py:834:see_memory_usage] CPU Virtual Memory: used = 10.78 GB, percent = 21.5%

#################come on tensor([0., 0., 0., ..., 0., 0., 0.])

[2023-03-13 10:10:58,351] [INFO] [utils.py:825:see_memory_usage] After initializing optimizer states

[2023-03-13 10:10:58,351] [INFO] [utils.py:826:see_memory_usage] MA 2.74 GB Max_MA 2.74 GB CA 3.12 GB Max_CA 3 GB

[2023-03-13 10:10:58,352] [INFO] [utils.py:834:see_memory_usage] CPU Virtual Memory: used = 25.51 GB, percent = 51.0%

[2023-03-13 10:10:58,352] [INFO] [stage_1_and_2.py:527:__init__] optimizer state initialized

[2023-03-13 10:10:58,431] [INFO] [utils.py:825:see_memory_usage] After initializing ZeRO optimizer

[2023-03-13 10:10:58,432] [INFO] [utils.py:826:see_memory_usage] MA 2.74 GB Max_MA 2.74 GB CA 3.12 GB Max_CA 3 GB

[2023-03-13 10:10:58,432] [INFO] [utils.py:834:see_memory_usage] CPU Virtual Memory: used = 25.51 GB, percent = 51.0%

[2023-03-13 10:10:58,436] [INFO] [logging.py:75:log_dist] [Rank 0] DeepSpeed Final Optimizer = adamw

[2023-03-13 10:10:58,437] [INFO] [logging.py:75:log_dist] [Rank 0] DeepSpeed using configured LR scheduler = WarmupLR

[2023-03-13 10:10:58,437] [INFO] [logging.py:75:log_dist] [Rank 0] DeepSpeed LR Scheduler = <deepspeed.runtime.lr_schedules.WarmupLR object at 0x7f05d9572d9

pin_memory is only called twice during training.

@lpty, thanks for reporting this issue and sharing your investigation. Unfortunately, pin_memory can be memory-inefficient as it requires page-locked memory. We are working to improve this issue.

By the way, can you confirm the model size? The following log snippet suggests a ~1.3B model and not 125M:

@tjruwase Yes, this is a 1.3B model, but the 125M model also reports the error. What I found is that the failure comes from the pin_memory call, which is strange.

@lpty, thanks for the update. I think we have not previously seen this issue because we have not tested pin_memory on WSL.

Can you please try the following to observe the behavior of pin_memory in your environment?

- Re-enable tensor.pin_memory()
- Profile memory usage by adding see_memory_usage() before and after tensor.pin_memory(). You can see an example here.
- Share the log.

Thanks!
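To separate the behavior of `pin_memory()` from the rest of DeepSpeed, it may also help to try pinning a CPU tensor in isolation inside the same container. The following is a minimal sketch under stated assumptions: `try_pin` is a hypothetical helper, the sizes are arbitrary, and it uses only `torch.zeros`, `Tensor.pin_memory()`, and `Tensor.is_pinned()`. It returns a status string instead of crashing so that several sizes can be compared in one run.

```python
def try_pin(numel: int) -> str:
    """Allocate an fp16 CPU tensor of `numel` elements and try to pin it.

    Hypothetical diagnostic helper; returns a short status string.
    """
    try:
        import torch
    except ImportError:
        return "torch-missing"

    tensor = torch.zeros(numel, dtype=torch.float16)  # allocated on the CPU
    try:
        pinned = tensor.pin_memory()
    except RuntimeError as err:
        # On the failing system this is where "CUDA error: out of memory" would surface.
        return f"failed: {err}"
    return "pinned" if pinned.is_pinned() else "not-pinned"


if __name__ == "__main__":
    # Arbitrary sizes, from 2 KiB up to 64 MiB of fp16 data.
    for numel in (1 << 10, 1 << 20, 1 << 25):
        print(numel, try_pin(numel))
```

If even the smallest size fails, the problem is likely in the environment's page-locked memory support rather than in the size of the DeepSpeed buffer.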

@tjruwase It raises RuntimeError: CUDA error: out of memory

[2023-03-24 13:10:21,979] [INFO] [logging.py:75:log_dist] [Rank 0] Using DeepSpeed Optimizer param name adamw as basic optimizer

[2023-03-24 13:10:21,983] [INFO] [logging.py:75:log_dist] [Rank 0] DeepSpeed Basic Optimizer = DeepSpeedCPUAdam

[2023-03-24 13:10:21,984] [INFO] [utils.py:53:is_zero_supported_optimizer] Checking ZeRO support for optimizer=DeepSpeedCPUAdam type=<class 'deepspeed.ops.adam.cpu_adam.DeepSpeedCPUAdam'>

[2023-03-24 13:10:21,984] [INFO] [logging.py:75:log_dist] [Rank 0] Creating torch.float16 ZeRO stage 2 optimizer

[2023-03-24 13:10:21,984] [INFO] [stage_1_and_2.py:144:__init__] Reduce bucket size 500,000,000

[2023-03-24 13:10:21,984] [INFO] [stage_1_and_2.py:145:__init__] Allgather bucket size 500000000

[2023-03-24 13:10:21,984] [INFO] [stage_1_and_2.py:146:__init__] CPU Offload: True

[2023-03-24 13:10:21,984] [INFO] [stage_1_and_2.py:147:__init__] Round robin gradient partitioning: False

Using /root/.cache/torch_extensions/py38_cu117 as PyTorch extensions root...

Emitting ninja build file /root/.cache/torch_extensions/py38_cu117/utils/build.ninja...

Building extension module utils...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

ninja: no work to do.

Loading extension module utils...

Time to load utils op: 0.07312488555908203 seconds

Rank: 0 partition count [1] and sizes[(125198592, False)]

#################come on tensor([0., 0., 0., ..., 0., 0., 0.], dtype=torch.float16)

[2023-03-24 13:10:22,501] [INFO] [utils.py:825:see_memory_usage] before

[2023-03-24 13:10:22,501] [INFO] [utils.py:826:see_memory_usage] MA 0.28 GB Max_MA 0.28 GB CA 0.45 GB Max_CA 0 GB

[2023-03-24 13:10:22,502] [INFO] [utils.py:834:see_memory_usage] CPU Virtual Memory: used = 5.53 GB, percent = 11.1%

@lpty, thanks for sharing. I presume it got the original OOM reported in this issue?

I suspect that OOM is actually in CPU memory despite the "CUDA" in the error message. This is because pin_memory() is defined only for CPU tensors. To confirm this, can you please add tensor.numel() and tensor.device to the following print?

@tjruwase CPU memory usage is only about 10%, so there should be enough to hold this tensor.

Rank: 0 partition count [1] and sizes[(125198592, False)]

#################come on tensor([0., 0., 0., ..., 0., 0., 0.], dtype=torch.float16) 38597376 cpu

[2023-03-25 10:50:54,882] [INFO] [utils.py:825:see_memory_usage] before

[2023-03-25 10:50:54,882] [INFO] [utils.py:826:see_memory_usage] MA 0.28 GB Max_MA 0.28 GB CA 0.45 GB Max_CA 0 GB

[2023-03-25 10:50:54,882] [INFO] [utils.py:834:see_memory_usage] CPU Virtual Memory: used = 5.17 GB, percent = 10.3%

...

│ /opt/conda/lib/python3.8/site-packages/deepspeed/accelerator/cuda_accelerator.py:217 in │

│ pin_memory │

│ │

│ 214 │ │ print('#################come on', tensor, tensor.numel(), tensor.device) │

│ 215 │ │ from deepspeed.runtime.utils import see_memory_usage │

│ 216 │ │ see_memory_usage(f'before', force=True) │

│ ❱ 217 │ │ return tensor.pin_memory() │

│ 218 │ │ return tensor │

│ 219 │ │

│ 220 │ def on_accelerator(self, tensor): │

╰──────────────────────────────────────────────────────────────────────────────────────────────────╯

RuntimeError: CUDA error: out of memory
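For scale, the size of the buffer being pinned can be worked out from the `numel` and dtype printed in the log above. The short calculation below uses only those two values. The observation that the element count equals 50257 x 768 (which would match a GPT-Neo-125M token embedding matrix) is an inference, not something the log states.

```python
numel = 38_597_376        # tensor.numel() as printed in the log above
bytes_per_element = 2     # torch.float16 uses 2 bytes per element

size_bytes = numel * bytes_per_element
size_mib = size_bytes / 2**20

print(f"{size_bytes} bytes = {size_mib:.2f} MiB")
# prints: 77194752 bytes = 73.62 MiB

# Inference only: the count factors as vocabulary size x hidden size.
assert 50257 * 768 == numel
```

A buffer of roughly 74 MiB is small next to the tens of GiB reported as free, which is consistent with the observation that ordinary CPU memory is not exhausted when the error is raised.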

Worked for me, thanks so much.

Anyone having trouble using DeepSpeed for training on WSL2 Ubuntu, this is your hacky fix right here!

@lpty and @robriks, can you please set pin_memory to False in your ds_config and try PR #4131?
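As an illustration of the config change requested above, the sketch below flips `pin_memory` to `False` in the offload sections of a config dict shaped like the ds config posted earlier in this issue. `disable_pin_memory` is a hypothetical helper, and the dict is trimmed to the relevant keys. It says nothing about what PR #4131 itself changes.

```python
import copy
import json


def disable_pin_memory(ds_config: dict) -> dict:
    """Return a copy of `ds_config` with pin_memory=False in each offload section.

    Hypothetical helper; the key names follow the ds config posted in this issue.
    """
    cfg = copy.deepcopy(ds_config)
    zero = cfg.get("zero_optimization", {})
    for section in ("offload_param", "offload_optimizer"):
        if section in zero:
            zero[section]["pin_memory"] = False
    return cfg


# Trimmed version of the config from this issue (only the relevant keys).
ds_config = {
    "zero_optimization": {
        "stage": 2,
        "offload_param": {"device": "cpu", "pin_memory": True},
        "offload_optimizer": {"device": "cpu", "pin_memory": True},
    }
}

if __name__ == "__main__":
    print(json.dumps(disable_pin_memory(ds_config), indent=2))
```

The helper returns a modified copy, so the original config stays available for an A/B comparison between pinned and unpinned runs.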