Question: How to continue training with more or fewer GPUs?

If there are N GPUs, the snapshot contains N optimizer-state files, one per GPU (please correct me if I have misunderstood). How can I then continue training with more GPUs, say 2N? Is there an easy way to consolidate the optimizer states?

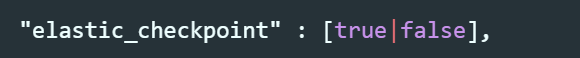

Yes, the N optimizer-state files correspond to the N GPUs. We are working on a feature to support changing the number of GPUs between training runs. Can you try setting the following parameter to true in your ZeRO config?

Are there any resources on how to do this manually?

@itsnamgyu, please see the in-development feature called Universal Checkpointing: https://github.com/microsoft/Megatron-DeepSpeed/blob/main/examples_deepspeed/universal_checkpointing/README.md
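To illustrate what "doing this manually" involves conceptually, here is a minimal sketch of resharding one partitioned optimizer-state buffer from N ranks to a different number of ranks. It assumes, as a simplification, that each rank holds an equal, contiguous slice of a single flat buffer (for example an Adam `exp_avg` tensor), zero-padded at the end so it divides evenly, and that the original unpadded length `numel` is known. This is not DeepSpeed's on-disk checkpoint format or API. Real ZeRO checkpoints also store fp32 master weights, several state tensors per parameter group, and partition bookkeeping. The helper names `merge_partitions`, `split_partitions`, and `reshard` are hypothetical.

```python
from typing import List


def merge_partitions(partitions: List[List[float]], numel: int) -> List[float]:
    """Concatenate per-rank slices of a flat buffer and drop the trailing padding.

    Assumption (hypothetical layout): rank r holds the r-th contiguous slice.
    """
    flat = [x for part in partitions for x in part]
    return flat[:numel]


def split_partitions(flat: List[float], world_size: int,
                     pad_value: float = 0.0) -> List[List[float]]:
    """Split a flat buffer evenly across world_size ranks, padding the tail."""
    part_size = -(-len(flat) // world_size)  # ceiling division
    padded = flat + [pad_value] * (part_size * world_size - len(flat))
    return [padded[r * part_size:(r + 1) * part_size] for r in range(world_size)]


def reshard(partitions: List[List[float]], numel: int,
            new_world_size: int) -> List[List[float]]:
    """Consolidate N partitions into one buffer, then re-split for the new rank count."""
    return split_partitions(merge_partitions(partitions, numel), new_world_size)


# Example: a 10-element state buffer saved from 4 ranks, resumed on 8 ranks.
state = [float(i) for i in range(10)]
old_parts = split_partitions(state, 4)     # 4 slices of 3, last one padded
new_parts = reshard(old_parts, 10, 8)      # 8 slices of 2, last three all padding
```

The key design point is that the padding must be stripped before re-splitting: the padded length depends on the world size, so a buffer padded for 4 ranks is not correctly padded for 8. Each parameter group (and each state tensor within it) would need this treatment separately.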