Question about how to calculate the relative rotation between two shots.

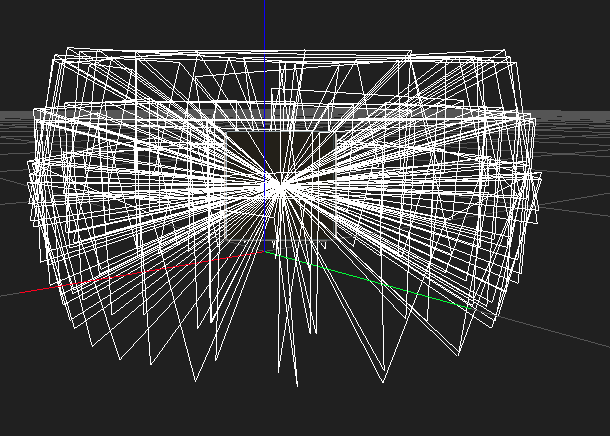

Hello, OpenSfM is great work, thank you. I use OpenSfM to reconstruct the camera positions for a set of images. The images were taken by a fixed PTZ camera: the camera rotates about the yaw axis, takes 20 images, adjusts the pitch angle, and repeats this three times. The reconstruction looks good, as shown in the figure.

Now I want to calculate the relative rotation between two of the images. The code is as follows:

    reconstruction = data.load_reconstruction()[0]
    mov_shot_pose = reconstruction.shots["20.jpg"].pose
    fixed_pose = reconstruction.shots["0.jpg"].pose
    relative_pose = mov_shot_pose.relative_to(fixed_pose)
    rotation_matrix = relative_pose.get_rotation_matrix()
    euler_angle = relative_pose.get_R_cam_to_world_min()

But the result is wrong. How should I calculate this relative rotation? I look forward to your reply.

Hi @18242360613 ,

Thanks for using OpenSfM. Since you know what the relative rotation should be, could you please provide the actual numbers of the transformation matrices using:

    print(mov_shot_pose.get_world_to_cam())
    print(fixed_pose.get_world_to_cam())

and then describe the outcome you expect? Working with poses is always a bit tricky because a transpose is easily missed or coordinate systems get mixed up.
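To make the transpose pitfall concrete, here is a minimal NumPy-only sketch. It does not use the OpenSfM API, and the helper names `rot_x`, `rot_z` and `rotation_angle_deg`, as well as the two rotations, are made up for illustration. It builds two world-to-camera rotations that differ by a known 18-degree rotation about the camera x axis, then compares the relative rotation computed with and without the transpose.

```python
import numpy as np


def rot_x(deg):
    """Rotation matrix for a rotation of `deg` degrees about the x axis."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_z(deg):
    """Rotation matrix for a rotation of `deg` degrees about the z axis."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def rotation_angle_deg(R):
    """Total rotation angle of a 3x3 rotation matrix, in degrees."""
    cos_angle = np.clip(0.5 * (np.trace(R) - 1.0), -1.0, 1.0)
    return np.degrees(np.arccos(cos_angle))


# Hypothetical world-to-camera rotations of two shots.
R_a = rot_x(90.0) @ rot_z(30.0)
R_b = rot_x(18.0) @ R_a  # shot B: extra 18 degrees about the camera x axis

# Relative rotation from camera A to camera B: note the transpose (inverse).
angle_correct = rotation_angle_deg(R_b @ R_a.T)

# Forgetting the transpose composes the two rotations instead.
angle_missing_transpose = rotation_angle_deg(R_b @ R_a)

print(angle_correct, angle_missing_transpose)
```

With the transpose the known 18 degrees is recovered; without it the result is an unrelated angle.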

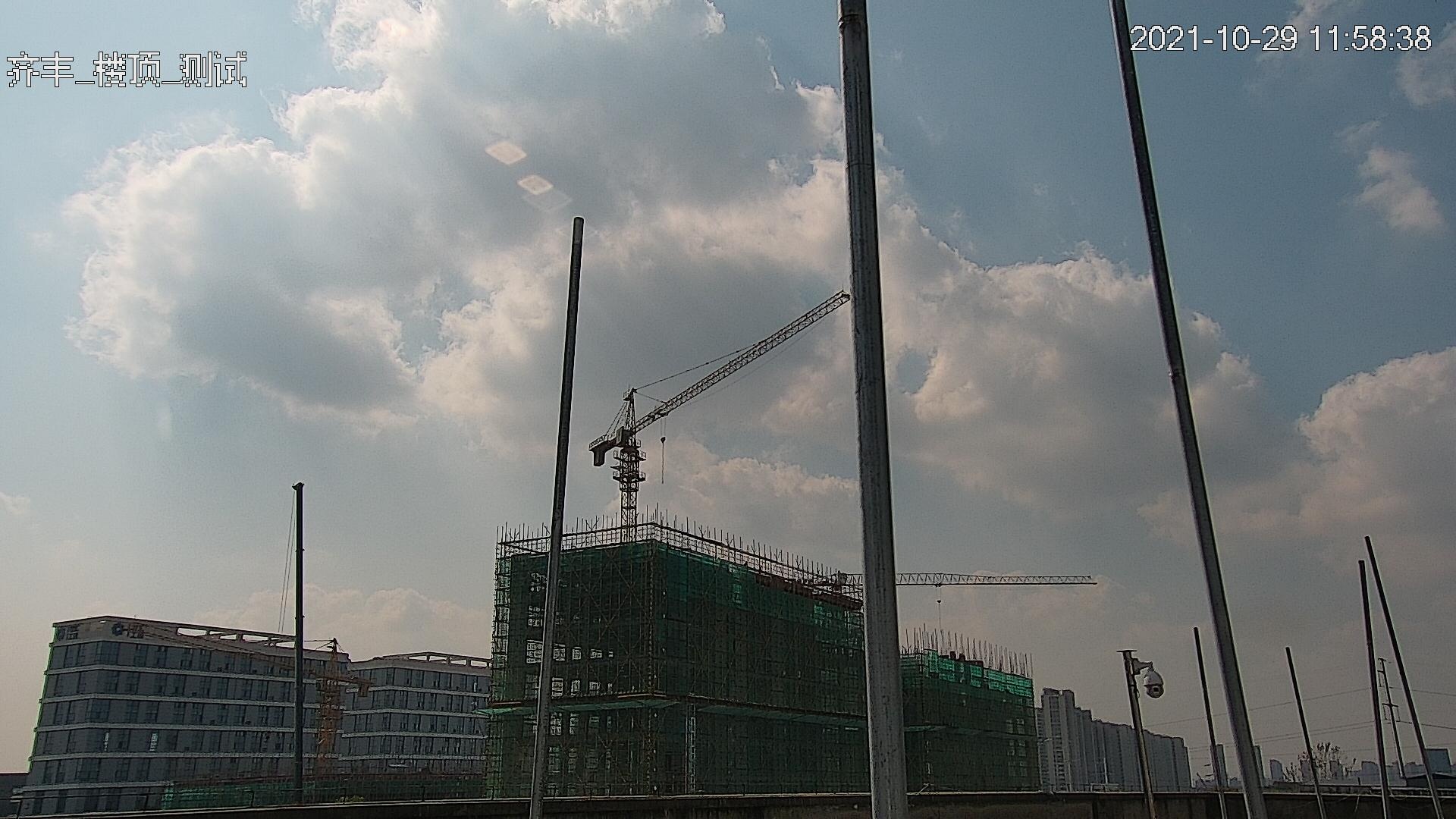

Hi @fabianschenk, in my case "0.jpg" is the fixed image and "20.jpg" is the moving image. When these two images were taken, the only difference was the pitch angle, about 18 degrees. "0.jpg" is shown in the following figure:

"20.jpg" is shown in the following figure:

I used the visualization tool to carefully check their relative position in reconstruction.json, which is basically reasonable.

    print(mov_shot_pose.get_world_to_cam())
    print(fixed_pose.get_world_to_cam())

The outputs of the above code are:

    [[ 9.44438287e-01 -3.28688792e-01 -3.97867187e-06  1.98456206e-01]
     [-3.57780147e-06  1.82439801e-06 -1.00000000e+00  2.27471692e-01]
     [ 3.28688792e-01  9.44438287e-01  5.47048083e-07  3.45422822e-01]
     [ 0.00000000e+00  0.00000000e+00  0.00000000e+00  1.00000000e+00]]

    [[ 9.43526023e-01 -3.31298417e-01 -4.70005828e-05  1.97528613e-01]
     [-3.62918063e-05  3.85100511e-05 -9.99999999e-01  2.25654272e-01]
     [ 3.31298418e-01  9.43526024e-01  2.43118174e-05  3.45971994e-01]
     [ 0.00000000e+00  0.00000000e+00  0.00000000e+00  1.00000000e+00]]

The output of `relative_pose.get_R_cam_to_world_min()` is:

    [-2.38352199e-05 -2.76448379e-03 -4.29875225e-05]

The calculated value is too small, which confuses me. The expected value is close to 18 degrees.
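One thing worth checking when reading that output: as far as I can tell, `get_R_cam_to_world_min()` returns a rotation vector (rotation axis scaled by the angle in radians) rather than Euler angles, so the total rotation is the norm of the vector. The sketch below is NumPy-only, uses the three numbers posted above, and the variable names are my own.

```python
import numpy as np

# Value printed by relative_pose.get_R_cam_to_world_min() in this thread,
# interpreted here as an axis-angle rotation vector (an assumption).
rotvec = np.array([-2.38352199e-05, -2.76448379e-03, -4.29875225e-05])

# The norm of a rotation vector is the rotation angle in radians.
angle_rad = np.linalg.norm(rotvec)
angle_deg = np.degrees(angle_rad)

# The normalized vector is the rotation axis.
axis = rotvec / angle_rad

# For comparison: the norm an 18-degree rotation would have.
expected_rad = np.radians(18.0)

print("angle [deg]:", angle_deg)
print("axis:", axis)
print("norm expected for 18 deg [rad]:", expected_rad)
```

Under that interpretation the vector describes a rotation of roughly 0.16 degrees, almost entirely about the y axis, while an 18-degree rotation would have a norm of about 0.31.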

Hi @18242360613 ,

Sorry for the late response. I've also tried to find the angle from the poses but came to the same result. Maybe you selected the wrong shots and the angle between them is really that small? Here's the code I used:

    import numpy as np
    from opensfm import pygeometry

    # World-to-camera transform of the moving shot ("20.jpg"), as posted above.
    mov_shot = pygeometry.Pose()
    T1 = np.array(
        [
            [9.44438287e-01, -3.28688792e-01, -3.97867187e-06, 1.98456206e-01],
            [-3.57780147e-06, 1.82439801e-06, -1.00000000e00, 2.27471692e-01],
            [3.28688792e-01, 9.44438287e-01, 5.47048083e-07, 3.45422822e-01],
            [0.00000000e00, 0.00000000e00, 0.00000000e00, 1.00000000e00],
        ]
    )
    mov_shot.set_from_world_to_cam(T1)

    # World-to-camera transform of the fixed shot ("0.jpg"), as posted above.
    T2 = np.array(
        [
            [9.43526023e-01, -3.31298417e-01, -4.70005828e-05, 1.97528613e-01],
            [-3.62918063e-05, 3.85100511e-05, -9.99999999e-01, 2.25654272e-01],
            [3.31298418e-01, 9.43526024e-01, 2.43118174e-05, 3.45971994e-01],
            [0.00000000e00, 0.00000000e00, 0.00000000e00, 1.00000000e00],
        ]
    )
    fixed_shot = pygeometry.Pose()
    fixed_shot.set_from_world_to_cam(T2)

    # Transform from the moving camera frame to the fixed camera frame.
    T_fixed_mov = fixed_shot.get_world_to_cam().dot(mov_shot.get_cam_to_world())
    R_fixed_mov = T_fixed_mov[:3, :3]

    # Rotation angle from the trace of the rotation matrix.
    print(np.degrees(np.arccos(0.5 * (R_fixed_mov.trace() - 1))))

Output in degrees:

0.15841516161644922
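As a cross-check on whether the intended shots were picked, one can also compare the viewing directions of the two cameras directly. This sketch assumes the common convention that the camera looks along its +z axis, so the optical axis in world coordinates is the third row of the world-to-camera rotation. It needs only NumPy and the rotation parts of the two matrices posted above; the variable names are mine.

```python
import numpy as np

# Rotation parts (upper-left 3x3) of the two world-to-camera matrices above.
R_mov = np.array(
    [
        [9.44438287e-01, -3.28688792e-01, -3.97867187e-06],
        [-3.57780147e-06, 1.82439801e-06, -1.00000000e00],
        [3.28688792e-01, 9.44438287e-01, 5.47048083e-07],
    ]
)
R_fixed = np.array(
    [
        [9.43526023e-01, -3.31298417e-01, -4.70005828e-05],
        [-3.62918063e-05, 3.85100511e-05, -9.99999999e-01],
        [3.31298418e-01, 9.43526024e-01, 2.43118174e-05],
    ]
)

# Optical axis in world coordinates: R.T @ [0, 0, 1], i.e. the third row of R
# (assuming the camera looks along +z).
axis_mov = R_mov[2]
axis_fixed = R_fixed[2]

# atan2 of cross and dot products is better conditioned than arccos
# when the angle is very small.
cross_norm = np.linalg.norm(np.cross(axis_mov, axis_fixed))
dot = np.dot(axis_mov, axis_fixed)
angle_between_axes_deg = np.degrees(np.arctan2(cross_norm, dot))

print(angle_between_axes_deg)
```

If the two shots really differed by about 18 degrees of pitch, the optical axes should be about 18 degrees apart. Here they come out well under one degree apart, which fits the suspicion that these two poses belong to nearly identical views.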