Multi-GPU demo fails on two A6000s

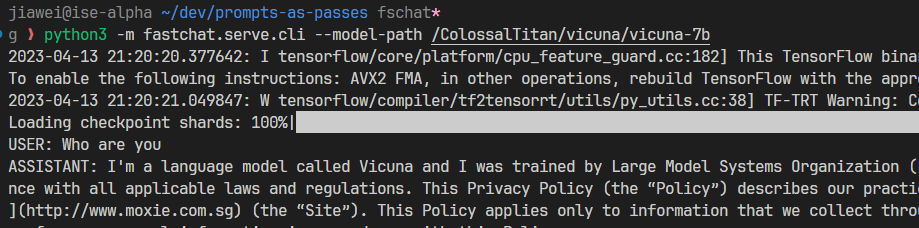

Thanks for the great work! Following the tutorial, I can successfully run Vicuna on a single A6000:

However, when I tried to speed things up with 2 GPUs, it crashed. Running `CUDA_LAUNCH_BLOCKING=1 python3 -m fastchat.serve.cli --model-path /path/to/7b-model --num-gpus 2` on two A6000s, it crashed with:

Did I misconfigure something on my side? Thanks!

```
$ pip show fschat
Name: fschat
Version: 0.2.1
Summary: An open platform for training, serving, and evaluating large language model based chatbots.
Home-page:
Author:
Author-email:
License:
Location: /home/jiawei/.conda/envs/g/lib/python3.8/site-packages
Requires: accelerate, fastapi, gradio, markdown2, numpy, prompt-toolkit, requests, rich, sentencepiece, tokenizers, torch, uvicorn, wandb

$ pip show transformers
Name: transformers
Version: 4.29.0.dev0
Summary: State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow
Home-page: https://github.com/huggingface/transformers
Author: The Hugging Face team (past and future) with the help of all our contributors (https://github.com/huggingface/transformers/graphs/contributors)
Author-email: transformers@huggingface.co
License: Apache 2.0 License
Location: /home/jiawei/.conda/envs/g/lib/python3.8/site-packages
Requires: filelock, huggingface-hub, numpy, packaging, pyyaml, regex, requests, tokenizers, tqdm
```

How much VRAM does your GPU have? By default, FastChat caps memory at 13GiB per GPU when using multiple GPUs, which gives a total of only 26GiB with two GPUs, and that is not enough.
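As a rough sanity check on that budget, here is a minimal Python sketch of the weight-size arithmetic. It assumes fp16 weights at 2 bytes per parameter and nominal counts of 7e9 and 13e9 parameters (assumptions, not figures from this thread), and it ignores activations, the KV cache, and CUDA context overhead, so real usage will be higher.

```python
# Rough estimate of the GPU memory needed just to hold model weights.
# Assumptions (not from the thread): fp16 weights at 2 bytes per parameter,
# nominal parameter counts of 7e9 and 13e9, and no allowance for
# activations, the KV cache, or CUDA context overhead.

GIB = 2**30


def weight_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the size of the raw weights in GiB."""
    return num_params * bytes_per_param / GIB


def fits(num_params: float, per_gpu_gib: float, num_gpus: int) -> bool:
    """True if the weights alone fit in the combined per-GPU memory caps."""
    return weight_gib(num_params) <= per_gpu_gib * num_gpus


for name, params in [("7B", 7e9), ("13B", 13e9)]:
    need = weight_gib(params)
    print(f"{name}: ~{need:.1f} GiB of fp16 weights; "
          f"fits in 2 x 13GiB: {fits(params, 13, 2)}")
```

Under these assumptions the weights alone fit in 2 x 13GiB for both sizes, but the 13B model leaves less than 2GiB of combined headroom for everything else.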

The A6000 has 48GB of VRAM.

Can you try changing this line to "40GiB"? https://github.com/lm-sys/FastChat/blob/e112299e3c3529de0c430b98fd24743296d273f4/fastchat/serve/inference.py#L29
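For context, here is a minimal sketch of what a configurable cap could look like, assuming the linked line feeds a per-GPU `max_memory` dict to Hugging Face `from_pretrained` together with `device_map="auto"` (both are real `from_pretrained` options). `build_load_kwargs` is a hypothetical helper, not FastChat's actual code.

```python
from typing import Any, Dict


def build_load_kwargs(num_gpus: int, max_gpu_memory: str = "13GiB") -> Dict[str, Any]:
    """Hypothetical helper: kwargs for a multi-GPU from_pretrained call.

    transformers accepts device_map="auto" together with a max_memory dict
    mapping each GPU index to a size string such as "40GiB"; accelerate then
    tries to place layers so that no GPU exceeds its cap.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be >= 1")
    if num_gpus == 1:
        # Single GPU: the caller moves the whole model to that device instead.
        return {}
    return {
        "device_map": "auto",
        "max_memory": {i: max_gpu_memory for i in range(num_gpus)},
    }


kwargs = build_load_kwargs(2, max_gpu_memory="40GiB")
print(kwargs)
# With transformers and torch installed, this would then be used as:
# model = AutoModelForCausalLM.from_pretrained(
#     "/path/to/7b-model", torch_dtype=torch.float16, **kwargs)
```

With `"40GiB"`, each A6000 could hold up to 40GiB of the model instead of 13GiB, which still stays below the card's physical memory.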

> How much VRAM does your GPU have? By default, FastChat caps memory at 13GiB per GPU when using multiple GPUs, which gives a total of only 26GiB with two GPUs, and that is not enough.

I have been running the 13B model on 2 GPUs for some time, and that could explain the random (but rare) crashes I've seen. How much memory is enough?

For me it usually takes around 40GiB. It has never worked for me with 2 GPUs at 13GiB each 🤔 What am I doing wrong?

> For me it usually takes around 40GiB. It has never worked for me with 2 GPUs at 13GiB each 🤔 What am I doing wrong?

With two A30s, `nvidia-smi` reports around 14GiB in use on each GPU. I'm using CUDA 11.7 and PyTorch 2.0.0 on Linux.

> Can you try changing this line to "40GiB"?
>
> https://github.com/lm-sys/FastChat/blob/e112299e3c3529de0c430b98fd24743296d273f4/fastchat/serve/inference.py#L29

Unfortunately, it does not work for the 7B model. It keeps failing with `../aten/src/ATen/native/cuda/ScatterGatherKernel.cu:144: operator(): block: [2,0,0], thread: [104,0,0] Assertion idx_dim >= 0 && idx_dim < index_size && "index out of bounds" failed.`
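The failing assertion requires every gather index to lie in the half-open range `[0, index_size)`. Assuming the offending indices are token ids that exceed the model's vocabulary (a plausible effect of a tokenizer mismatch, not something this thread confirms), a quick CPU-side check can surface the bad ids before they reach a CUDA kernel. The helper name `out_of_range_ids` and the 32000-entry vocabulary below are hypothetical.

```python
from typing import List, Sequence


def out_of_range_ids(token_ids: Sequence[int], vocab_size: int) -> List[int]:
    """Return every id outside [0, vocab_size), the range the kernel asserts on."""
    return [t for t in token_ids if not 0 <= t < vocab_size]


# Hypothetical example: a tokenizer emits id 32000 for a model whose
# vocabulary has 32000 entries (valid ids are 0..31999).
ids = [1, 450, 32000]
print(out_of_range_ids(ids, vocab_size=32000))

# With a real tokenizer and model, the inputs would come from, e.g.:
# ids = tokenizer(prompt).input_ids
# bad = out_of_range_ids(ids, model.config.vocab_size)
```

A non-empty result points at a tokenizer/model vocabulary mismatch rather than at the memory settings.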

I'm experiencing the same issue.

@ganler @weiddeng It is a tokenizer version issue.

Please refer to https://github.com/lm-sys/FastChat/issues/199#issuecomment-1537618299 for the solution. Let us know whether it solves the problem, and feel free to re-open.