Error when fine-tuning: `use_cache` is not supported

@zhangsanfeng86 Why would you need the cache (`use_cache`) for fine-tuning? It is only useful for decoding.

@zhisbug How do I set `use_cache=False`? I just ran `train_mem.py`.

Did you turn on `gradient_checkpointing`? It needs to be turned on for `train_mem.py`, because it implicitly turns off `use_cache`.
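To illustrate what "turns off `use_cache` implicitly" means, here is a minimal pure-Python sketch. It assumes behavior similar to the check Hugging Face LLaMA models perform in their forward pass (when gradient checkpointing is active in training mode, a requested cache is disabled with a warning); `resolve_use_cache` is a hypothetical helper, not a FastChat or transformers API. Since `train_mem.py` parses Hugging Face `TrainingArguments`, enabling checkpointing there should correspond to passing `--gradient_checkpointing True`, but check the README command for your version.

```python
import warnings


def resolve_use_cache(use_cache: bool, gradient_checkpointing: bool, training: bool) -> bool:
    """Hypothetical helper: decide whether the KV cache stays enabled.

    Mirrors (as an assumption) the Hugging Face pattern where gradient
    checkpointing during training forces use_cache to False.
    """
    if gradient_checkpointing and training and use_cache:
        # The KV cache only speeds up autoregressive decoding, so turning
        # it off during fine-tuning loses nothing; a warning is emitted.
        warnings.warn(
            "use_cache=True is incompatible with gradient checkpointing. "
            "Setting use_cache=False."
        )
        return False
    return use_cache


if __name__ == "__main__":
    # Fine-tuning with gradient checkpointing: the cache is dropped.
    print(resolve_use_cache(use_cache=True, gradient_checkpointing=True, training=True))
    # Decoding (not training): the cache is kept.
    print(resolve_use_cache(use_cache=True, gradient_checkpointing=True, training=False))
```

A warning of this kind during fine-tuning is expected and does not indicate a failure.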

Thank you, I'll try!


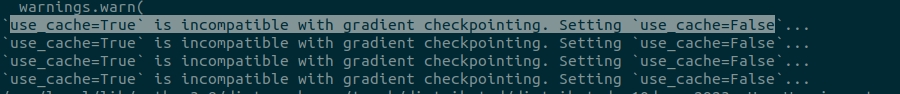

Hi @Michaelvll, I turned on `gradient_checkpointing` and got this warning when fine-tuning.

Hi, we have released a new training script and a new version of the weights (https://github.com/lm-sys/FastChat/blob/main/docs/weights_version.md). You can follow https://github.com/lm-sys/FastChat#fine-tuning-vicuna-7b-with-local-gpus, which uses only four A100 (40GB) GPUs.

The error you mentioned can be safely ignored. Could you try the latest training script again?

@merrymercy These three files seem to be missing from the Hugging Face repo for version 1.1:

special_tokens_map.json, tokenizer.model, tokenizer_config.json

Can you please add them? The apply_delta code fails with errors about missing tokenizer files.

Thank you.

@samarthsarin Could you uninstall fschat and reinstall the latest fschat?

The tokenizer files are omitted on purpose because we didn't change the tokenizer. `apply_delta.py` simply copies LLaMA's tokenizer.
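As a rough illustration of "copying LLaMA's tokenizer", here is a minimal sketch that copies the three tokenizer files named in this thread from a base LLaMA directory into the output directory of the delta-applied model. This is not FastChat's `apply_delta.py` (the real script may load and re-save the tokenizer instead); `copy_base_tokenizer` and the directory layout are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path

# File names taken from this thread; treat the exact set as an assumption.
TOKENIZER_FILES = ("special_tokens_map.json", "tokenizer.model", "tokenizer_config.json")


def copy_base_tokenizer(base_dir: str, target_dir: str) -> list:
    """Hypothetical helper: reuse the unchanged base tokenizer for the new weights.

    Copies every tokenizer file that exists in base_dir into target_dir
    and returns the names that were copied.
    """
    src, dst = Path(base_dir), Path(target_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for name in TOKENIZER_FILES:
        source = src / name
        if source.exists():
            shutil.copy2(source, dst / name)  # copy2 also preserves file metadata
            copied.append(name)
    return copied


if __name__ == "__main__":
    # Demo with throwaway directories standing in for real model paths.
    with tempfile.TemporaryDirectory() as base, tempfile.TemporaryDirectory() as out:
        for name in TOKENIZER_FILES:
            (Path(base) / name).write_text("placeholder")
        print(copy_base_tokenizer(base, str(Path(out) / "vicuna-7b")))
```

Because the tokenizer is identical to LLaMA's, shipping it again in the delta repo would be redundant, which is why the files are missing there.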

I was not aware that `apply_delta.py` was also modified in the latest push. Now it's working fine. Thank you.

Seems like the issue is resolved.