Reproducing Reptile on mini-imagenet

Hello. First of all, thanks for implementing so many meta-learning algorithms.

I ran `examples/vision/reptile_miniimagenet.py` without modification, using the default settings. However, I could not reach the 65.5% accuracy reported in README.md.

Should I change the code or the settings to reach that accuracy (65.5%)?

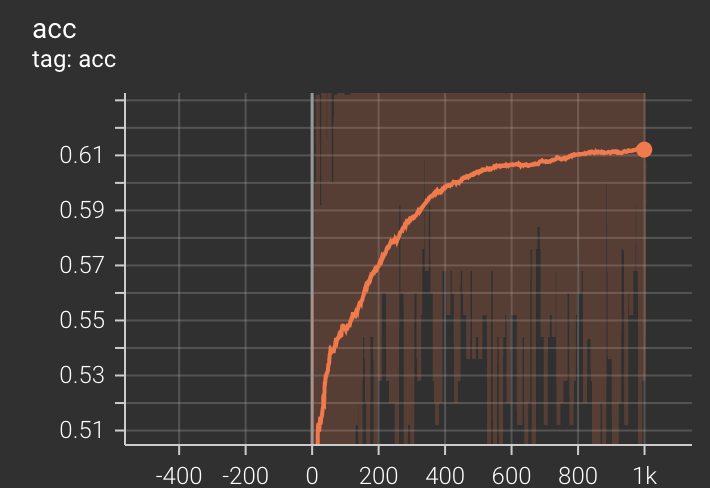

One unit on the x-axis is 100 iterations (i.e., 1k on the axis = 100,000 iterations).

This image is the "meta test accuracy" graph from TensorBoard. I got 60.0% accuracy at 100,000 iterations.

Thanks for reporting this, @shkim960520. The current hyper-parameters should match the TensorFlow code release, but I had to tune them a little to get 65.5%. If I recall correctly, it was `meta_bsz` and `fast_lr`, but I don't have the values at hand.

It would be a good idea to keep track of the hyper-parameters to replicate numbers in the README. Do you want to give a shot at tuning the hyper-parameters and updating the README accordingly?

Thanks for the reply, @seba-1511.

I think the current hyper-parameters are set correctly, because they are the same as the original hyper-parameters in the Reptile paper.

Of course, hyper-parameter tuning would help reach a higher accuracy such as 65.5%. For a reproduction, however, the code should reach similar accuracy without tuning.

It seems like replacing `model = l2l.vision.models.MiniImagenetCNN(ways)` with `model = l2l.vision.models.MiniImagenetCNN(ways, hidden_size=32)` on l. 86 fixes the issue, as it matches the original code. Could you confirm this also works on your side? And if so, do you want to submit a PR for it?

On my side, I ran two versions of `MiniImagenetCNN`: A with `hidden_size=32` and B with `hidden_size=64`. B reached about 62.3% accuracy, better than A at about 60.5%, but neither got to 65.5%.

I don't think there is a problem with your Reptile code, but I don't know what the cause is.

Closing: it seems the error came from decaying the meta learning rate, which is now fixed.
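
For anyone landing here later, below is a minimal sketch of what a decayed meta learning rate looks like in a Reptile outer loop. The thread does not say what exactly was wrong with the decay, so this is not the fix itself. It assumes a linear annealing schedule from a starting value down to a final value, and the names `linear_meta_lr`, `reptile_outer_step`, `meta_lr_start` and `meta_lr_final` are hypothetical, not taken from the example script.

```python
def linear_meta_lr(iteration, num_iterations, meta_lr_start=1.0, meta_lr_final=0.0):
    """Linearly anneal the meta learning rate over training.

    Assumption: a linear schedule; the defaults are illustrative only.
    """
    frac_done = iteration / num_iterations
    return frac_done * meta_lr_final + (1.0 - frac_done) * meta_lr_start


def reptile_outer_step(theta, adapted, meta_lr):
    """Move the meta-parameters toward the task-adapted parameters.

    theta and adapted are flat lists of floats standing in for model weights.
    """
    return [t + meta_lr * (a - t) for t, a in zip(theta, adapted)]


if __name__ == "__main__":
    theta = [0.0, 2.0]
    adapted = [1.0, 0.0]  # pretend result of the inner-loop adaptation
    for it in (0, 50, 100):
        lr = linear_meta_lr(it, 100)
        print(it, lr, reptile_outer_step(theta, adapted, lr))
```

If the decay is applied incorrectly (for example, if the step size shrinks too early), the outer update moves the meta-parameters less than intended, which could plausibly show up as a lower final meta-test accuracy.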